There is a multitude of agile testing techniques, many of them quite sophisticated. The DAD process framework can help guide your process tailoring decisions.

Agile developers are said to be quality infected, and disciplined agilists strive to validate their work to the best of their ability. As a result, they are finding ways to bring testing and quality assurance techniques into their work practices as much as possible. Doing so means rethinking when to test, who should test, and even how to test. Although this sounds daunting at first, the agile approach to testing is basically to work smarter, not harder.

This article describes how to take a disciplined approach to tailoring a testing strategy best suited to your agile team. It adapts material from my Agile Testing and Quality Strategies article[1], which describes these strategies in greater detail than I do here. I also put this advice in the context of the Disciplined Agile Delivery (DAD) process framework[2], a new-generation agile process framework.

Disciplined agile delivery

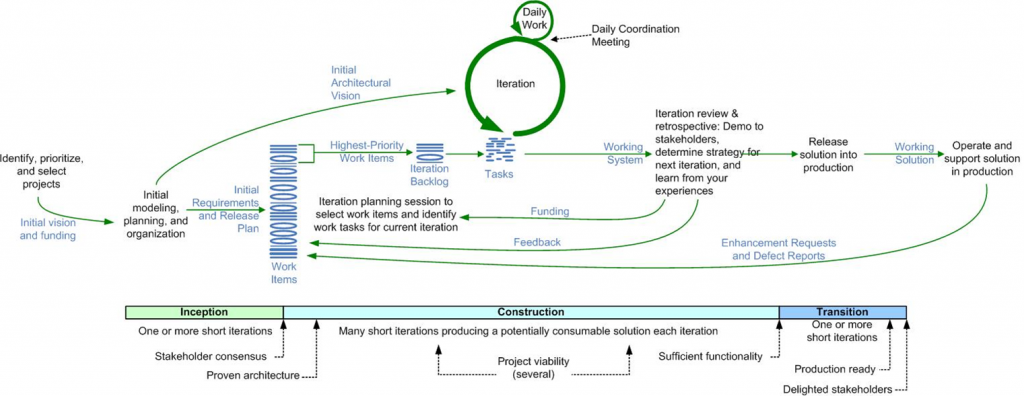

The DAD process framework provides a coherent, end-to-end strategy for how agile solution delivery works in practice. It is a people-first, learning-oriented, and hybrid agile approach to IT solution delivery. It has a risk-value lifecycle, is goal-driven, is scalable, and is enterprise aware. The basic/agile lifecycle for DAD is presented in Figure 1. As you can see, it extends the Scrum lifecycle to encompass the full delivery lifecycle, from project start to delivery into production. There is also an advanced/lean lifecycle, loosely based on Kanban, but for this article the basic lifecycle is sufficient.

Figure 1. The basic DAD lifecycle.

On agile projects, testing occurs throughout the lifecycle, including very early in the project. Although there will likely be some end-of-lifecycle testing during the Transition phase, it should be minimal. Instead, testing activities, including system integration testing and user acceptance testing, should occur throughout the lifecycle from the very start. This can be a significant change for organizations that are used to doing the majority of testing at the end of the lifecycle, during a release/transition phase. So, the graphical depiction in Figure 1 aside, the Transition phase should be as short as possible; very little work should occur there at all.

An interesting feature of the DAD process framework is that it is not prescriptive but goal-driven. So instead of saying “here is THE agile way to test,” we prefer a more mature approach: “you need to test, there are several ways to do so, here are the trade-offs associated with each, and here is when you would consider adopting each one.” Granted, a minority of people prefer to be told what to do, but my experience is that the vast majority of IT professionals prefer the flexibility of having options.

So what are some of your agile testing options? Although there is much written in agile literature about the merits of developers doing their own testing, sometimes even taking a test-first approach, the reality is that there is a wide range of agile testing strategies. These strategies include:

- Whole team testing

- Test-driven development (TDD)

- Independent parallel testing

- End-of-lifecycle testing

Let’s look at each strategy one at a time and then consider if and when to apply them.

Whole team testing

An organizational strategy common in the agile community, popularized by Kent Beck in Extreme Programming (XP)[3], is for the team to include the right people so that they have the skills and perspectives required for the team to succeed. To successfully deliver a working solution on a regular basis, the team will need to include people with analysis skills, design skills, programming skills, leadership skills, and yes, even people with testing skills. Obviously this isn’t a complete list of skills required by the team, nor does it imply that everyone on the team has all of these skills. Furthermore, everyone on an agile team contributes in any way that they can, thereby increasing the overall productivity of the team. This strategy is called “whole team”.

With a whole team approach, testers are embedded in the development team and actively participate in all aspects of the project[4]. Agile teams are moving away from the traditional approach where someone has a single specialty that they focus on (for example, Sally just does programming, Sanjiv just does architecture, and John just does testing) to an approach where people strive to become generalizing specialists[5] with a wider range of skills. So Sally, Sanjiv, and John will all be willing to be involved with programming, architecture, and testing activities, and more importantly will be willing to work together and to learn from one another to become better over time. Sally’s strengths may still lie in programming, Sanjiv’s in architecture, and John’s in testing, but those won’t be the only things they do on the agile team. If Sally, Sanjiv, and John are new to agile and are currently only specialists, that’s OK: by adopting non-solo development practices such as pair programming and working in short feedback cycles, they will quickly pick up new skills from their teammates (and transfer their existing skills to their teammates too).

Test-driven development

With a whole team testing approach, the development team itself performs most, and perhaps all, of the testing. Team members run their test suite(s) on a regular basis, typically several times a day, as part of their overall continuous integration (CI) strategy. People new to agile will often take a “test after” approach, where they write a bit of production code and then write the tests to validate it. This is a great start, but more advanced agilists will strive to take a test-driven approach.

Test-driven development (TDD) is an agile development practice that combines refactoring and test-first development (TFD). Refactoring is a technique where you make a small change to your existing source code or source data schema to improve its design without changing its semantics. Examples of refactorings include moving an operation up the inheritance hierarchy and renaming an operation in application source code; aligning fields and applying a consistent font on user interfaces; and renaming a column or splitting a table in a relational database. When you first decide to work on a task, you look at the existing source and ask whether it is the best design possible to allow you to add the new functionality you’re working on. If it is, proceed with TFD. If not, invest the time to refactor just the portions of the code and data that need it, so that the design is the best possible; this improves quality and thereby reduces your technical debt over time.
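To make the refactoring idea concrete, here is a minimal Python sketch of one refactoring mentioned above, moving an operation up the inheritance hierarchy (often called Pull Up Method). The classes `Account`, `Savings`, and `Checking` and the `describe()` operation are hypothetical, and the "before" versions are kept side by side only so that one test can compare them. The point is that the same behavioural expectations pass before and after the change, which is what "without changing its semantics" means in practice.

```python
import unittest


# BEFORE (hypothetical): both subclasses carry an identical copy of describe().
class AccountBefore:
    def __init__(self, owner: str, balance: float) -> None:
        self.owner = owner
        self.balance = balance


class SavingsBefore(AccountBefore):
    def describe(self) -> str:
        return f"{self.owner}: {self.balance:.2f}"


class CheckingBefore(AccountBefore):
    def describe(self) -> str:
        return f"{self.owner}: {self.balance:.2f}"


# AFTER: the duplicated operation has been pulled up into the superclass.
class Account:
    def __init__(self, owner: str, balance: float) -> None:
        self.owner = owner
        self.balance = balance

    def describe(self) -> str:
        return f"{self.owner}: {self.balance:.2f}"


class Savings(Account):
    pass


class Checking(Account):
    pass


class PullUpMethodPreservesBehavior(unittest.TestCase):
    """The same expectations must hold before and after the refactoring."""

    def test_describe_is_unchanged(self) -> None:
        pairs = [
            (SavingsBefore("Sally", 10), Savings("Sally", 10)),
            (CheckingBefore("John", 2.5), Checking("John", 2.5)),
        ]
        for before, after in pairs:
            self.assertEqual(before.describe(), after.describe())

    def test_describe_now_lives_on_the_superclass(self) -> None:
        self.assertIn("describe", vars(Account))
        self.assertNotIn("describe", vars(Savings))
        self.assertNotIn("describe", vars(Checking))
```

Run it with `python -m unittest`. In a real code base you would edit the classes in place rather than keep both versions; your existing test suite would then play the role of `test_describe_is_unchanged`.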

With TFD you write a single test and then you write just enough software to fulfill that test. The first step is to quickly add a test, basically just enough code to fail. Next you run your tests (often the complete test suite, although for the sake of speed you may decide to run only a subset) to ensure that the new test does in fact fail. You then update your functional code to make it pass the new test. The fourth step is to run your tests again. If they fail, you need to update your functional code and retest. Once the tests pass, the next step is to start over.
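The TFD cycle can be sketched in a single Python file using the standard `unittest` module. This is an illustration under stated assumptions rather than a prescribed workflow: `is_leap_year()` is a hypothetical function, and the placeholder-then-redefine trick exists only so that one script can show both the failing ("red") and passing ("green") runs. Normally you would edit the function in place and rerun your test runner.

```python
import io
import unittest


# Step 1: write a single test first. It pins down the behaviour wanted from a
# hypothetical is_leap_year() function that has not been written yet.
class LeapYearTest(unittest.TestCase):
    def test_leap_year_rules(self) -> None:
        self.assertTrue(is_leap_year(2024))   # divisible by 4
        self.assertFalse(is_leap_year(2023))  # not divisible by 4
        self.assertFalse(is_leap_year(1900))  # century not divisible by 400
        self.assertTrue(is_leap_year(2000))   # century divisible by 400


def run_tests() -> bool:
    """Run the test case quietly and report whether every test passed."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LeapYearTest)
    result = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
    return result.wasSuccessful()


# Step 2: with only a placeholder in place, run the tests and confirm that
# the new test really does fail ("red").
def is_leap_year(year: int) -> bool:
    raise NotImplementedError


red_passed = run_tests()


# Step 3: write just enough functional code to make the new test pass.
def is_leap_year(year: int) -> bool:  # deliberately replaces the placeholder
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# Step 4: run the tests again. Once they pass ("green"), start over with the
# next test.
green_passed = run_tests()
print(f"placeholder passed: {red_passed}; implementation passed: {green_passed}")
```

Running the file prints `placeholder passed: False; implementation passed: True`, mirroring the second and fourth steps of the cycle.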

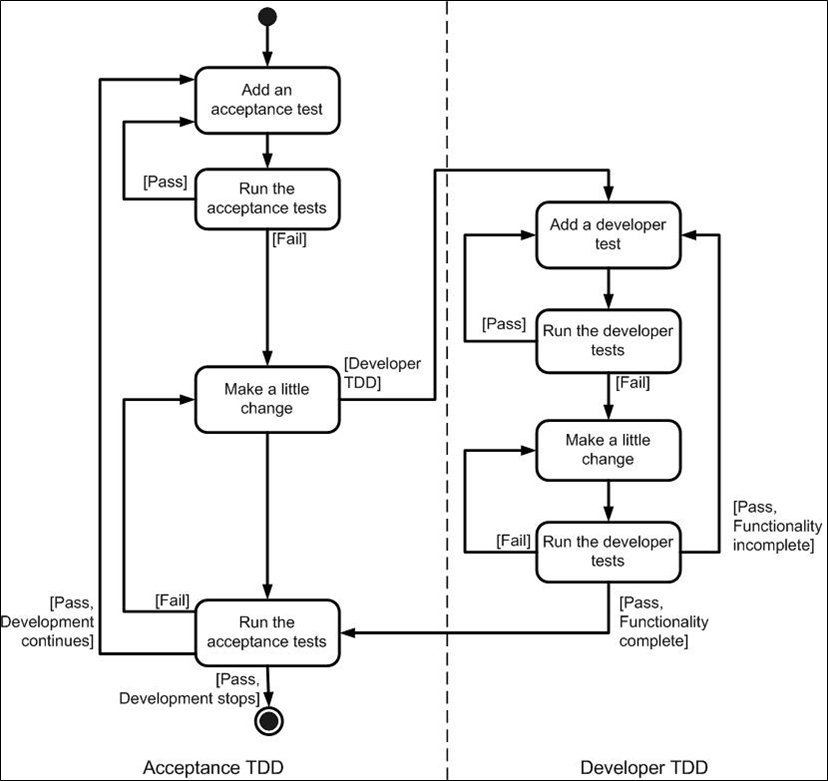

There are two levels of TDD: acceptance TDD (ATDD) and developer TDD. With ATDD you perform TDD at the requirements level by writing a single customer test, the equivalent of a function test or acceptance test in the traditional world. Acceptance TDD is often called behavior-driven development (BDD) or story test-driven development: you first automate a failing story test, then drive the design via TDD until the story test passes (a story test is a customer acceptance test). Developer TDD is performed at the design level with developer tests, sometimes called xUnit tests.

With ATDD you are not required to also take a developer TDD approach to implementing the production code, although the vast majority of teams doing ATDD also do developer TDD. As you see in Figure 2, when you combine ATDD and developer TDD, the creation of a single acceptance test in turn requires you to iterate several times through the “write a test, write production code, get it working” cycle at the developer TDD level. Clearly, to make TDD work you need to have one or more testing frameworks available to you; without such tools TDD is virtually impossible. The greatest challenge with adopting ATDD is a lack of skills amongst existing requirements practitioners, yet another reason to promote generalizing specialists within your organization. Similarly, the greatest challenge with adopting developer TDD is a lack of skills amongst existing developers.

Figure 2. ATDD and TDD together.
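To illustrate how a single acceptance test drives several developer TDD cycles, here is a minimal Python sketch that uses `unittest` as the testing framework. The free-shipping story, the 50.00 threshold, the 4.95 flat fee, and every function name are hypothetical. The story test is phrased in the Given/When/Then style common in BDD; it would have been written first and would have stayed failing until the smaller developer tests, each written in its own cycle, had driven out `order_subtotal()` and `shipping_cost()`.

```python
import unittest
from decimal import Decimal

FREE_SHIPPING_THRESHOLD = Decimal("50.00")  # hypothetical business rule
FLAT_SHIPPING = Decimal("4.95")             # hypothetical flat fee


# Production code, grown one developer-TDD cycle at a time.
def order_subtotal(lines: list[tuple[Decimal, int]]) -> Decimal:
    """Sum unit price times quantity over the order lines."""
    return sum((price * qty for price, qty in lines), Decimal("0"))


def shipping_cost(subtotal: Decimal) -> Decimal:
    return Decimal("0") if subtotal >= FREE_SHIPPING_THRESHOLD else FLAT_SHIPPING


def order_total(lines: list[tuple[Decimal, int]]) -> Decimal:
    subtotal = order_subtotal(lines)
    return subtotal + shipping_cost(subtotal)


# Acceptance (story) test: written first, red until the units below exist.
class FreeShippingStoryTest(unittest.TestCase):
    def test_order_of_50_or_more_ships_free(self) -> None:
        # Given a cart worth exactly 50.00
        lines = [(Decimal("20.00"), 2), (Decimal("10.00"), 1)]
        # When the shopper checks out
        total = order_total(lines)
        # Then no shipping is charged
        self.assertEqual(total, Decimal("50.00"))

    def test_smaller_order_pays_flat_shipping(self) -> None:
        self.assertEqual(order_total([(Decimal("10.00"), 1)]), Decimal("14.95"))


# Developer tests: each written in its own small TDD cycle while the
# acceptance test above was still failing.
class OrderSubtotalTest(unittest.TestCase):
    def test_empty_order_is_zero(self) -> None:
        self.assertEqual(order_subtotal([]), Decimal("0"))

    def test_multiplies_price_by_quantity(self) -> None:
        self.assertEqual(order_subtotal([(Decimal("2.50"), 4)]), Decimal("10.00"))


class ShippingCostTest(unittest.TestCase):
    def test_threshold_is_inclusive(self) -> None:
        self.assertEqual(shipping_cost(Decimal("50.00")), Decimal("0"))

    def test_below_threshold_pays_flat_fee(self) -> None:
        self.assertEqual(shipping_cost(Decimal("49.99")), FLAT_SHIPPING)
```

Run it with `python -m unittest`. `Decimal` is used instead of `float` so that money amounts compare exactly.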

With a TDD approach your tests effectively become detailed specifications that are created on a just-in-time (JIT) basis. Like it or not, most programmers don’t read the written documentation for a solution; instead they prefer to work with the code. And there’s nothing wrong with this. When trying to understand a class or operation, most programmers will first look for sample code that already invokes it. Well-written unit/developer tests do exactly this: they provide a working specification of your functional code, and as a result unit tests effectively become a significant portion of your technical documentation.
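As a small illustration of developer tests serving as a working specification, here is a Python sketch. The `BoundedQueue` class and its rules are hypothetical; what matters is that each test name states one rule and each test body is sample code showing how to invoke the class. The helper `spec_from_tests()`, also hypothetical, simply turns the test names into sentences to show how readable such a specification can be.

```python
import unittest


class BoundedQueue:
    """Hypothetical class under test: a FIFO queue with a fixed capacity."""

    def __init__(self, capacity: int) -> None:
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._items: list[object] = []

    def put(self, item: object) -> None:
        if len(self._items) >= self._capacity:
            raise OverflowError("queue is full")
        self._items.append(item)

    def get(self) -> object:
        if not self._items:
            raise LookupError("queue is empty")
        return self._items.pop(0)


class BoundedQueueSpec(unittest.TestCase):
    def test_items_come_out_in_the_order_they_went_in(self) -> None:
        q = BoundedQueue(2)
        q.put("a")
        q.put("b")
        self.assertEqual([q.get(), q.get()], ["a", "b"])

    def test_putting_into_a_full_queue_raises_overflow_error(self) -> None:
        q = BoundedQueue(1)
        q.put("a")
        with self.assertRaises(OverflowError):
            q.put("b")

    def test_getting_from_an_empty_queue_raises_lookup_error(self) -> None:
        with self.assertRaises(LookupError):
            BoundedQueue(1).get()

    def test_capacity_below_one_is_rejected(self) -> None:
        with self.assertRaises(ValueError):
            BoundedQueue(0)


def spec_from_tests(case: type[unittest.TestCase]) -> list[str]:
    """Turn test method names (sorted by the loader) into readable sentences."""
    names = unittest.defaultTestLoader.getTestCaseNames(case)
    return [name.removeprefix("test_").replace("_", " ") for name in names]


if __name__ == "__main__":
    for sentence in spec_from_tests(BoundedQueueSpec):
        print("-", sentence)
```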

Similarly, acceptance tests can form an important part of your requirements documentation. This makes a lot of sense when you stop and think about it. Your acceptance tests define exactly what your stakeholders expect of your solution; therefore they specify your critical requirements. The implication is that acceptance TDD is a JIT detailed requirements specification technique and developer TDD is a JIT detailed design specification technique. Writing executable specifications[6] is one of the best practices of Agile Modeling (www.agilemodeling.com).
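One common way to write acceptance tests as executable requirements is a table of concrete examples agreed with stakeholders, in the spirit of Specification by Example[6]. The following Python sketch assumes a hypothetical loyalty-discount rule and a hypothetical `loyalty_discount_percent()` function. The table is the requirement, and `subTest` reports each failing row separately, so a stakeholder can see exactly which example is not yet satisfied.

```python
import unittest

# Hypothetical rule agreed with stakeholders, written as concrete examples:
# (years as a customer, order amount, expected discount in percent)
DISCOUNT_EXAMPLES = [
    (0, 100, 0),
    (2, 100, 5),
    (5, 100, 10),
    (5, 20, 0),   # small orders never get a discount
    (2, 49, 0),   # just below the minimum order amount
    (2, 50, 5),   # exactly at the minimum order amount
]


def loyalty_discount_percent(years: int, amount: int) -> int:
    """Hypothetical implementation that the examples above specify."""
    if amount < 50:
        return 0
    if years >= 5:
        return 10
    if years >= 2:
        return 5
    return 0


class LoyaltyDiscountRequirements(unittest.TestCase):
    def test_examples_agreed_with_stakeholders(self) -> None:
        for years, amount, expected in DISCOUNT_EXAMPLES:
            with self.subTest(years=years, amount=amount):
                self.assertEqual(loyalty_discount_percent(years, amount), expected)
```

Adding a row to the table is how a new or clarified requirement enters the specification; the test then fails until the code honours it.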

Although many agilists talk about TDD, the reality is that far more of them seem to be doing “test after” development, where they write some code and then write one or more tests to validate it. TDD requires significant discipline; in fact, it requires a level of discipline found in few traditional coders, particularly coders who follow solo approaches to development instead of non-solo approaches such as pair programming. Without a pair keeping you honest, it’s easy to fall back into the habit of writing production code before writing test code. If you write the tests very soon after you write the production code, in other words “test immediately after,” it’s pretty much as good as TDD. The problem occurs when you write the tests days or weeks later, if at all.

The popularity of testing tools such as Clover (a code coverage tool) and Jester (a mutation testing tool) amongst agile programmers is a clear sign that many of them really are taking a “test after” approach. These tools warn you when you’ve written code that your tests don’t exercise or don’t effectively check, prodding you to write the tests that you would hopefully have written first via TDD.

Parallel independent testing

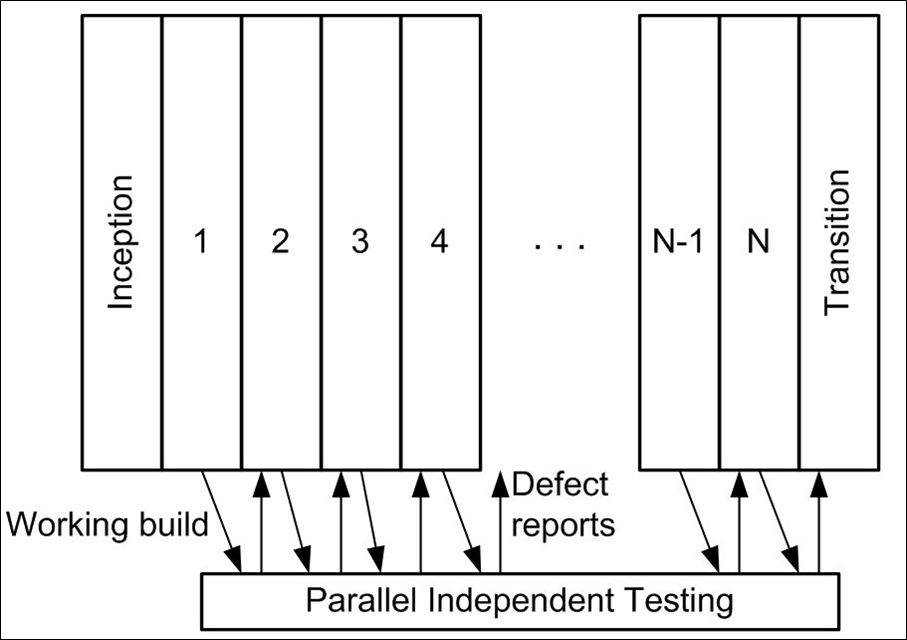

The whole team approach to development, where agile teams test to the best of their ability, is a great start, but it isn’t sufficient in some situations. In these situations, described below, you need to consider instituting a parallel independent test team that performs some of the more difficult (or perhaps advanced is a better term) forms of testing. As you can see in Figure 3, the basic idea is that on a regular basis the development team makes its working build available to the independent test team, or perhaps automatically deploys it via its continuous delivery tools, so that the independent testers can test it. The goal of this testing effort is not to redo the confirmatory testing already being done by the development team, but instead to identify the defects that have fallen through the cracks. The implication is that this independent test team does not need a detailed requirements specification, although it may need architecture diagrams, a scope overview, and a list of changes since the last time the development team sent it a build. Instead of testing against the specification, the independent testing effort focuses on production-level system integration testing, investigative testing, and usability testing, to name a few things.

Figure 3. Parallel independent testing throughout the lifecycle.

It is important to recognize that the development team still does the majority of the testing when an independent test team exists. It is just that the independent test team is doing forms of testing that the development team either doesn’t (yet) have the skills to perform or would find too expensive to perform.

You can also see in Figure 3 that the independent test team reports defects back to the development team. The development team treats these defects as a type of requirement: they’re prioritized, estimated, and put on the work item stack.
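The idea that defects go onto the same prioritized stack as requirements can be sketched as a simple data structure. This is a hypothetical Python illustration, not DAD's definition of a work item stack: it assumes integer priorities (a lower number is more important), point estimates, and a team that pulls items strictly from the top until the next item no longer fits its capacity.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class WorkItem:
    priority: int                        # lower number = more important
    seq: int                             # tie-breaker: earlier items first
    title: str = field(compare=False)
    kind: str = field(compare=False)     # "requirement" or "defect"
    estimate_points: int = field(compare=False)


class WorkItemStack:
    """Hypothetical sketch: requirements and defects share one prioritized stack."""

    def __init__(self) -> None:
        self._heap: list[WorkItem] = []
        self._counter = itertools.count()

    def add(self, title: str, kind: str, priority: int, estimate_points: int) -> WorkItem:
        item = WorkItem(priority, next(self._counter), title, kind, estimate_points)
        heapq.heappush(self._heap, item)
        return item

    def pull(self, capacity_points: int) -> list[WorkItem]:
        """Take items from the top until the next one no longer fits the capacity."""
        taken: list[WorkItem] = []
        while self._heap and self._heap[0].estimate_points <= capacity_points:
            item = heapq.heappop(self._heap)
            capacity_points -= item.estimate_points
            taken.append(item)
        return taken


if __name__ == "__main__":
    stack = WorkItemStack()
    stack.add("Customer can export invoices", "requirement", priority=2, estimate_points=5)
    stack.add("Rounding error in invoice totals", "defect", priority=1, estimate_points=2)
    stack.add("Audit log for refunds", "requirement", priority=3, estimate_points=8)
    for item in stack.pull(capacity_points=8):
        print(f"{item.kind}: {item.title}")
```

With these sample items and 8 points of capacity, the defect reported by the independent test team is pulled first, then the invoice-export requirement; the 8-point audit-log item waits because only 1 point of capacity remains.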

There are several reasons why you should consider parallel independent testing:

Investigative testing: Confirmatory testing approaches, such as TDD, validate that you’ve implemented the requirements as they’ve been described to you. But what happens when requirements are missing? User stories, a favorite requirements elicitation technique within the agile community, are a great way to explore functional requirements, but defects surrounding non-functional requirements such as security, usability, and performance tend to be missed with this approach.

Lack of resources: Many development teams do not have the resources required to perform effective system integration testing; from an economic point of view, these resources must be shared across multiple teams. System integration tests often require expensive environments that go beyond what an individual project team will have. The implication is that you need an independent test team, working in parallel to the development team(s), to address these sorts of issues.

Large or distributed teams: Large or distributed teams are often subdivided into smaller teams, and when this happens system integration testing of the overall solution can become complex enough that a separate team should consider taking it on. In short, whole team testing works well for agile in the small, but for more complex solutions and agile at scale you need to be more sophisticated.

Complex domains: When you have a very complex domain (perhaps you’re working on life-critical software or on financial derivative processing), whole team testing approaches can prove insufficient. Having a parallel independent testing effort can reduce the risk of serious defects slipping through to production.

Complex technical environments: When you’re working with multiple technologies, legacy solutions, or legacy data sources the testing of your solution can become very difficult.

Regulatory compliance: Some regulations require you to have an independent testing effort. My experience is that the most efficient way to do so is to have it work in parallel to the development team.

Production readiness testing: The solution that you’re building must “play well” with the other solutions currently in production when your solution is released. To do this properly you must test against versions of other solutions which are currently under development, implying that you need access to updated versions on a regular basis. This is fairly straightforward in small organizations, but if your organization has dozens, if not hundreds, of IT projects underway, it becomes overwhelming for individual development teams to gain such access. A more efficient approach is to have an independent test team be responsible for such enterprise-level system integration testing.

Some agilists will claim that you don’t need parallel independent testing, and in simple situations this is clearly true. The good news is that it’s incredibly easy to determine whether or not your independent testing effort is providing value: simply compare the likely impact of the defects/change stories being reported with the cost of doing the independent testing.
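The comparison can be sketched as a small calculation. The Python below assumes that "likely impact" means expected cost, that is, each reported defect's estimated chance of reaching production multiplied by what it would cost there; the class and function names and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReportedDefect:
    """A defect or change story raised by the independent test team."""
    summary: str
    chance_it_would_reach_production: float  # team's estimate, 0.0 to 1.0
    cost_if_it_reached_production: float     # e.g. rework, outage, fines


def likely_impact(defects: list[ReportedDefect]) -> float:
    """Expected cost avoided: sum of probability times cost over all reports."""
    return sum(
        d.chance_it_would_reach_production * d.cost_if_it_reached_production
        for d in defects
    )


def independent_testing_adds_value(
    defects: list[ReportedDefect], cost_of_independent_testing: float
) -> bool:
    return likely_impact(defects) > cost_of_independent_testing


if __name__ == "__main__":
    # Purely illustrative numbers for one release.
    reports = [
        ReportedDefect("Connection pool exhausted under load", 0.5, 40_000),
        ReportedDefect("Checkout button unreachable by screen reader", 0.9, 5_000),
        ReportedDefect("Nightly batch conflicts with billing system", 0.2, 100_000),
    ]
    print(likely_impact(reports), independent_testing_adds_value(reports, 30_000))
```

With these illustrative figures the likely impact is about 44,500 against a testing cost of 30,000, so the independent effort would pay for itself; in practice the estimates are judgment calls, so treat the result as input to a decision rather than the decision itself.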

End of lifecycle testing

An important part of the release effort for many agile teams is end-of-lifecycle testing, where an independent test team validates that the solution is ready to go into production. If the parallel independent testing practice has been adopted, end-of-lifecycle testing can be very short because the issues have already been substantially covered. As you see in Figure 3, the independent testing efforts stretch into the Transition phase of the DAD lifecycle because the independent test team will still need to test the complete solution once it’s available.

There are several reasons why you still need to do end-of-lifecycle testing:

It’s professional to do so: You’ll minimally want to do one last run of all of your regression tests in order to be in a position to officially declare that your solution is fully tested. This would occur once iteration N, the last construction iteration, finishes (or as the last thing you do in iteration N; don’t split hairs over stuff like that).

You may be legally obligated to do so: This may be because of the contract that you have with the business customer or because of regulatory compliance. Many agile teams find themselves in such situations, as the November 2009 State of the IT Union survey discovered[7].

Your stakeholders require it: Your stakeholders, particularly your operations department, will likely require some sort of testing effort before releasing your solution into production in order to feel comfortable with the quality of your work.

Little is publicly discussed in the mainstream agile community about end-of-lifecycle testing. The assumption of many people following first-generation agile methods such as Scrum seems to be that techniques such as TDD are sufficient. This might be because much of the mainstream agile literature focuses on small, co-located agile development teams working on fairly straightforward solutions. But when one or more scaling factors (such as large team size, geographically distributed teams, regulatory compliance, or complex domains) apply, you need more sophisticated testing strategies.

Got discipline?

A critical aspect of the DAD process framework is that it is goal-driven. This means that DAD doesn’t prescribe a specific technique, such as ATDD; instead it suggests several techniques to address a goal and leaves it to you to choose the one best suited to the situation you face. To help you make this decision intelligently, the DAD process framework describes the trade-offs of each technique and suggests when it is best to apply it.

One aspect of discipline is to recognize that you have to tailor your process to reflect the situation you find yourself in. This includes your testing processes. Other aspects of discipline pertinent to your agile testing efforts are to purposely adopt techniques such as ATDD and TDD that shorten the feedback cycle (thereby reducing the average cost of addressing defects), to streamline your transition/release efforts through automation, to perform testing earlier in the lifecycle, and of course to apply the inherent discipline required by many agile techniques.

There are far more agile testing strategies than what I’ve described here. My goal with this article was to introduce you to the idea that there is a multitude of agile testing techniques (and quality techniques, for that matter), and that many of them are quite sophisticated. I also wanted to introduce you to the DAD process framework and illuminate how it can help guide your process tailoring decisions. With a bit of flexibility, you will soon discover that agilists have some interesting insights into how to approach testing.

References

1 Ambler, S.W. (2003). Agile Testing and Quality Strategies: Discipline Over Rhetoric. www.ambysoft.com/essays/agileTesting.html

2 Ambler, S.W. and Lines, M. (2012). Disciplined Agile Delivery: A Practitioner’s Guide to Agile Software Delivery in the Enterprise. IBM Press. www.ambysoft.com/books/dad.html

3 Beck, K. and Andres, C. (2005). Extreme Programming Explained: Embrace Change, 2nd Edition. Addison-Wesley.

4 Crispin, L. and Gregory, J. (2009). Agile Testing: A Practical Guide for Testers and Agile Teams. Addison-Wesley.

5 Ambler, S.W. (2003). Generalizing Specialists: Improving Your IT Career Skills. www.agilemodeling.com/essays/generalizingSpecialists.htm

6 Adzic, G. (2011). Specification by Example: How Successful Teams Deliver the Right Software. Manning Publications.

7 Ambler, S.W. (2009). November 2009 DDJ State of the IT Union Survey. www.ambysoft.com/surveys/stateOfITUnion200911.html

The book: Disciplined Agile Delivery: A Practitioner’s Guide to Agile Software Delivery in the Enterprise by Scott W. Ambler and Mark Lines was released in June 2012 by IBM Press.

Scott W. Ambler
