Introduction

Everything changes. It’s the only constant. The landscape of software testing is undergoing a fast and dramatic change driven by societal, business and technology changes that have placed software everywhere.

Computing is ubiquitous. There is hardly anything today that doesn’t contain a CPU or information transmission capability, with software to drive it. Examples range from smart toasters to passports with microchips that log and share information, with more on the way every day as technologies cross and blend. The thinking insulin pump delivers correct doses of life-saving insulin, wirelessly sends reports to your phone and your doctor’s, and orders a refill when supplies get low. Just a few years ago, who would have thought that the test strategy for an insulin pump would need to include communication, security and performance testing? In a relatively short time, financial transactions went from paper checks to online bill pay and now to mPay (mobile payments). The technologies that have enabled such acceleration in product development have changed the testing landscape, dramatically impacting testers and testing.

Software testing skills, training, tools and process have traditionally lagged behind the development of new technologies and products for software programming. Even as everyone seems to have become Agile, there are frameworks for project management (such as Scrum) and many recommendations for development practices like eXtreme Programming (XP), but no common test practice reference to keep up with these more dynamic practices. This article examines some of the larger business trends that impact our products and delivery. We will examine some technology, process and mega-trends to better prepare you for the new normal in software testing. These trends will impact your work. The first step in projecting these impacts is understanding the changes. Let’s begin!

Where are we now?

The Business of Software Development

The software development world is driven by business and enabled by technology. Already we take for granted what seemed so new just a few years ago.

From the beginning of software development, testing was thought of as a necessary evil that always needed to be faster, deliver better quality to customers and keep costs down. This idea is not new. Yet the speed and necessity of these demands are ever-increasing: faster SDLCs and faster product distribution (even recently, from CD distribution to downloadable product to app download and SaaS), along with ever more distributed teams and the need for high-volume test automation to meet these demands.

Many people think the move to Agile development practices is a recent shift in the testing landscape. The Agile Manifesto, the document that started it all, was written in 2001; that’s 12 years ago. Scrum was presented publicly as a framework for software development in 1995, so it is now more than 17 years old. No longer new. Many companies have moved past Scrum to Kanban, which is a newer SDLC, but it is just a digital application of a process introduced in 1953.

While Agile and Scrum are not new, there have been significant changes in product development coupled with Agile. Offshoring and outsourcing Agile development, which we will refer to as distributed Agile, helps maximize efficiency and leads to faster product development. These changing practices are some of the many pressures on test teams to automate as much testing as possible. What has recently changed the landscape of software testing is the full expectation that teams that test software will be Agile, distribute tasks for best time and cost efficiency and accomplish high-volume test automation simultaneously. Doing this seamlessly and fast is no longer an expectation. It’s a given. It’s the new normal, and it’s not easy. It requires great communication, ALM tools, expert automation frameworks and highly skilled teams. Yet making Agile, distributed testing and test automation effective and efficient while reducing work stress and working at a sustainable pace is still out of reach for some teams.

Game Changers

There are some big trends in business, technology and products, but we need to focus on the disruptive innovations: not progress or direction adjusters (they are important as well) but wholesale change, the big trends that will impact our work the most and need the most preparation.

These trends are different than adding a new technology; for example, moving from a mobile optimized website to a native app or moving to HTML5. Testers will need new skills and perhaps new tools for testing HTML5. This is progress. There will always be technical progress, new languages, platforms, operating systems, databases and tools. Progress is not a game changer.

Disruptive Innovation

Disruptive innovation is an innovation that creates a new market by applying a different set of values, which ultimately (and unexpectedly) overtakes an existing market (for example, lower-priced smartphones). In technology, one disruptive innovation, or game changer, was the move from procedural to object-oriented programming. It abruptly and radically restructured software development and it hasn’t been the same since.

A disruptive innovation is an innovation that helps create a new market and value network and eventually goes on to disrupt an existing market and value network (over a few years or decades), displacing an earlier technology. The term is used in business and technology literature to describe innovations that improve a product or service in ways that the market does not expect, typically first by designing for a different set of consumers in the new market and later by lowering prices in the existing market.

Just a few years ago, Salesforce.com created disruptive innovation. Salesforce.com’s logo showing “No software.” tells the story. People initially thought of Salesforce as a software company, but it is now better understood that it is really a service company that produces software as a service.

This leads us to the first of the mega-trends, or disruptive innovations, changing the landscape of software testing:

1. The Cloud and SaaS

Much has been written about SaaS and how the Cloud is taking over. We will focus on how both of these technologies are changing the landscape of software testing. NIST defines cloud computing as a model for enabling convenient, on-demand network access to a shared pool of configurable computing resources—for example, networks, servers, storage, applications and services—that can be rapidly provisioned and released with minimal management effort or service provider interaction.

Often, Cloud Computing and Software as a Service (SaaS) are used interchangeably, but they are distinct. Cloud computing refers to the bigger picture. It is the broad concept of using the internet to allow people to access enabled services— Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Cloud computing means that infrastructure, applications and business processes can be delivered to you as a service, via the Internet or your own network.

Software as a Service (SaaS) is a service within Cloud Computing. In the SaaS layer, the service provider hosts the software so you don’t need to install, manage, or buy hardware for it. All you have to do is connect and use it. SaaS examples include customer relationship management as a service (www.thebulldogcompanies.com/what-is-cloud-computing).

2. Virtualization

Virtualization (or virtualisation) is the simulation of the software and/or hardware upon which other software runs. This simulated environment is called a virtual machine (VM). There are many forms of virtualization, distinguished primarily by the computing architecture layer. Virtualized components may include hardware platforms, operating systems (OS), storage devices, network devices or other resources.

Virtualization can be viewed as part of an overall trend in enterprise IT that includes autonomic computing, a scenario in which the IT environment will be able to manage itself based on perceived activity, and utility computing, wherein computer processing power is seen as a utility that clients can pay for on an as-needed basis. A primary goal of virtualization is to centralize administrative tasks while improving scalability and overall hardware-resource utilization. With virtualization, several operating systems can be run in parallel on a single central processing unit (CPU) to reduce overhead costs. This is not to be confused with multitasking, where several programs run on the same OS.

Using virtualization, an enterprise can better manage updates and rapid changes to the operating system and applications without disrupting the user. Ultimately, virtualization dramatically improves the efficiency and availability of resources and applications in an organization. Instead of relying on the old model of “one server, one application” that leads to underutilized resources, virtual resources are dynamically applied to meet business needs without any excess fat. (ConsonusTech).

Simulating software and hardware environments has changed test execution and the entire landscape of software testing.

3. Ubiquitous Computing

I prefer the term coined at MIT—Things That Think.

Ubiquitous computing (ubicomp) is a post-desktop model of human-computer interaction in which information processing has been thoroughly integrated into everyday objects and activities. In the course of ordinary activities, someone “using” ubiquitous computing engages many computational devices and systems simultaneously, and may not necessarily even be aware that they are doing so. This model is considered an advancement from the older desktop paradigm. The more formal definition of ubiquitous computing is – “machines that fit the human environment instead of forcing humans to enter theirs.”

There is an explosion of things that think, powered by complex algorithms that can process huge amounts of data in near real-time and provide instantaneous results. Smart devices with embedded software systems range from traffic lights and watering systems to medical devices, cars and dishwashers, equipped with scanners and sensors, vision, communication and object-based media (self-aware content meeting context-aware consumer electronics). Increasing sensor density is enabling massive data gathering from everyday objects. At the Consumer Electronics Show (CES), it was projected that 350 million IP devices would ship in 2013. Computing is ubiquitous: everything not only thinks, but collects data, communicates and makes decisions for you. And all of these thinking devices are ready to be tested!

4. Big Data

By now we have all heard the phrase big data. What does it mean? And, more important to us – how will it affect my work?

Big data is a collection of data sets so large and complex that they become difficult to process using on-hand database management tools or traditional data processing applications. The challenges include capture, curation, storage, search, sharing, analysis and visualization. The trend to larger data sets is due to the additional information derivable from analysis of a large, single set of related data, as compared to separate smaller sets with the same total amount of data, allowing correlations to be found to “spot business trends, determine quality of research, prevent diseases, link legal citations, combat crime and determine real-time roadway traffic conditions.”

Some of the often mentioned applications of big data are meteorology, genomics, connectomics, complex physics simulations, biological and environmental research, internet search, finance and business informatics.

Examples from the private sector:

- Wal-Mart handles more than 1 million customer transactions every hour, which is imported into databases estimated to contain more than 2.5 petabytes of data—the equivalent of 167 times the information contained in all the books in the US Library of Congress.

- Facebook handles 50 billion photos from its user base.

- FICO Falcon Credit Card Fraud Detection System protects 2.1 billion active accounts world-wide.

Imagine if you could overlay your blood pressure history with your calendar and discover your most stressful meetings.

In the future, there will be more pressure sensors, infrared and NFC (Near Field Communication, used for mobile payments or mPay). Other sensors will be added, creating new services; automakers, for instance, are adding new features that take advantage of sensor data to help you park. Progressive Insurance has a Pay As You Drive feature that offers lower rates if you share GPS data.

5. Mobility

I know. Everything is mobile. You may think it is last year’s news, but it is generally agreed we are just at the beginning of the mobility tidal wave.

Apple launched the iPhone in 2007 and now 65% of mobile phone use time is in non-communications activities as the eco-system is becoming less focused on voice communication. People are using mobile phones more and more as core pieces of hardware. Shawn G. DuBravac, chief economist and research director for the Consumer Electronics Association states, “The smartphone has become the viewfinder of your digital life.” The unexpected consequences are that devices and tablets are becoming hub devices: TV remotes, power notebook computers, blood pressure monitors, etc. Smartphones are now in 52% of U.S. households; tablets in 40% of U.S. households. The traditional PC is dead. Long live the tablet. We are testing in the post-PC era.

If we pause here and look at testing implications, ABI Research indicates revenues from mobile application testing tools exceeded $200 million in 2012. About three-fourths of the revenue was from sales of tools that enhance manual testing, but in the coming years, the market will be driven predominantly by test automation. Growth in the automation segment will push revenues close to $800 million by the end of 2017.

(ABI Research, “$200 Million Mobile Application Testing Market Boosted by Growing Demand for Automation,” London, United Kingdom, 22 Oct 2012).

6. Changing Flow of Input

Dozens of companies are working on gesture and voice based controls for devices – gesture, not just touch. Haptic computing with touch sensitivity has already been developed for applications from robotics to virtual reality.

Language understanding, speech recognition and computer vision will have application in an ever-increasing number of products. We already have voice activated home health care and security systems with remote healthcare monitoring.

Most of us think voice integration for cars is just for calls. Google’s driverless car logged 483,000 km (300,000 miles) last year without an accident, and it’s legal in a few US states. It will be important for virtual chauffeurs to reliably understand verbal directions in many languages and dialects. Would you feel safe being driven in a car 100% operated by software that was tested by someone like you, with your skill set?

The tools and software to test these types of applications will be very different than what we know today. They are likely to be graphical applications much like today’s video games to be able to adequately test the input devices.

Testing in the New Landscape

Big changes are happening in the software and hardware product development world. To examine how these things are changing testers, testing and test strategy, we’ll use the SP3 framework. This is a convenient framework to examine who tests, the test process and what methods and tools are used.

Components of a Test Strategy – SP3

A test strategy has three components that need to work together to produce an effective test effort. We developed a model called SP3, based on a framework developed by Mitchell Levy of the Value Framework Institute. The strategy (S) consists of:

- People (P1) – everyone on your team.

- Process (P2) – the software development and test process.

- Practice (P3) – the methods and tools your team employs to accomplish the testing task.

Note: We need to make some generalizations here about the state of software testing. While some organizations are now automating 100% of their 10,000 or 100,000 test case regression, some organizations have 100% manual regression with pick-and-choose coverage. For more automation trends, check out question “O1” in our 2010-2011 survey results on automation testing.

There are teams already testing Google cars. Some teams do all the performance and security testing they need. There are teams already on the cutting edge, though many are not. The following descriptions may already be true for you; I am writing in global terms.

People – Skill, distributed/offshore tasks and training

Skills

The shift that is happening with people and skills today is a result of ubiquitous computing and Agile development practices. The variety of skills test teams need today has expanded to the point that hiring traditional testers does not cut it in software development any longer. For this reason, many organizations have had to go offshore to find available resources for projects.

Increased skills are needed for all testing facets. New devices, platforms, automation tools, big data methods, and virtualization all require new levels of expertise. Today, performance issues with virtual machines (VMs) running Android emulators are a persistent problem. Automating the test cases to run against the emulators on these VMs will require talented experts to sort out current issues and maintain usable performance.

Many Agile practices espouse cross-functional teams. While this is difficult or impossible for the vast majority of teams, testers need to grow their cross-functional skills to do more than just execute test cases! Testers need to be multi-functional, whether that is helping with UI design, code-review, automation engineering or help/documentation. Many organizations today think of testers as software developers who test.

Fully Distributed

We live with global products and teams that are assembled from a globally distributed workforce. Skill, availability, cost, continuous work/speed—these are all driving organizations to distribute tasks for optimal execution.

We know distribution of testing tasks is successful and, at the same time, needs more management oversight, great communication infrastructure, increased use of ALM tools, and cross-cultural training.

The Agile/Scrum move to co-located teams, balanced against both the cost and availability of skilled staff, is still playing out on most teams. What has been successful, and what I would advise any team looking for staff optimization, is to first distribute test automation tasks. The level of expertise needed to engineer new test automation frameworks can be successfully separated from test design and can jump-start automation.

SMEs (Subject Matter Experts)

Not only do testers need to become more technical, all members of the team need to increase subject matter or domain knowledge. Subject matter experts are still essential but they need to work together with other, more technical testing staff on test case design for automation, data selection and additional tasks such as fleshing out User Story acceptance criteria and bug advocacy. Ultimately, super specialized test teams will evolve, with domain testers at one end and niche technical testers at the other.

Interpersonal Skills

With the blending of work and home life (working hours that overlap with distributed teams, working in bullpens or cross-functional project team areas, people working from home), working at a sustainable pace is a key eXtreme Programming practice.

In the new software development paradigm, there is a much closer relationship with product owners, customers, business analysts and testing. Development organizations always need more teamwork, seamless communication, trust, respect and all the other people skills that are essential in a highly technical, distributed environment. Soft skill development is essential, not optional, to thrive in the rapid, distributed development world. Skills include: leading technology workers, cross-cultural communications and using ALM tools.

Training

Lean development as a software practice is gaining many followers today. One of the 7 Lean Principles is to amplify learning. With the rate of technology, product, and development practice change, it’s a no-brainer that there is a constant need for training. For an in-depth view of training in testing, read our February, 2012 Training issue.

Process

Team Process

Software development processes/SDLCs have changed in the past 10 years like never before in the history of software development. The shift toward more metrics, more dashboards and better measurement prevalent in the late 90s and early 2000s has totally reversed. Now the focus is on leaner processes with the best metric of team success being the delivery of working software. The only metric in Scrum is burndown; velocity is secondary, and bug counts may be moot and turned into user stories. This may be extreme for your organization, but it is definitely the movement.

Software development has been greatly impacted by the use of ALMs (application lifecycle management tools). This is not the place to detail ALMs (I went into great detail on the subject in a past article), except to say that their use today is bigger than ever and growing. What is new is that these tools are more integrated with testing, automation and test management.

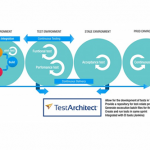

ALMs can greatly facilitate communication with offshore teams. Continuous Integration (CI) is a pervasive XP practice today. The best ALM tool suites have complete CI tooling (source control, unit test execution, smoke test/UI/automated build validation test execution, autobuild, traceability, and reporting) or easy integration for these.

Test Process

Testing lags behind development in most organizations, and test teams are still responding to Agile. Many test teams are struggling in ScrumBut processes. Most test teams are trying to prevent “handoffs” and wasteful rework while staying flexible and responsive. As noted above, test teams are still figuring out test practices on Scrum teams and in XP practices. This has been the subject of many articles at LogiGear Magazine.

The biggest test process impacts, or game changers to testing in Agile/XP, are CI and the need for high volume test automation. These are detailed in the following “Practices: Methods and Tools” section.

Joint Ownership – Ownership of Quality

It has been a long time since people who test gladly got rid of the QA (quality assurance) job title. However, some unaware people continued to believe that those who test guarantee quality rather than measure it, report risk, find bugs, or perform any other achievable task. The Agile movement has finally done away with that illusion, with more teams today espousing joint ownership of quality. There are more upstream quality practices: code review, unit testing, CI, and better user stories and requirements, driven by the need for customer satisfaction and closer communication with test teams. Following the Scrum framework, most teams now have the Product Owner as the ultimate owner of quality.

Speed

The speed of development today is changing process. The pressure to deploy rapidly and simultaneously on multiple devices or platforms (the ease of distribution, market pressure, cost pressure, do-more-with-less pressure) is pushing some teams to abandon or reduce even some universally accepted practices, such as hardening or release sprints, to accommodate the need for speed. Abandoning process for speed is as old as software development itself, but the pressure is greater today. This is risky when testing large and complex systems where test teams need high-volume test automation and excellent risk reporting skills.

SaaS/Cloud

There are evolving process changes in the Cloud, particularly in SaaS. With the immediacy of deployment, rapid change, and ever-smaller or non-existent architecture, some Agile teams cannot keep up. With the speed SaaS products are made available to all customers, patches, hot-fixes or roll-backs are particularly problematic. As always, large-scale test automation is a team’s most important defense.

Practice – Methods and Tools

Methods

This area is leading the most dramatic changes in software testing.

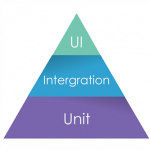

Massive Automation– Smoke Tests and Regression Tests

There are two foundation practices that have had a profound impact on the testing landscape. Continuous Integration, sometimes mistaken to mean only auto build, is the automatic re-running of unit tests and the test team’s smoke or UI automated tests, along with reporting of changed code, fixed bugs and automation results. This process is significant to the new Agile speed of development, requiring rapid release, and is dependent on test teams having significant smoke or build validation tests as well as traceability. Using an ALM such as TFS (Team Foundation Server), for example, that has all the pieces for CI built-in, the reporting can detail the code changes and the bug fixes. Gone are the days of getting bad builds, or good builds with no idea what changed. The test team must have a great automated smoke test to have a true CI practice.
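To make the idea of a smoke test acting as a build gate concrete, here is a minimal Python sketch. It is an illustration only, not how TFS or any particular ALM implements CI: the `BuildReport` class, the `run_smoke_suite` function, the build id and the three checks are all hypothetical stand-ins for a team’s real build validation tests.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class BuildReport:
    build_id: str
    passed: List[str]
    failed: List[str]

    @property
    def accepted(self) -> bool:
        # A build is accepted only if every smoke check passed.
        return not self.failed


def run_smoke_suite(build_id: str, checks: Dict[str, Callable[[], bool]]) -> BuildReport:
    """Run each named smoke check and collect the results for the build report."""
    passed, failed = [], []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            # A crashing check counts as a failure, not as a skipped test.
            ok = False
        (passed if ok else failed).append(name)
    return BuildReport(build_id, passed, failed)


# Hypothetical checks standing in for real UI or API smoke tests.
def app_starts() -> bool:
    return True


def login_works() -> bool:
    return True


def checkout_works() -> bool:
    raise RuntimeError("payment service unreachable")


report = run_smoke_suite(
    "build-1042",
    {"app_starts": app_starts, "login_works": login_works, "checkout_works": checkout_works},
)
print(report.accepted, report.failed)
```

In a real pipeline the report would also carry the code changes and bug fixes associated with the build, which is where the traceability mentioned above comes in.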

Regardless of what other practices you do, or advances your team is making to keep up with the new paradigms in software development, you cannot succeed without massive automation. As we know, old, high-maintenance, random automation methods will not work today. Automated regression tests are not only for speed; they are necessary for confidence, coverage, focus and information, and to free us up to do more exploratory discovery. This last point is often lost in the new Agile development paradigms. With reduced design time and vague user stories, test teams need to discover behaviors (intended or unintended), examine alternative paths and explore new functionality. No one has the time to do this without massive automation.

We also know that scripted, old style automation does not work. I suggest you read Hans Buwalda’s article on Action based Testing for the most advanced methods for test automation.
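As a rough illustration of the keyword-driven idea that approaches such as Action Based Testing build on, the Python sketch below separates test rows (an action name plus arguments) from the code that implements each action. This is an assumption-laden toy, not Hans Buwalda’s method or LogiGear’s tooling: the `FakeApp` class, the action names and the `run_test` helper are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple


class FakeApp:
    """Hypothetical stand-in for the application under test."""

    def __init__(self) -> None:
        self.user = None
        self.cart: List[str] = []

    def login(self, name: str) -> None:
        self.user = name

    def add_to_cart(self, item: str) -> None:
        if self.user is None:
            raise RuntimeError("not logged in")
        self.cart.append(item)


def build_actions(app: FakeApp) -> Dict[str, Callable[..., None]]:
    """Map readable action names to the code that drives the application."""

    def check_cart_size(expected: str) -> None:
        assert len(app.cart) == int(expected), f"cart has {len(app.cart)} items"

    return {
        "login": app.login,
        "add to cart": app.add_to_cart,
        "check cart size": check_cart_size,
    }


def run_test(rows: List[Tuple[str, ...]], actions: Dict[str, Callable[..., None]]) -> List[str]:
    """Execute each row (action, args...) and return any failure messages."""
    failures = []
    for number, (action, *args) in enumerate(rows, start=1):
        try:
            actions[action](*args)
        except (AssertionError, RuntimeError, KeyError) as exc:
            failures.append(f"row {number} ({action}): {exc}")
    return failures


# The test itself is data: it can be read and maintained without touching code.
test_rows = [
    ("login", "alice"),
    ("add to cart", "book"),
    ("add to cart", "pen"),
    ("check cart size", "2"),
]
app = FakeApp()
failures = run_test(test_rows, build_actions(app))
print(failures)
```

The design point is maintenance: when the application changes, only the action implementations need updating, while the test rows stay as they are.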

The Cloud and SaaS

The Cloud

The common use of the cloud in software development today is virtualization of environments to quickly share tools, resources and run simulations of both hardware and software. This becomes more relevant in the context of the current explosion in the mobile applications market for smartphones and tablets. These applications require shorter development cycles and automated virtual regressions making cloud testing cost-effective and efficient.

SaaS

Software-as-a-Service presents unique test method challenges that are different from process changes with SaaS. A positive aspect of SaaS testing is the ability for teams to control the production environment. There is no server side compatibility testing and even client-side access can be restricted and controlled.

The biggest impact of SaaS on test methods is the necessity and growing importance of performance, load, security, and scalability testing, in addition to having our hands full with functionality testing. Where load, performance and scalability testing have often been postponed due to these methods’ reputation of being expensive, slow, nice-to-have, optional, etc., there needs to be crucial discussion before compromising security testing.

The value proposition behind SaaS is: let “us” manage the servers, databases, updates, patches, installs and configuring. Everything your IT team used to do when you bought software, you can now buy as a service. Implicit in this proposal is – “We” will manage and secure your data. The biggest hurdle most companies face in letting someone outside the company manage and maintain corporate intellectual property is data security. Security testing is not optional. In some cases, security testing is performed by specialized teams with their own tools. In most cases I see, the test teams are integral to security testing in:

- Test case design.

- Data design.

- Stress points.

- Knowledge of common and extreme user scenarios.

- Reusing existing automation for security testing.

Virtualization

The primary impact of virtualization is the ability to do things faster.

Virtualization makes managing test environments significantly easier. Setting up the environments requires the same amount of work, but regular re-use becomes significantly easier. Management and control of environments through virtualization is now a basic tester skill for:

- Executing tests on multiple virtual servers, clients, and configurations using multiple defined data sets increases test coverage significantly.

- Reproducing user environments and bugs is easier.

- Easy tracking, snapshots and reproducibility to aid team communication.

- Whatever resources are constrained, such as database, service or tools, your test automation and manual execution can be virtualized for faster, independent execution, shared or not.

- Facilitate faster regression testing economically using many virtual machines.

- Virtualization frees up your desktop.

Performance bottlenecks and problems are common with virtualization test projects. Automation may be more complex to set up. Daily deployment of new builds to multiple (think hundreds of) VMs needs skill to run smoothly. To be effective, your team will probably need a virtualization or tool expert.
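To illustrate how quickly coverage grows when tests run across virtual configurations, the following Python sketch builds a configuration matrix and spreads it over a pool of VMs. Everything in it is assumed for illustration: the operating systems, browsers, data set names, the `is_supported` rule and the round-robin assignment are hypothetical, not a recommendation for any particular virtualization tool.

```python
from itertools import product

# Hypothetical environment dimensions; replace with your own VM images and data sets.
operating_systems = ["Windows 7", "Windows 8", "Ubuntu 12.04"]
browsers = ["IE", "Firefox", "Chrome"]
data_sets = ["small", "large"]


def is_supported(os_name: str, browser: str) -> bool:
    # Assumption for the sketch: IE is only available on Windows images.
    return browser != "IE" or os_name.startswith("Windows")


# Every supported combination of OS, browser and data set is one test configuration.
matrix = [
    {"os": os_name, "browser": browser, "data": data}
    for os_name, browser, data in product(operating_systems, browsers, data_sets)
    if is_supported(os_name, browser)
]


def assign_to_vms(configs, vm_count):
    """Round-robin the configurations across a pool of VMs."""
    pools = [[] for _ in range(vm_count)]
    for index, config in enumerate(configs):
        pools[index % vm_count].append(config)
    return pools


pools = assign_to_vms(matrix, vm_count=4)
print(len(matrix), [len(pool) for pool in pools])
```

Three small lists already produce 16 configurations, which is why regression across many VMs depends on automation rather than manual set-up.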

Devices That Think

Embedded system testing is very different than application testing. With the explosion in things that think and ubiquitous computing/sensors and devices that interact, and often provide some components of big data, new test methods are needed.

As an overview of some of the complications:

How to generate a function call and parameters:

- Need for new tools, APIs, various input systems.

- Need for virtual environments.

- Separation between the application development and execution platforms (the software will not be developed on the systems it will eventually run on. This is very different from traditional application development).

- How to simulate real-time conditions.

Difficulty with test validation points or observed behaviors:

- Parameters returned by functions or received messages.

- Value of global variables.

- Ordering and timing of information.

- Potentially no access to the hardware until final phases of development.

- All these will potentially complicate test automation.

With the added worry that many embedded systems are safety-critical systems, we will need technical training, more tools, new thinking, new methods and more sophisticated test automation for devices that think with no UI.
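One of the validation points listed above, the ordering and timing of information, can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the `Message` type, the message names, the 100 ms limit and the trace itself are hypothetical, and a real harness would capture the trace from a simulator or target hardware.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    name: str
    timestamp_ms: int  # time the message was observed, in milliseconds


def check_trace(trace: List[Message], expected_order: List[str], max_gap_ms: int) -> List[str]:
    """Return a list of problems found in the observed message trace."""
    problems = []
    names = [message.name for message in trace]
    if names != expected_order:
        problems.append(f"order mismatch: expected {expected_order}, got {names}")
    # Compare each message with the one that follows it.
    for earlier, later in zip(trace, trace[1:]):
        gap = later.timestamp_ms - earlier.timestamp_ms
        if gap < 0:
            problems.append(f"{later.name} is timestamped before {earlier.name}")
        elif gap > max_gap_ms:
            problems.append(
                f"{earlier.name} -> {later.name} took {gap} ms (limit {max_gap_ms} ms)"
            )
    return problems


# Hypothetical trace captured from a device simulator or a logging harness.
trace = [
    Message("SENSOR_READ", 0),
    Message("DOSE_COMPUTED", 40),
    Message("PUMP_ACTUATED", 190),
]
problems = check_trace(
    trace, ["SENSOR_READ", "DOSE_COMPUTED", "PUMP_ACTUATED"], max_gap_ms=100
)
print(problems)
```

Here the order is correct but the second step exceeds the timing limit, the kind of defect that never shows up in a UI.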

Big Data

Big Data methods are not just running a few SQL queries. Saying big data is difficult to work with using relational databases, desktop statistics and visualization packages is an understatement.

Instead, “massively parallel software running on tens, hundreds, or even thousands of servers” is required. What is considered “big data” varies depending on the capabilities of the organization managing the dataset, and on the capabilities of the applications that are traditionally used to process and analyze the data set in its domain. For some organizations, facing hundreds of gigabytes of data for the first time may trigger a need to reconsider data management options. For others, it may take tens or hundreds of terabytes before data size becomes a significant consideration (www.en.wikipedia.org/wiki/Big_data).

Correlating global purchases at Wal-Mart with direct advertising, marketing, sales discounts, new product placement, inventory and thousands of other analyses that can be pulled from the 2.5 petabytes of data creates serious testing challenges. Testing the data, the algorithms, the analysis, the presentation and the interface, all at the petabyte level, requires software testing by people like you and me.

This scale of testing will require huge virtualization efforts, new test environment set-up, management skills, data management, data selection and expected result prediction, as well as the obvious data analysis skills. There will be heavy tool use in big data testing. Quick facility in aggressive automation is a prerequisite.
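Data selection and expected result prediction can be illustrated at toy scale. The sketch below assumes an invented purchase schema of (store, amount in cents) pairs and uses a hypothetical `partitioned_totals` function as a stand-in for the parallel system under test. A deliberately simple, independent calculation predicts the result on a small, reproducible sample.

```python
import random
from collections import Counter


def make_purchases(n, seed=42):
    """Generate a small, reproducible synthetic data set (invented schema)."""
    rng = random.Random(seed)
    stores = ["north", "south", "east", "west"]
    return [(rng.choice(stores), rng.randint(1, 500)) for _ in range(n)]


def partitioned_totals(purchases, partitions=4):
    """Stand-in for the system under test: aggregate per partition, then merge."""
    merged = Counter()
    for i in range(partitions):
        partial = Counter()
        for store, cents in purchases[i::partitions]:
            partial[store] += cents
        merged.update(partial)   # Counter.update adds the partial totals
    return dict(merged)


def oracle_totals(purchases):
    """Independent, deliberately simple calculation that predicts the result."""
    totals = {}
    for store, cents in purchases:
        totals[store] = totals.get(store, 0) + cents
    return totals


def check_sample(n=1000):
    data = make_purchases(n)
    actual = partitioned_totals(data)
    # Expected result prediction: compare against the independent oracle.
    assert actual == oracle_totals(data)
    # Cross-check an invariant that must hold at any scale.
    assert sum(actual.values()) == sum(cents for _, cents in data)
    return actual
```

The same pattern, a seeded sample plus an independent oracle plus scale-free invariants, is one way to gain confidence in results that are far too large to verify by hand.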

Mobility

Much has already been written about the increased needs for mobile testing. I will focus on two less discussed ideas.

There is less and less focus on the phone aspect of mobile devices, and more focus on intersecting technologies. These range from common intersections such as Wi-Fi, Bluetooth and GPS to newer, high-growth intersections such as RFID, the currently hot NFC (near-field communication), ANT+ (used mainly for medical devices), QR Code (quick response code) and a growing variety of scanners. As with things that think, testers must learn new technology and new input tools and methods, capture output for validation and observation, and handle added complexity in test automation, all with fast ramp-up.

Security and performance testing are two commonly neglected aspects of mobile testing. Much lip service is paid to the need for these test methods, which require different planning, skills, expensive tools and time to execute. In today’s fast-at-all-cost delivery environments, these test methods very often get postponed until a crisis occurs (check out “Mobile Testing By the Numbers” for information on how much mobile apps are performance tested). Testers need to be excellent at risk assessment and planning for the times when these are added back into the test strategy.

New Input Technologies

In the past, input variation meant testing mainly keyboards for Japanese and Chinese character input. It’s no longer that simple. Even the recent phrase, “Touch, type or tell” must now include gesture. Add in the new and more common virtual thumbwheels and the range of innovation from companies such as Swype and Nuance, and even the most traditional business productivity app must be touch-screen and voice enabled. As with many of the innovations changing the landscape of software testing today, technology-specific training, new skills and new tools are a prerequisite to testing effectively.

Tools

A theme in the new development world is: always leverage technology. Regardless of your product market space, your team’s tool use should have recently increased quite significantly. There has been a new focus on test tools like never before.

While I have stated that testing tools often lag behind development tools, the lag is getting smaller. With business seeing the need for speed, and testing often being the bottleneck due to a lack of good tools, skills, test environments and access to builds and code, there has been huge change in tooling for test teams. Today, significantly better test automation frameworks that support more platforms and devices and integrate with more ALM tools are not only commonplace, but essential. And it’s becoming more common for test teams to take over the continuous integration tool, or to bring in key members to own it.

In addition to ALM and Continuous Integration, as stated earlier, virtualization tools are widely used today and are becoming an IT necessity.

You will probably need tools specific to a technology (example: Google’s Go or F#), platform, or device (example: input devices, simulators and emulators) as well.

Significant, high-volume test automation is essential, not optional. Old-style test automation will not meet project needs today. Fast, efficient, low-maintenance test design and automation is mandatory. Tools that support action-based testing can help you achieve higher productivity and more effective levels of automation. If this includes more programmers focused on designing code for testability and automation stability, so be it. The testing landscape is undergoing revolutionary change. Leverage technology wherever possible!
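To make the idea of action-based testing concrete, here is a minimal sketch, not any particular tool's implementation. It assumes a hypothetical `FakeAccount` application and invented action names. The test itself is just rows of actions and arguments, and a small interpreter maps each action to the code that automates it.

```python
class FakeAccount:
    """Hypothetical application under test (an assumption for illustration)."""

    def __init__(self):
        self.balance = 0

    def deposit(self, amount):
        self.balance += amount

    def withdraw(self, amount):
        if amount > self.balance:
            raise ValueError("insufficient funds")
        self.balance -= amount


def run_test(rows):
    """Interpret each row as (action, *arguments) and return the rows executed."""
    account = FakeAccount()

    def check_balance(expected):
        assert account.balance == expected, (
            f"expected {expected}, got {account.balance}"
        )

    # The only place that knows how an action is automated.
    actions = {
        "deposit": account.deposit,
        "withdraw": account.withdraw,
        "check balance": check_balance,
    }

    executed = 0
    for action, *args in rows:
        actions[action](*args)
        executed += 1
    return executed


# The test design: readable rows that need no programming to write or review.
TEST_ROWS = [
    ("deposit", 100),
    ("withdraw", 30),
    ("check balance", 70),
]
```

Because the rows never mention how an action is carried out, a change in the application means updating one entry in `actions` rather than every test that uses it, which is where the low-maintenance benefit comes from.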

Summary

Be prepared! The landscape of software testing has changed forever.

The world, business, the marketplace, and society have moved toward ubiquitous computing. Things that think are everywhere. And what we test changes how we test. The flood of new, non-traditional products and services has greatly increased the skills needed to test. And, as always, test everything, but do it faster and cheaper.

If you are not mobile, you will be. If your product has no mobility, it will.

If you are not fully integrated with your programmers and everyone on the development team, you will be.

If you do not currently have an expanded role in security, scalability, load and performance testing, you will.

If you are not doing high-volume automation, you will be.

If you are not virtualized, you will be.

If you are not fully distributed, you will be.

If you are not thinking/re-thinking what you distribute and what you keep in the home office, you will be.

References

Wikipedia

Application testing in the Cloud

By Joe Fernandez, Oracle and Frank Gemmer, Siemens

CES 2013: State Of The CE Industry And Trends To Watch

By Eric Savitz, Forbes, January 2013

The Law of Accelerating Returns

By Ray Kurzweil