Looking for a solution to test your voice apps across devices and platforms?

Whether you’re new to or experienced in testing voice apps such as Alexa skills or Google Home actions, this article gives you a holistic view of the challenges of software testing for voice-based apps.

It also explores some of the basic test tools you’ll need during development, and concludes with how to combine different automated testing tools and methods into an effective test strategy.

Always-on, Always-listening: Voice-First Devices

Dwayne ‘the Rock’ Johnson’s Apple advert went viral as he took on the world with Siri as his go-to tool. But have you ever heard people shout half-human and half-computer phrases to their phone while walking down the street?

If you haven’t, you soon will. Welcome to the era of voice-first applications. Amazon and Google have sold millions of Amazon Echo and Google Home devices, respectively, and 24.5M voice-first devices are expected to ship this year. In a report, Gartner predicts that worldwide spending on virtual personal assistant (VPA)-enabled wireless speakers will top $2 billion by 2020.

A voice-first device is a smart device specifically designed to get tasks done conversationally. These devices are always-on and always-listening. Amazon Echo and Google Home devices are typical examples of voice-first devices. You can use them to set up reminders, search the web, or order pizza.

Mobile devices such as iPhones or Android phones can be configured to be always-listening, but they were not designed from the ground up for a voice-first experience. Voice-first devices, by contrast, support far-field voice input processing (FFVIP) and have higher-quality speakers.

Testers beware: Voice Apps Are Coming

Google must already be pondering how to use these devices to put ads in front of its customers. It will be interesting to see how they do this, and whether the result performs better than radio and television ads.

Businesses should be thinking about how they can leverage this new channel to provide better service for their customers.

Developers and designers might think about how they can design and deliver the best voice experience.

Hackers are already able to break into these types of devices. Rooting a Linux-based box like the Echo device is not the hardest hacking job in the world. Security specialists need to think about securing data and network access in the event of a hack attack.

What about testers? We find bugs before our customers do. We automate our testing process so we can release faster and with higher quality. To keep up, we need to adapt our processes to the new technology.

The Basics

These are the concepts, definitions, and underlying architecture you need to know before testing voice apps.

Application Under Test (AUT): Alexa Custom Skill

Echo is the name of the product line that includes Echo, Echo Dot, Tap, Echo Show, etc. To use Echo, just say anything you want, starting with the wake word “Alexa.” This ‘wakes up’ the system. For example, “Alexa, what time is it?”

Alexa is the intelligent assistant powered by cloud computing. Alexa can answer your queries, or facilitate other requests. Some examples include setting an alarm, playing music, or controlling home automation devices. These are just a few instances, and the list grows every day.

All of these capabilities are called Alexa “skills.” Amazon continuously adds more built-in skills, while allowing third parties to develop Alexa custom skills (or Alexa apps) using the Alexa Skills Kit. This lets businesses create (usually commerce-driven) skills that help them monetize the platform. Take Uber, for example: did you know that you can use Alexa to request and schedule a ride by saying “Alexa, ask Uber to request a ride”?

So our AUT here is the Alexa Custom Skill.

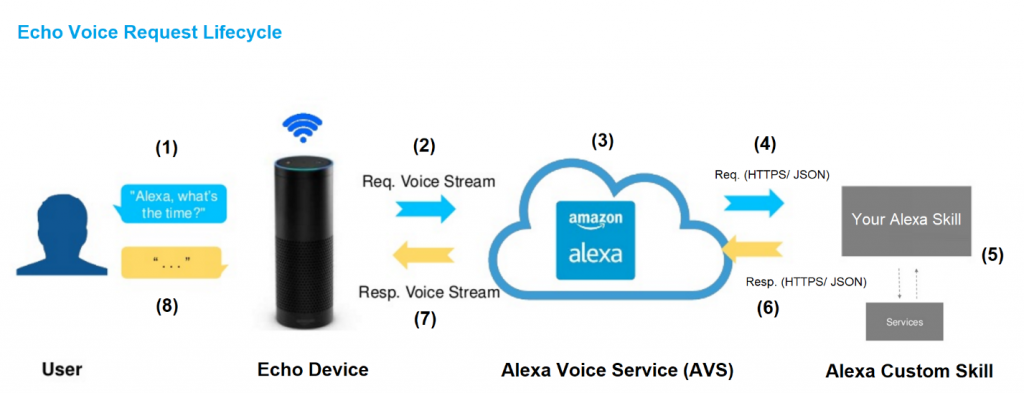

Understanding Alexa’s Voice Request Lifecycle

An effective and thorough test strategy starts with a good understanding of the underlying architecture of the system under test. We need to understand how things flow from the moment the user asks the Echo device to do something until the task is complete.

1. The user gives a command by saying the wake word (or hot word) “Alexa”, followed by the command details.

2. The device sends the voice stream to AVS (Alexa Voice Service).

3. AVS does a couple of things:

3.1 Uses speech-to-text technology to translate the voice stream to text.

3.2 Identifies the skill name and maps the user’s spoken inputs to the skill’s intents and slots, which the skill developer pre-configures in the skill’s Interaction Model. For example:

Source: https://www.slideshare.net/aniruddha.chakrabarti/amazon-alexa-building-custom-skills/18

4. AVS sends a POST request to the skill’s endpoint, with the parsed information, such as the intent and slots, in the request’s JSON body.

5. The custom skill’s code processes the request, which often involves calling other services.

6. The custom skill sends the Response back to AVS. The Response essentially contains the output speech and the directives for the device.

7. AVS sends the voice stream of the output speech to the device.

8. The Echo device plays back the output speech.
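The mapping in step 3.2 happens inside AVS and is a black box. Purely to illustrate how sample utterances with slots relate text to intents, the Python sketch below matches already-transcribed text against a hypothetical interaction model using regular expressions. The intent name, slot names, and utterances are assumptions, and real natural-language understanding is far more flexible than exact pattern matching.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical interaction model: intent -> sample utterances.
# {slot} placeholders loosely mirror how slots appear in sample utterances.
INTERACTION_MODEL = {
    "OrderPizzaIntent": [
        "order {quantity} {size} {pizza_type} pizzas",
        "can i order {quantity} {size} {pizza_type} pizzas",
        "order a {crust} crust {pizza_type} pizza",
    ],
}


def utterance_to_regex(sample: str):
    """Turn 'order {quantity} pizzas' into a regex with one named group per slot."""
    pattern = ""
    for part in re.split(r"(\{\w+\})", sample):
        if part.startswith("{") and part.endswith("}"):
            pattern += rf"(?P<{part[1:-1]}>[\w-]+)"  # a slot value: one word
        else:
            pattern += re.escape(part)                # literal words and spaces
    return re.compile(f"^{pattern}$")


def resolve(text: str) -> Tuple[Optional[str], Dict[str, str]]:
    """Return (intent, slots) for the first sample utterance that matches, else (None, {})."""
    normalized = text.lower().strip().rstrip("?.!")
    for intent, samples in INTERACTION_MODEL.items():
        for sample in samples:
            match = utterance_to_regex(sample).match(normalized)
            if match:
                return intent, match.groupdict()
    return None, {}


print(resolve("Can I order 2 large cheese pizzas"))
# → ('OrderPizzaIntent', {'quantity': '2', 'size': 'large', 'pizza_type': 'cheese'})
```

A toy like this also shows why testing the Interaction Model matters: any phrasing that no sample utterance anticipates simply falls through to `(None, {})`.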

Voice Apps Testing Challenges: A Learning Curve for Everyone

Delivering the best voice app quality is difficult even for the most experienced app-makers. Just because you have a highly-rated mobile app doesn’t mean that it’ll translate well to the voice app world.

At the time of writing, early Alexa adopters such as Starbucks and Domino’s Pizza have their skills rated at 2.5 stars. That is quite a shocking contrast with their iOS apps, which are overwhelmingly loved by their customers, with a 4.5-star rating.

| App | iOS Rating | Alexa Rating |
|---|---|---|
| Starbucks | 4.5 | 2.5 |
| Domino’s Pizza | 4.5 | 2.5 |

Even a platform provider like Amazon is no exception. However hard you work on your Alexa skill, some things are outside your control, namely the Alexa Skills Kit and the Alexa Voice Service themselves. The last thing you want is for your skill to stop working because an update to the Alexa platform shipped with bugs.

VUI vs. GUI: Descriptive vs. Prescriptive

In the voice-first world, the primary interaction between human and machine is done through the Voice User Interface (VUI). In this natural form of interaction, one can tell the machine about their intent and whatever supportive details they have in mind when they start speaking. For instance, to order pizza you might say “Alexa, can you order 2 large cheese pizzas please?” or “Alexa, order a thin crust pepperoni pizza.” In both cases, the voice app is expected to be able to process the request.

On the other hand, the GUI is designed to limit the choices so that the user can easily navigate through the business process. Think about UI controls such as dropdown lists, check boxes, radio buttons, etc. You can’t select a pesto pizza if that option isn’t there, but you can always say it. Likewise, you would never say “Order 2 hot Frappuccinos” while standing in line at your local Starbucks, yet people do say things like this to an Echo device, because Alexa is not fully humanized yet.

That is the fundamental difference between testing the VUI and the GUI. In GUI testing, the possible inputs are limited, whereas VUI input can produce a practically unlimited number of test cases. For business-critical apps, established testing techniques such as Pairwise Testing can help you design far fewer test cases while still covering every pair of input values.
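To see why pairwise testing helps, consider four Pizzamia order parameters with three values each. Exhaustive testing needs 3^4 = 81 combinations, but every pair of values can be covered with far fewer. The Python sketch below is a simple greedy all-pairs generator written for illustration. It is not the algorithm of any particular tool, and the parameter values are assumptions. In each round, it picks the full combination that covers the most value pairs not yet covered.

```python
from itertools import combinations, product
from typing import Dict, List


def all_pairs(params: Dict[str, list]) -> List[dict]:
    """Greedy all-pairs test generation over a small parameter space."""
    names = list(params)
    # Every (param_a, value_a, param_b, value_b) pair that must appear in some test.
    uncovered = {
        (a, va, b, vb)
        for a, b in combinations(names, 2)
        for va, vb in product(params[a], params[b])
    }
    tests = []
    while uncovered:
        best, best_gain = None, -1
        # Scan every full combination and keep the one covering the most new pairs.
        for values in product(*(params[n] for n in names)):
            row = dict(zip(names, values))
            gain = sum((a, row[a], b, row[b]) in uncovered for a, b in combinations(names, 2))
            if gain > best_gain:
                best, best_gain = row, gain
        tests.append(best)
        uncovered -= {(a, best[a], b, best[b]) for a, b in combinations(names, 2)}
    return tests


# Hypothetical order parameters for the Pizzamia skill.
PIZZA_PARAMS = {
    "pizza_type": ["cheese", "supreme", "pepperoni"],
    "size": ["small", "medium", "large"],
    "crust": ["thin", "regular", "deep dish"],
    "quantity": [1, 2, 10],
}

for test_case in all_pairs(PIZZA_PARAMS):
    print(test_case)
```

Scanning the whole product in every round is fine for a handful of parameters. Dedicated tools such as Microsoft’s PICT use smarter strategies for larger models.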

Multi-Platforms

There are at least two prominent leaders in the field, Amazon and Google, followed closely by Microsoft (Cortana) and Apple (Siri). Although voice apps on these platforms share common concepts, the platforms are simply not the same, and there is no standard for where these apps can operate and run. Consider browsers alone: some run only on mobile, some only on desktop, and some on both.

The landscape might not be as diverse as it is for mobile devices, but ideally you would want a single set of tests that can verify your apps across platforms.

Tools for Basic Testing Needs

Service Simulator

This is Amazon’s web-based tool that allows you to test the JSON Response returned by your custom skill. Here is what it looks like:

- Pizzamia is a sample custom skill to order pizza delivery.

- “Can I order 2 large cheese pizzas” is the text of what the user speaks to the Echo device. It stands in for the text that the Alexa Voice Service would recognize in step 3.1 of the voice request lifecycle.

- The Lambda Request text box displays the JSON Request with identified intent and slots. In this case, you can see quantity and size have been recognized properly.

- The Lambda Response text box displays the JSON Response returned by your custom skill.

It’s important to note two things:

- The Lambda Request is generated by AVS in a black box process. At the time of this writing, there is no tool/library to produce the JSON Request from a given utterance so that you can do automated testing to ensure the predefined Interaction Model works as expected.

- The Service Simulator does not support the Dialog Model yet. If your skill uses the Dialog Model, you won’t find this tool useful.
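The Lambda Request and Lambda Response boxes both contain JSON documents. As a rough illustration, the Python sketch below shows a trimmed-down `IntentRequest` similar to what the Service Simulator displays, plus helpers that read the slot values and build a plain-text response. The `OrderPizzaIntent` name, the slot names, and the IDs are hypothetical. Real requests carry more fields, such as `context`, `timestamp`, and `locale`.

```python
import json

# Trimmed-down request envelope, shaped like the JSON in the Lambda Request box.
# The intent name, slot names and IDs are hypothetical placeholders.
SAMPLE_REQUEST = json.loads("""
{
  "version": "1.0",
  "session": {"new": true, "sessionId": "amzn1.echo-api.session.example"},
  "request": {
    "type": "IntentRequest",
    "requestId": "amzn1.echo-api.request.example",
    "intent": {
      "name": "OrderPizzaIntent",
      "slots": {
        "quantity": {"name": "quantity", "value": "2"},
        "size": {"name": "size", "value": "large"}
      }
    }
  }
}
""")


def slot_values(envelope: dict) -> dict:
    """Map each slot name to the value AVS recognized (None if the slot is empty)."""
    slots = envelope["request"]["intent"].get("slots", {})
    return {name: slot.get("value") for name, slot in slots.items()}


def build_response(text: str, end_session: bool = False) -> dict:
    """Build a minimal JSON Response with plain-text output speech."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": end_session,
        },
    }


print(slot_values(SAMPLE_REQUEST))  # {'quantity': '2', 'size': 'large'}
print(json.dumps(build_response("What kind of crust would you like?"), indent=2))
```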

Echosim.io

Echosim.io is an Echo device simulator that runs in any browser that supports WebRTC for microphone input. It’s not a tool from Amazon, but Amazon recommends it in its official documentation. Echosim helps you perform basic user acceptance testing during the development phase.

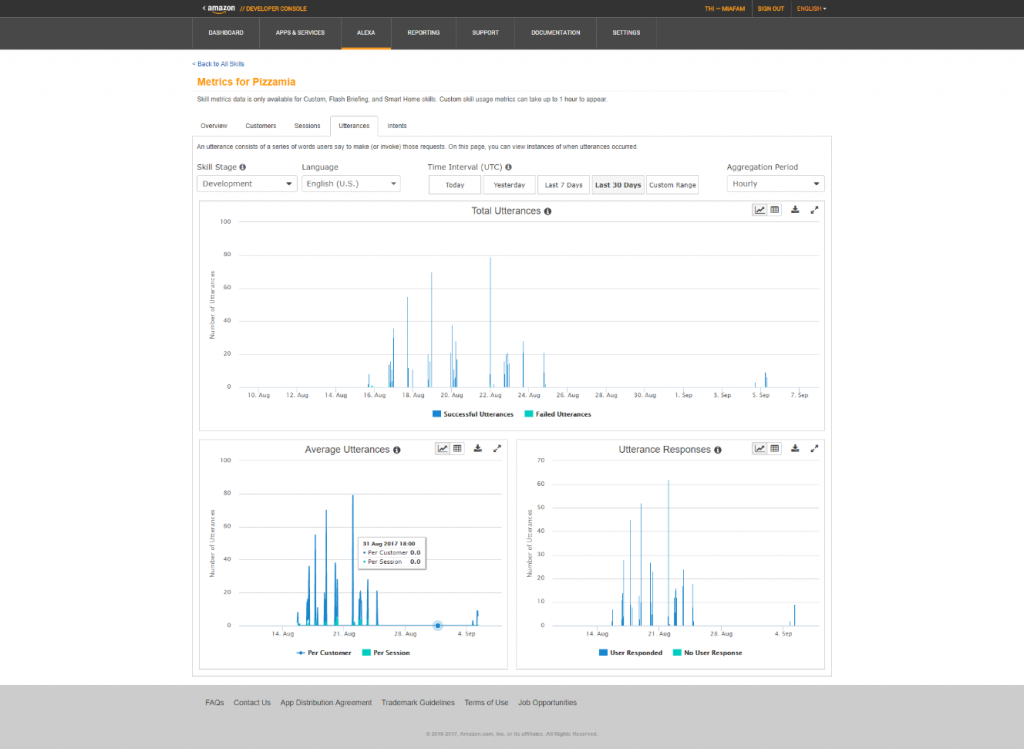

Skill Metrics

Skill Metrics can be found in the Amazon developer console. It’s not a test tool but it helps skill developers and testers understand how users interact with their skills. These insights can help produce a more user-focused test strategy.

These tools can be very helpful during the development phase, because they let you test specific parts of the lifecycle. However, with these tools you have to perform tests manually. In order to scale, we need to use automated testing whenever we can.

API Testing for Alexa Skill

At steps 4 and 5 of the voice request lifecycle, API testing can be performed to ensure that your skill processes the JSON Request from AVS properly and returns the expected JSON Response:

- Ensure that code that interacts with third-party services works as expected

- Ensure that Alexa-specific code works well, including how the skill manages the conversation and the directives it returns to the device

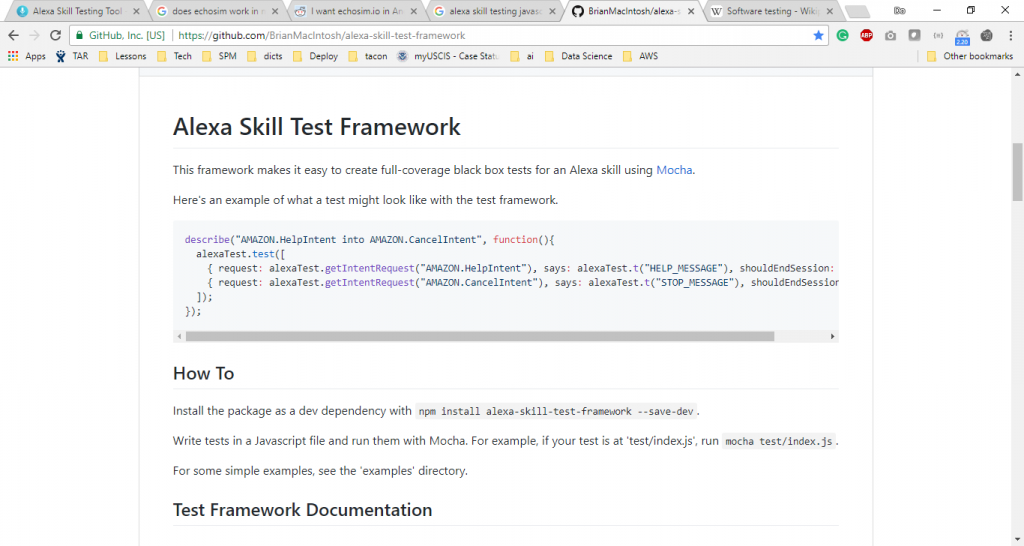
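For steps 4 and 5, an API test feeds a JSON Request to your skill’s handler and makes assertions about the JSON Response. The sketch below uses Python’s built-in `unittest` against a hypothetical in-process Pizzamia handler. The intent name, slot names, and prices are assumptions for illustration. A real test would target your actual handler or its HTTPS endpoint.

```python
import unittest
from decimal import Decimal

# Hypothetical price list for the sample Pizzamia skill.
PRICES = {"small": Decimal("8.00"), "medium": Decimal("10.00"), "large": Decimal("12.99")}


def speech(text: str, end_session: bool) -> dict:
    """Wrap output speech in a minimal JSON Response."""
    return {"version": "1.0",
            "response": {"outputSpeech": {"type": "PlainText", "text": text},
                         "shouldEndSession": end_session}}


def handle(envelope: dict) -> dict:
    """Hypothetical skill handler: the code that runs in step 5."""
    request = envelope["request"]
    if request["type"] == "LaunchRequest":
        return speech("Welcome to Pizzamia. What kind of pizza would you like to order?", False)
    if request["type"] == "IntentRequest" and request["intent"]["name"] == "OrderPizzaIntent":
        slots = {n: s.get("value") for n, s in request["intent"].get("slots", {}).items()}
        total = PRICES[slots["size"]] * int(slots["quantity"])
        return speech(f"Your order total is {total} dollars.", True)
    return speech("Sorry, I didn't get that.", False)


def intent_request(name: str, **slots) -> dict:
    """Build a minimal JSON Request like the one AVS sends in step 4."""
    return {"version": "1.0",
            "request": {"type": "IntentRequest",
                        "intent": {"name": name,
                                   "slots": {k: {"name": k, "value": str(v)}
                                             for k, v in slots.items()}}}}


class PizzamiaApiTest(unittest.TestCase):
    def test_launch_prompts_for_pizza_type(self):
        response = handle({"version": "1.0", "request": {"type": "LaunchRequest"}})["response"]
        self.assertIn("What kind of pizza", response["outputSpeech"]["text"])
        self.assertFalse(response["shouldEndSession"])

    def test_order_total_is_spoken(self):
        response = handle(intent_request("OrderPizzaIntent", quantity=2, size="large"))["response"]
        self.assertEqual(response["outputSpeech"]["text"], "Your order total is 25.98 dollars.")
        self.assertTrue(response["shouldEndSession"])
```

Save the file as a module and run it with `python -m unittest`. Because the handler is called in-process, these tests run in milliseconds and fit naturally into a CI pipeline.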

For NodeJS-based Alexa skills, the alexa-test-framework can help you quickly build your Alexa-specific API tests.

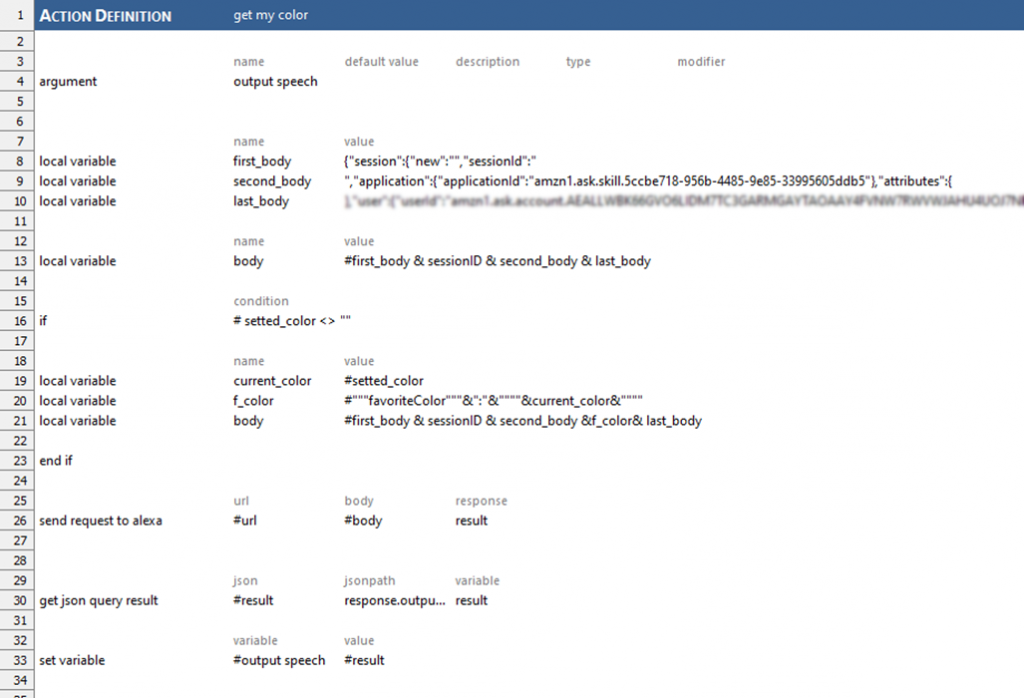

For less technical testers, there are codeless automation tools such as TestArchitect. Below is an example of how you can use API Actions in TestArchitect to get the color from the JSON returned by a “get color” skill.

Automated Voice Testing (AVT)

Approach

This approach uses a Speech Recognition Engine (SRE) to control the Echo device automatically and conversationally, as a human user would.

To grasp the idea, take a quick look at this video showing automated voice testing in action. The device in use is the Echo Show. The female voice is Alexa’s, and the male voice comes from the Microsoft .NET speech engine controlled by TestArchitect.

Using the Microsoft SRE, we can build a simple voice automation framework, as shown below:

Key Benefits

This approach is device-agnostic and platform-agnostic because we automate tests at the voice level. Thus, the automated tests can be executed against any kind of voice app running on any device.

Some use cases where AVT can be applied:

- Alexa app testing (Amazon Echo device)

- Google Home app testing (Google Home device)

- Voice-enabled websites and web apps

- Virtual assistant testing: Siri, Cortana, OK Google on Android, etc.

- Communication: call center, voicemail, etc.

- Cross-device and cross-platform testing with a single set of tests

Implementation Challenges

Continuous Speech Recognition

Although speech recognition technologies are reaching human parity, smooth continuous speech dictation is still a technical challenge. To achieve it, you need to spend enough time understanding the underlying concepts to train the engine, detect the end of a sentence, and tweak settings and thresholds to get the best recognition results.
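Detecting the end of a sentence is one of the thresholds mentioned above. The Python sketch below is a simplified energy-based endpointer, offered as an illustration only and not as how Microsoft’s or Google’s engines work internally. It declares the utterance over after a run of quiet audio frames that follows some speech. The `threshold` and `hangover` values are made-up defaults you would need to tune. Real engines expose comparable settings, for example the `EndSilenceTimeout` property of .NET’s `SpeechRecognitionEngine`.

```python
import math
from typing import List, Optional, Sequence


def rms(frame: Sequence[float]) -> float:
    """Root-mean-square energy of one frame of audio samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def find_end_of_utterance(frames: List[Sequence[float]], threshold: float = 500.0,
                          hangover: int = 3) -> Optional[int]:
    """Return the index of the first frame of the trailing silence, or None.

    Leading silence is ignored. The utterance ends once `hangover`
    consecutive frames fall below `threshold` after speech was heard.
    """
    heard_speech = False
    silent_run = 0
    for i, frame in enumerate(frames):
        if rms(frame) >= threshold:
            heard_speech = True
            silent_run = 0  # a short pause inside the sentence resets the count
        elif heard_speech:
            silent_run += 1
            if silent_run >= hangover:
                return i - hangover + 1
    return None
```

The two knobs pull in opposite directions. A larger `hangover` tolerates pauses between words but makes the tester slower to reply. A lower `threshold` catches quiet speech but also background noise.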

To get a feel for how well speech recognition engines perform, try the online demos from Google and Microsoft. At the time of writing, Microsoft outperforms Google.

Auto-Pilot Conversation

While using the Echo device, I found that it does not always say the same thing for the same request. One reason is that Alexa cannot always fully and consistently understand what you say, because speech recognition is still unstable. Another reason is that Alexa is programmed to say different variations of the same thing so that it sounds more human.

This challenge requires the automation framework to be smart enough to handle multi-turn conversations. It needs to understand what Alexa says at every turn to determine the next step, keeping the conversation going as far as possible until it ends gracefully. This auto-pilot approach makes test execution more robust and meaningful.
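One way to implement this auto-pilot behavior is to fuzzy-match whatever the speech engine heard against the expected Alexa prompts, so that small variations in wording still select the right reply. The Python sketch below uses the standard library’s `difflib.get_close_matches`. `listen` and `speak` are hypothetical callables that stand in for the speech recognition and speech output sides of the framework. The 0.6 similarity cutoff is an arbitrary starting point.

```python
import difflib
import re
from typing import Callable, Dict, List, Optional, Tuple


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so 'Pizzamia.' and 'pizzamia' compare equal."""
    return re.sub(r"[^a-z0-9 ]", "", text.lower()).strip()


def best_prompt(heard: str, prompts: List[str], cutoff: float = 0.6) -> Optional[str]:
    """Return the expected prompt most similar to what was heard, or None."""
    by_norm = {normalize(p): p for p in prompts}
    matches = difflib.get_close_matches(normalize(heard), list(by_norm), n=1, cutoff=cutoff)
    return by_norm[matches[0]] if matches else None


def run_conversation(listen: Callable[[], Optional[str]],
                     speak: Callable[[str], None],
                     table: Dict[str, str],
                     max_turns: int = 10) -> List[Tuple[str, Optional[str]]]:
    """Drive a multi-turn conversation from an 'alexa' -> 'customer' table."""
    transcript = []
    for _ in range(max_turns):
        heard = listen()
        if heard is None:  # device went quiet: treat as end of conversation
            break
        prompt = best_prompt(heard, list(table))
        reply = table.get(prompt) if prompt else None
        transcript.append((heard, reply))
        if reply is None:  # unexpected prompt: stop and let the test report it
            break
        speak(reply)
    return transcript
```

For dry runs, `listen` can return lines from a scripted fake device instead of wrapping a real speech engine. This lets you exercise the conversation logic without an Echo in the room.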

Automated Voice Testing – A Case Study

Application under Test – Pizzamia Skill

Pizzamia is a sample Alexa custom skill for ordering pizza. A customer can say “Alexa, open Pizzamia” to activate the skill. Once activated, Pizzamia walks the customer through prompts to select the pizza type, size, crust type, and number of pizzas. Once all the order details are gathered, Pizzamia tells the customer the order total.

Test Automation Tool

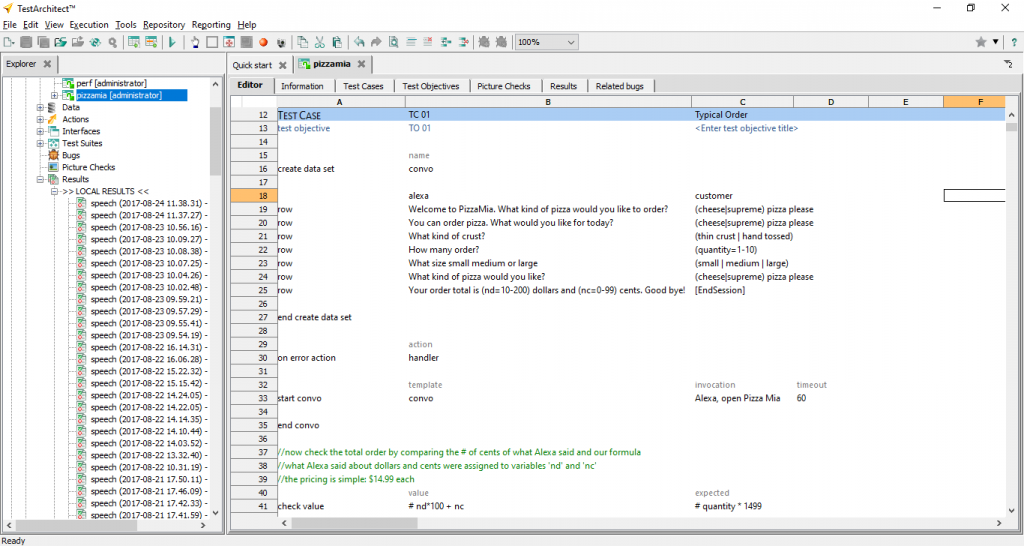

The tool used in this demonstration is TestArchitect, a codeless automation tool that can be easily extended using C#, Java, or Python. All the test scripts in this case study are written in the Action-Based Testing language. Essentially, every test line is an action (keyword) backed by code.

Test Module

The test module below covers a typical order as a functional test. Let’s take a quick look at the test lines:

- Lines 16 – 27: an “interaction data table” used to model the conversation.

  - The ‘alexa’ column is what Alexa is expected to say.

  - The ‘customer’ column stores what TestArchitect is going to say when it hears the corresponding dialogue in the ‘alexa’ column. More on this in the next section.

- Line 33: the action ‘start convo’ does a couple of things:

  - It trains the Speech Recognition Engine using the interaction data table.

  - It talks to Alexa in an auto-pilot fashion.

- Line 35: collects the conversation details and logs them to the test report once the conversation has ended.

- Line 41: verifies that Alexa says the right order total by comparing it with the computed price.

Test Conversations Modeling

The “interaction data table” (lines 16 – 27) defines the possible responses from Alexa (column ‘alexa’) and how the customer, in this case the test automation tool TestArchitect, should react (column ‘customer’).

Here are the interesting aspects of what we did:

- Data is randomized

  - Line 19: ‘(cheese | supreme)’ results in either ‘cheese’ or ‘supreme’. This means that when Alexa says ‘Welcome to PizzaMia. What kind of pizza would you like to order?’, TestArchitect might say ‘cheese pizza please’ or ‘supreme pizza please’.

  - Line 22: the quantity is a random number between 1 and 10.

- Randomized values are assigned to variables

  - Line 22: ‘quantity’ is the variable that holds the randomized number of pizzas ordered.

  - Capturing this value is important because it lets us verify the order total.

- Extracted speech data are assigned to variables

  - Line 25: ‘nd’ and ‘nc’ are variables that hold the dollar and cent values spoken by Alexa, as recognized by the speech engine.

All of this is designed to make test execution more robust, yet generic enough that we can run the same test across multiple platforms and devices.
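The randomization and variable assignment described above can be sketched in a few lines of Python. The `(cheese | supreme)` alternation follows the example from line 19. The `[quantity:1-10]` notation for a random number stored in a variable is our own hypothetical syntax, since TestArchitect’s actual syntax for line 22 isn’t shown here. Passing in a seeded random generator makes a failing run reproducible.

```python
import random
import re
from typing import Dict

CHOICE = re.compile(r"\(([^()]+)\)")               # e.g. (cheese | supreme)
RANDOM_VAR = re.compile(r"\[(\w+):(\d+)-(\d+)\]")  # e.g. [quantity:1-10], hypothetical syntax


def render(template: str, rng: random.Random, variables: Dict[str, int]) -> str:
    """Expand one 'customer' cell into a concrete line for the tester to say."""

    def pick_choice(match) -> str:
        options = [option.strip() for option in match.group(1).split("|")]
        return rng.choice(options)

    def pick_number(match) -> str:
        name, low, high = match.group(1), int(match.group(2)), int(match.group(3))
        value = rng.randint(low, high)  # inclusive on both ends
        variables[name] = value         # remembered so the total can be verified later
        return str(value)

    text = CHOICE.sub(pick_choice, template)
    return RANDOM_VAR.sub(pick_number, text)


rng = random.Random(42)
captured: Dict[str, int] = {}
print(render("(cheese | supreme) pizza please", rng, captured))
print(render("[quantity:1-10] pizzas", rng, captured), captured)
```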

Test Report

Details of the conversation:

Check whether the session is complete, then print the captured variables: quantity, nd, and nc.

Now verify the order total:
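The verification on line 41 can be sketched as follows, assuming the speech engine returns Alexa’s total as digits, for example “25 dollars and 98 cents”. The Python sketch below extracts `nd` and `nc` from the recognized text and compares them with the expected total. It uses `Decimal` to avoid floating-point rounding. The phrase pattern and the unit price are hypothetical.

```python
import re
from decimal import Decimal
from typing import Tuple

# Matches "25 dollars" or "25 dollars and 98 cents" (hypothetical phrasing).
TOTAL = re.compile(r"(\d+)\s+dollars?(?:\s+and\s+(\d+)\s+cents?)?", re.IGNORECASE)


def capture_total(heard: str) -> Tuple[int, int]:
    """Extract the dollar (nd) and cent (nc) values from Alexa's recognized speech."""
    match = TOTAL.search(heard)
    if match is None:
        raise ValueError(f"no order total found in {heard!r}")
    nd = int(match.group(1))
    nc = int(match.group(2) or 0)
    return nd, nc


def verify_total(quantity: int, unit_price: Decimal, nd: int, nc: int) -> bool:
    """Compare the spoken total with quantity * unit price, exactly."""
    spoken = Decimal(nd) + Decimal(nc) / 100
    return spoken == quantity * unit_price


UNIT_PRICE = Decimal("12.99")  # hypothetical price of one pizza
nd, nc = capture_total("Your order total is 25 dollars and 98 cents.")
print(nd, nc, verify_total(2, UNIT_PRICE, nd, nc))  # prints: 25 98 True
```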

Conclusion

Voice-first devices have gone mainstream. Voice apps are proving that human-machine interaction can be frictionless. This is a new way for businesses and brands to engage better with their customers by delivering a fast, easy, and familiar voice experience.

To ensure a high-quality voice app, we need a comprehensive test strategy that covers all layers of the voice request lifecycle, including the VUI, the interaction between the voice app and the voice platform, and the back-end services. The solutions above enable you to perform thorough automated voice testing across platforms and devices.

How Are You Developing And Testing Your Voice Apps?

Poking at an Echo is fun, but what about developing and testing voice apps? Talk to us and share your Echo/Google Home/Siri/Cortana/etc. experience in the comment section.

Disclaimer: Amazon, Alexa and all related logos and motion marks are trademarks of Amazon.com, Inc. or its affiliates.
