Team collaboration is essential for testing embedded systems.

Developing software for an embedded system often carries more risk than developing for general-purpose computers, so testing is critical. However, there still has to be a good balance between time spent on testing and time spent on development to keep the project on track. As consultants for embedded open source technologies, we at Mind encounter many different approaches to testing with our customers. This article structures these varied experiences and combines best practices and techniques, with a focus on embedded open source software.

The Efficient Software Developer Uses Testing

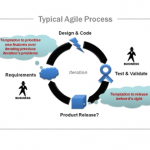

We develop software because we want to make a working product. Therefore, validation is an essential part of the software development process. Validation should be done from a user’s perspective, which means it happens late and on the complete product. That makes the loop back to development very expensive: it has been a long time since the code was written, so the developer may have to refresh their memory, or the original developer may have moved on. It is also difficult to pinpoint the cause of a problem because everything is already glued together, and there isn’t much time because the release is due soon. To tighten that loop, the software should be tested as early as possible, during development and integration.

Loops back to development exist not only because of validation, but also because the software evolves over time: features are added, requirements shift, supporting libraries are upgraded, etc. All of this results in modifications to the existing code. Unfortunately, every modification may mean that something that used to work now breaks. This is why agile methods stress testing so much: in agile methods, modifying existing code is much more important than writing brand new code. Pre-existing, automated tests lower the threshold to modify code. They have to be automated to some extent, otherwise the threshold to actually run the tests becomes too high.

An agile, team-based approach to testing improves efficiency. By working as a team, developers and testers can shorten the loop by testing early. Here are some guidelines to follow.

- Make sure there is a test infrastructure from the very start of the project. It doesn’t have to be much, but if nothing is there, it becomes increasingly difficult to create the infrastructure as the project grows.

- Make sure that every team member can run the tests. The easiest way to achieve this is to automate the tests.

- Make sure the tests run fast. That of course means that they cannot be very complete. Complete testing is the responsibility of integration and of validation. The software developers, on the other hand, should run the tests after each change, and certainly before publishing changes to the rest of the team. If it takes a long time to run the tests, developers will postpone running them, which makes the development loop longer. It also delays the publishing of changes, which makes the integration loop longer.

- Tailor the tests to your implementation. While developing, you know pretty well where the risk of getting something wrong lies. For example, when doing string manipulation in C, the main risk is mishandling the terminating 0 byte. Make a test that checks this specifically.

- Distinguish between specific tests and smoke tests. We only need to test the things we are currently modifying. A modification can break things in two ways: it can break the existing features of the functionality we’re modifying, or it can break something unrelated (or expose an existing bug). For the first, we just need to test the functionality that we’re modifying. This typically corresponds to a unit test, but it can be more on the integration level (when modifying the interface between modules, which happens quite often). Breakage of unrelated things is very often catastrophic (e.g. stack overflow, double free), so it is often sufficient to check that the system as a whole still works. For embedded systems, it’s usually sufficient to boot a system with all features enabled and check that it still does something.
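As an illustration of a test tailored to the implementation, the sketch below checks the terminating 0 byte of a bounded string copy. The helper `copy_name` and the test function `test_copy_name_terminates` are hypothetical names chosen for this example; they do not come from any particular project.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: copy src into dst (capacity dst_size bytes),
 * truncating if needed and always terminating the result.
 * Returns the number of characters copied, excluding the 0 byte. */
size_t copy_name(char *dst, size_t dst_size, const char *src)
{
    assert(dst != NULL && src != NULL && dst_size > 0);
    size_t n = strlen(src);
    if (n >= dst_size)
        n = dst_size - 1;       /* leave room for the terminating 0 byte */
    memcpy(dst, src, n);
    dst[n] = '\0';
    return n;
}

/* Targeted test: pre-fill the buffer with a sentinel so that a missing
 * terminator or a write past the end is detected. Returns 1 on success. */
int test_copy_name_terminates(void)
{
    char buf[8 + 1];            /* 8 usable bytes plus one guard byte */
    memset(buf, 'X', sizeof buf);
    size_t n = copy_name(buf, 8, "much too long");
    return n == 7
        && buf[7] == '\0'       /* terminator is in the last usable byte */
        && buf[8] == 'X'        /* guard byte was not overwritten */
        && strcmp(buf, "much to") == 0;
}
```

A test like this runs in microseconds, so it fits the requirement that developer tests be fast enough to run after each change.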

Embedded Testing: Test Hardware, Simulation, Timing and Updates

Testing for embedded systems is different from testing for general-purpose computers. First of all, there is an important hardware dependency, for instance analog audio input, a radio tuner, or a camera. However, the hardware may not be available for an extended time (e.g. there are only 5 boards for 9 software developers). The target is often very resource-constrained and doesn’t have the CPU power, memory or flash space to accommodate test infrastructure. Its I/O capabilities are usually rather limited as well, e.g. it lacks a writable file system for input data or for saving traces. These physical limitations can be overcome by stubbing and simulation. Second, an embedded system interacts non-trivially with its environment. For instance, a video screen should show the video in real time and degrade gracefully when too many streams are shown simultaneously. These things make up the essential difference between the embedded system and a desktop media player, and are the reason you can’t simply use existing software as is. So these things should also be tested. Finally, updating the software once the embedded system has been sent into the field is completely different from updating general-purpose computers. Therefore, special attention has to be paid to the update procedure, and it should be tested to ensure it is repeatable by the end user.

Testing the Hardware Setup

Since the embedded system software depends on the hardware, it is important to have a good setup of test hardware. This is typically a concern for the validation team. However, efficiency can be boosted if the validation team makes test hardware available to the developers as well. A good test hardware setup allows remote control of the I/Os and remote updates of the firmware, so that it can for instance be placed in an oven for testing. An NFS root is a good solution to allow remote updates. Not just the I/O should be controlled remotely, but also power cycling. This makes it possible to test the behavior when faced with sudden power loss.

As an example, consider testing a wireless metering device. The test setup could consist of two of these devices: one with the actual firmware under test, the other is a controller that provides radio input and monitors radio output. Both of them are network-connected to be accessible for testing. Another example is an audio processing board, where the (analog) audio inputs and outputs are connected to a PC that generates sine waves and samples the output.

Simulation

To be able to perform testing close to the developer, we can perform simulation. The most obvious form of simulation is using a virtual machine, for instance KVM/QEMU or VirtualBox. This allows you to simulate the entire system, including the kernel. This has several disadvantages. First, you will probably need to add new peripheral simulators for your particular device. Creating such a peripheral simulator correctly can be very tricky. Second, the simulators are not entirely reliable (especially when it comes to peripherals). Thus, you may end up debugging problems which don’t actually occur on the system, but only in the simulator. Finally, simulation carries a speed penalty. For virtual machines (KVM, VirtualBox), the speed penalty is limited to the times when virtualization kicks in, e.g. when serving interrupts or accessing peripherals. For emulation (QEMU), the penalty kicks in for every instruction. However, since the development server often runs an order of magnitude faster than the target platform, emulation may still turn out to be faster than running it on the actual system.

An alternative approach is to run your application code natively on the development host. In this case, you don’t try to simulate the entire system, but only the (user-space) application code. To make this possible, you need to add a Hardware Abstraction Layer (HAL) to your application, which has a different implementation on the development host and on the target platform. If you heavily use standard libraries, these often already form a HAL. For instance, Qt and GLib have different implementations depending on the platform they are compiled for. The HAL is in addition a good way to make sure the application is easy to port to new hardware. If the application consists of several interacting processes, it is usually advisable to test each one in isolation. Using e.g. D-Bus for the IPC simplifies this, since you can replace the bus with a program that gives predefined reactions.
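To make the HAL idea concrete, here is a minimal sketch in C. Everything in it is an assumption made for illustration: the temperature sensor, the `struct hal` interface, and the names `stub_read_temperature` and `overheated` are hypothetical. The point is only that the application code calls through an interface, and the development host supplies a stub that returns predefined values.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical HAL interface: the application only sees this struct.
 * The target build would fill it with functions that access hardware. */
struct hal {
    int (*read_temperature)(void *ctx);   /* tenths of a degree Celsius */
    void *ctx;
};

/* Host-side stub: replays predefined values instead of touching hardware. */
struct stub_ctx {
    const int *values;
    size_t count;
    size_t next;
};

int stub_read_temperature(void *ctx)
{
    struct stub_ctx *s = ctx;
    assert(s != NULL && s->count > 0);
    int v = s->values[s->next];
    if (s->next + 1 < s->count)
        s->next++;              /* keep repeating the last value at the end */
    return v;
}

/* Application code under test: unaware of which implementation it uses. */
int overheated(const struct hal *hal, int limit)
{
    assert(hal != NULL && hal->read_temperature != NULL);
    return hal->read_temperature(hal->ctx) > limit;
}
```

On the host, a test would build a `struct hal` around `stub_read_temperature` and a fixed array of readings, then call `overheated` and compare the results with the expected ones. The same function-pointer table could also be replaced by link-time selection of one of two source files; which of the two is preferable depends on the project.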

Running the application on the development host has several advantages. First of all, you have a much larger set of debugging tools available, including debugger, IDE, valgrind, trace tools, and unlimited tracing. Second, it is often much faster than either simulation or running it on the target platform.

Whatever the simulation approach, it also has to be made reproducible. That typically means that inputs are taken from a file instead of the normal channels (network, A/D, sensors, FPGA, …). Also outputs go to a file instead of to the normal channels, to allow off-line analysis. Creating reproducible inputs is even useful on the target platform itself, where you can debug the full system including timing.
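A minimal sketch of this file-based approach, assuming a text format with one integer sample per line. The format, the function name `run_from_files`, and the trivial `process_sample` step are all hypothetical placeholders for the real processing chain.

```c
#include <assert.h>
#include <stdio.h>

/* Hypothetical processing step standing in for the real algorithm:
 * here simply a gain of 2. */
static int process_sample(int sample)
{
    return 2 * sample;
}

/* Read one integer sample per line from `in` and write one result per
 * line to `out`, so that a run can be repeated and analyzed off-line.
 * Returns the number of samples processed. */
long run_from_files(FILE *in, FILE *out)
{
    assert(in != NULL && out != NULL);
    long count = 0;
    int sample;
    while (fscanf(in, "%d", &sample) == 1) {
        fprintf(out, "%d\n", process_sample(sample));
        count++;
    }
    return count;
}
```

Because the output is an ordinary file, a regression test can be as simple as comparing it with a stored reference output from a known-good run.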

Timing

Embedded systems show a lot of time-dependent behavior. Part of this is hidden in the HAL (e.g. timeouts of devices), but often the application itself also has time as one of its inputs. For example, a video display unit has to synchronise several streams for simultaneous display, or a DSP algorithm has to degrade gracefully when the processor is overloaded. Race conditions in a multithreaded program depend on timing as well. This time-dependent behavior is hard to make reproducible, especially when using simulation.

On the target platform, the time-dependent behavior can be approximated fairly well. The only requirement is that the simulation of inputs (see above) also includes information about the time at which this input is available. The thread that parses the input adds delays to match the timestamps in the input file. If the input timestamp has already passed, this is equivalent to a buffer overflow in e.g. DMA and is probably an error. Clearly, the HAL should be carefully thought out to make this scheme possible, e.g. sizing buffers so they match the size of DMA buffers.

One possibility for making timing reproducible in simulation is to simulate time as well. The simulator keeps track of the simulated time of each thread. Every thread (including the input thread) adds delays to the simulated time; the delays should correspond (more or less) to the amount of processing time it would take on the target platform. Whenever a thread communicates with another thread or with the HAL, a synchronization point is added: the thread blocks until the simulated time of all other threads has reached its own simulated time. This concept was invented by Johan Cockx at Imec.
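The bookkeeping behind such a scheme might look like the sketch below. It is only an illustration of the rule stated above, not a reconstruction of the original implementation: the names `sim_clock`, `sim_advance` and `sim_may_proceed` are hypothetical, and the actual blocking (for example on a condition variable) is omitted.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SIM_MAX_THREADS 8

/* Simulated clock of every thread, in microseconds of target time. */
struct sim_clock {
    uint64_t time_us[SIM_MAX_THREADS];
    size_t nthreads;
};

/* Account for processing that would take cost_us on the target. */
void sim_advance(struct sim_clock *c, size_t self, uint64_t cost_us)
{
    assert(c != NULL && c->nthreads <= SIM_MAX_THREADS && self < c->nthreads);
    c->time_us[self] += cost_us;
}

/* Synchronization point: thread `self` may continue only when every
 * other thread has reached its simulated time. Returns 1 if it may
 * proceed, 0 if it has to block. */
int sim_may_proceed(const struct sim_clock *c, size_t self)
{
    assert(c != NULL && c->nthreads <= SIM_MAX_THREADS && self < c->nthreads);
    for (size_t i = 0; i < c->nthreads; i++)
        if (i != self && c->time_us[i] < c->time_us[self])
            return 0;
    return 1;
}
```

With two threads, a thread that has advanced to 100 µs blocks at its next synchronization point until the other thread, still at 0 µs, has caught up. The order of events then depends only on the simulated costs, not on how fast the host happens to run.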

Updates

Unlike PCs, embedded systems are very easy to “brick”. If something goes wrong while updating the firmware, it is very difficult to recover because it’s not possible to boot from a USB stick or CD-ROM. Often, the device isn’t even easily reachable. For example, the controller of a radar buoy in the middle of the ocean just has a network connection; if something goes wrong with an upgrade, somebody has to travel in a boat for two days to recover it, assuming they can find it in the first place.

Therefore, for embedded systems it is essential that the update system never fails. It is mainly the responsibility of the validation team to test that it works, but the developer has much better insight into where it can go wrong. This is where a team approach to testing has significant benefits. Together, the team can take the following failure modes into account in the update mechanism:

- Power failure in the middle of the update, which corrupts the root file system or kernel. To protect against this, the updated software should be installed in parallel with the existing software. Links should be updated only after successful installation, and this should be done atomically (i.e. using rename(2), not editing a file in-place). Package managers usually take care of this pretty well. Of course, a journalled file system is needed as well to avoid corruption of the file system itself.

- Integrity of the software, which may be jeopardized by e.g. data loss over a serial connection or premature termination of a network connection. Package managers protect against this with a hash and signature.

- Installation of incompatible pieces of firmware. Again, package managers help to protect against this.

- Installation of firmware that is not compatible with the hardware. This is most pressing for the kernel and boot loader, but also other pieces of software may have a strong dependency on the hardware. A package manager can help by creating a platform name package and depending on it.
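The atomic replacement mentioned in the first point can be sketched with plain POSIX calls. The function name `atomic_write` and the `.new` suffix for the temporary file are assumptions for this example; error handling is reduced to the minimum (for instance, an interrupted `write` is simply treated as a failure).

```c
#include <assert.h>
#include <fcntl.h>
#include <stdio.h>
#include <unistd.h>

/* Replace the file at `path` with `len` bytes from `data` so that a
 * reader sees either the old or the new content, never a partial file.
 * Returns 0 on success, -1 on failure (the old file is left untouched). */
int atomic_write(const char *path, const void *data, size_t len)
{
    assert(path != NULL && data != NULL);
    char tmp[4096];
    int m = snprintf(tmp, sizeof tmp, "%s.new", path);
    if (m < 0 || (size_t)m >= sizeof tmp)
        return -1;

    /* Install the new content next to the existing file. */
    int fd = open(tmp, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0)
        return -1;

    const char *p = data;
    while (len > 0) {
        ssize_t n = write(fd, p, len);
        if (n < 0) { close(fd); unlink(tmp); return -1; }
        p += n;
        len -= (size_t)n;
    }

    /* Flush to storage before the rename makes the new file visible. */
    if (fsync(fd) != 0) { close(fd); unlink(tmp); return -1; }
    if (close(fd) != 0) { unlink(tmp); return -1; }

    /* rename(2) switches from old to new in a single atomic step. */
    if (rename(tmp, path) != 0) { unlink(tmp); return -1; }
    return 0;
}
```

If power fails before the `rename`, the old file is still intact and only a stale `.new` file remains. A production implementation would typically also call `fsync` on the containing directory so that the rename itself is durable.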

Clearly, package managers help a lot to secure the update system. However, they can’t be used on read-only file systems (e.g. squashfs). Other solutions need to be found in that case.
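Where no package manager is available, at least the integrity check can be done by hand. The sketch below uses a CRC-32 checksum, which only detects accidental corruption such as data loss during transfer. It is a simplified stand-in for the cryptographic hash and signature a package manager would use, and it offers no protection against deliberate tampering. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Bitwise CRC-32 with the reflected polynomial 0xEDB88320 (the variant
 * used by zlib and Ethernet). Slow but small; no lookup table needed. */
uint32_t crc32_compute(const void *data, size_t len)
{
    assert(data != NULL || len == 0);
    const uint8_t *p = data;
    uint32_t crc = 0xFFFFFFFFu;
    for (size_t i = 0; i < len; i++) {
        crc ^= p[i];
        for (int bit = 0; bit < 8; bit++)
            crc = (crc & 1u) ? (crc >> 1) ^ 0xEDB88320u : crc >> 1;
    }
    return crc ^ 0xFFFFFFFFu;
}

/* Accept a firmware image only if its checksum matches the expected
 * value shipped alongside it. Returns 1 if the image is intact. */
int image_is_intact(const void *image, size_t len, uint32_t expected)
{
    return crc32_compute(image, len) == expected;
}
```

The check should run on the complete downloaded image before anything is installed, so that a truncated or corrupted transfer never reaches the point where the running system is modified.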

Conclusion

It cannot be stressed enough that testing should start early. Developers can do a lot of testing on their own, but in agile environments, team collaboration can make testing just one of the project tasks. Embedded software has specific constraints, like hardware availability, which make it even more important to think about testing early on as a team.