In recent years, much attention has been paid to setting up Test Automation frameworks that are effective, easy to maintain, and allow the whole testing team to contribute to the testing effort. In doing so, we often overlook one of the most critical questions in Test Automation: what do we do when the Test Automation doesn’t work correctly?

Testing teams need a practical way to determine who is accountable for analyzing Test Automation failures, and to ensure that the right processes and skills exist to do that analysis effectively.

There are three primary reasons why your Test Automation may not work correctly:

- There is an error in the automated test itself

- The application under test (AUT) has changed

- The Automation has uncovered a bug in the AUT

Whenever an automated test fails, the first step is to figure out what happened. So who should be doing this?

Too often in testing organizations, as soon as a Test Engineer runs into a problem with the Test Automation, they simply tell the Automation Engineer, “Hey, the Test Automation isn’t working!” The job of analysis then falls to the Automation Engineer, who is already overburdened with implementing new Test Automation and maintaining the existing suite.

How can we push this analysis ‘upstream’ to the Test Engineers who execute the Test Automation? To do this, we must first look at why Test Engineers don’t feel that they can or should analyze these issues.

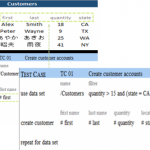

In a typical ‘scripting approach’ to Test Automation, the Test Engineers first write a verbose test case, usually in Word, Excel, or an in-house or third-party test case management tool. Once that task is complete, the Test Engineers effectively “throw it over the wall” to the Automation Engineer. The Automation Engineer then creates a scripted version of the test case and “throws it back over the wall” to the Test Engineers, who then execute the automated test.

More often than not, the Test Engineer does not understand the scripted test very well, so when something breaks, they rely on the Automation Engineer to figure out what went wrong. This undermines the four fundamental tasks that every experienced Test Engineer must be able to perform:

- Design/write tests.

- Execute tests and identify/seek out failure.

- Analyze a failure for reproducibility and ideas to incorporate into new tests.

- Report a failure and/or bug.

At a minimum, the Test Engineer should be able to analyze the results of the automated tests and determine whether a failure is due to an actual bug in the AUT. If there is no apparent bug, the Test Engineer should be able to determine whether a change occurred in the application. Finally, if there is neither an apparent bug nor a change in the AUT, they can confidently conclude that the issue was caused by an error in the Automation.

So how can you empower Test Engineers to analyze Test Automation failures? It’s simple, really: if your Test Engineers can create automated tests themselves, they will be equipped to analyze those tests when they don’t work. In our experience, a Keyword-Driven Test Automation framework is the best way to enable your Test Engineers to own the analysis of Test Automation failures effectively.
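To make the keyword-driven idea more concrete, here is a minimal sketch, not the framework we use. The keyword names (`open_page`, `enter_text`, `verify_text`), the in-memory `FakeApp` standing in for the AUT, and the `run_test` engine are all hypothetical; a real framework would bind keywords to a UI or API driver. The point it illustrates is that the Test Engineer writes only a table of steps, and a failure is reported against a specific step and keyword that they wrote and understand.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical in-memory stand-in for the application under test (AUT).
@dataclass
class FakeApp:
    page: str = ""
    fields: dict = field(default_factory=dict)

# Keyword implementations: written once by the Automation Engineer.
def open_page(app: FakeApp, name: str) -> None:
    app.page = name

def enter_text(app: FakeApp, field_name: str, value: str) -> None:
    app.fields[field_name] = value

def verify_text(app: FakeApp, field_name: str, expected: str) -> None:
    actual = app.fields.get(field_name)
    if actual != expected:
        raise AssertionError(f"{field_name}: expected {expected!r}, got {actual!r}")

KEYWORDS: dict[str, Callable[..., None]] = {
    "open_page": open_page,
    "enter_text": enter_text,
    "verify_text": verify_text,
}

def run_test(steps, app):
    """Run keyword rows in order; return (passed, failing step number, message).

    Only failed checks (AssertionError) and unknown keywords are reported as
    step failures; any other exception propagates, since it points at the
    automation code rather than at the AUT.
    """
    for number, (keyword, *args) in enumerate(steps, start=1):
        action = KEYWORDS.get(keyword)
        if action is None:
            return False, number, f"unknown keyword {keyword!r}"
        try:
            action(app, *args)
        except AssertionError as exc:
            return False, number, f"{keyword}: {exc}"
    return True, None, "all steps passed"

# A test case as a Test Engineer would write it: plain rows of keywords.
login_test = [
    ("open_page", "login"),
    ("enter_text", "username", "alice"),
    ("verify_text", "username", "alice"),
]
print(run_test(login_test, FakeApp()))  # (True, None, 'all steps passed')
```

Because each row maps to one keyword, a failure message such as "step 3, `verify_text`" tells the Test Engineer exactly which of their own steps to investigate, without reading any script code.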

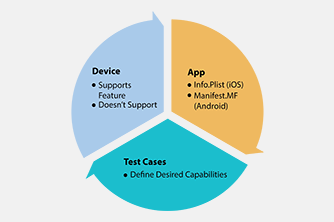

With a properly implemented Keyword-Driven Test Automation framework, the analysis of a Test Automation failure consists of the following steps:

- Did the Test Automation uncover a bug in the AUT? (Done by a Test Engineer)

- Was the failure caused by a change in the AUT? (Done by a Test Engineer and/or Automation Engineer)

- Was the failure caused by an error in the Automation itself? (Done by an Automation Engineer)
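The triage order above can be written as a small decision function. This is a hedged sketch: the `Evidence` fields are hypothetical yes/no findings a team might record while investigating one failure (using manual reproduction as the signal for a real bug is an assumption on our part), and real triage involves judgment rather than two booleans. What it does show is the ordering: the cheaper, Test Engineer-owned questions are asked first, and the Automation Engineer is involved only when they come back negative.

```python
from dataclasses import dataclass
from enum import Enum

class Cause(Enum):
    AUT_BUG = "bug in the application under test"
    AUT_CHANGE = "the application under test changed"
    AUTOMATION_ERROR = "error in the automated test itself"

class Owner(Enum):
    TEST_ENGINEER = "Test Engineer"
    TEST_OR_AUTOMATION_ENGINEER = "Test Engineer and/or Automation Engineer"
    AUTOMATION_ENGINEER = "Automation Engineer"

@dataclass
class Evidence:
    # Hypothetical findings recorded while investigating one failed test.
    reproduced_manually: bool  # assumption: a bug reproduces when the steps are done by hand
    aut_changed: bool          # did the UI, workflow or data the test relies on change?

def triage(evidence: Evidence) -> tuple[Cause, Owner]:
    """Ask the three questions in order; the first 'yes' decides the cause."""
    if evidence.reproduced_manually:
        return Cause.AUT_BUG, Owner.TEST_ENGINEER
    if evidence.aut_changed:
        return Cause.AUT_CHANGE, Owner.TEST_OR_AUTOMATION_ENGINEER
    # Neither a bug nor a change was found, so suspect the automation itself.
    return Cause.AUTOMATION_ERROR, Owner.AUTOMATION_ENGINEER

print(triage(Evidence(reproduced_manually=False, aut_changed=True)))
```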

With Keyword-Driven Test Automation, scripting is kept to a minimum, so most of your failures will be caused by bugs or changes in the AUT rather than by errors in the automation itself. Test Engineers should therefore be able to handle most of the failure analysis, freeing your Automation Engineers to focus on creating new automated tests. That, in turn, lets you further increase test coverage, reduce testing time, decrease maintenance, and, most importantly, build higher-quality products!