There are few topics in quality assurance testing that cause as much confusion as smoke testing versus sanity testing. The two names would seem to describe very different practices, and they do! But people still get them confused, since the distinction is somewhat subtle.

Whether you are developing a mobile app, a web service, or an Internet of Things product, you will probably undertake both smoke and sanity testing along the way, likely in that order. Smoke testing is a more generalized, high-level approach to testing, while sanity testing is more particular and focused on logical details.

Let’s take a look at each one in more depth:

Smoke testing

The first thing you may be wondering about is: Why the name “smoke testing”? The name is certainly unusual, but it makes sense. In fact, the term originates with hardware testing. Test engineers who turn on a PC, server or storage appliance check for literal smoke coming from the components once the power is running. If no smoke is detected, the unit passes the test; if smoke does appear, all other project-related work must be put on hold until the unit passes.

As we can see, the idea is to verify that the most basic functionality is operating properly before additional testing is undertaken. In the case of hardware, the ability to power on without catching fire, as well as to successfully start up and interact with required libraries and software services, is what the smoke test evaluates.

Smoke testing usually takes place at the beginning of the software testing lifecycle. It verifies the quality of a build (i.e., the collection of files that make up a program) and checks to see if basic tasks can be properly executed. The idea is to ensure that the initial build is stable; if the build cannot pass a smoke test, the program must be rebuilt before the testing phase can resume. Some organizations refer to smoke testing as build verification testing.

“In smoke testing, the test cases chosen cover the most important functionality or component of the system,” explained a guide from Guru99. “The objective is not to perform exhaustive testing, but to verify that the critical functionalities of the system [are] working fine. For example, a typical smoke test would be to verify that the application launches successfully, check that the GUI is responsive, etc.”

Smoke tests reveal plainly recognizable deficiencies that could severely throw a release off schedule. By running a group of test cases that cover the most essential components, testers can determine whether critical functionalities behave as needed. At times, smoke tests may uncover the need for more granular testing, such as a sanity test.

An additional function of smoke tests is to assess whether a new build is testable at all, covering such questions as “How well does the program run?” or “How well does the application interface with the system?” The test reveals whether the functionality is so obstructed that the build is not ready for testing that delves more deeply into the software functions.

Performing smoke tests

A smoke test can be performed manually or it can be automated. QA teams can therefore create manual test cases, or write scripts that automatically check whether the software can be installed and launched without incident. An enterprise test management suite can help organize and run your smoke tests.
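As a rough illustration of the scripted approach, here is a minimal Python sketch of an automated smoke check. It assumes the application can be started from the command line and signals success with an exit code of 0. The function names and the entries in `SMOKE_CHECKS` are hypothetical stand-ins (they simply launch the Python interpreter), not part of any particular test suite.

```python
import subprocess
import sys


def smoke_check(command, timeout_seconds=10):
    """Return True if the command starts and exits cleanly within the timeout."""
    try:
        result = subprocess.run(
            command, capture_output=True, timeout=timeout_seconds
        )
    except (OSError, subprocess.TimeoutExpired):
        # The program could not be launched at all, or it hung.
        return False
    return result.returncode == 0


def run_smoke_suite(checks):
    """Run every named check and return the names of the ones that failed."""
    return [name for name, command in checks.items() if not smoke_check(command)]


# Hypothetical checks: replace these stand-in commands with the commands
# that install or launch the application under test.
SMOKE_CHECKS = {
    "program launches": [sys.executable, "--version"],
    "trivial task completes": [sys.executable, "-c", "print('ok')"],
}

failed = run_smoke_suite(SMOKE_CHECKS)
if failed:
    print("Smoke test FAILED:", ", ".join(failed))
else:
    print("Smoke test passed; the build is ready for deeper testing.")
```

The checks are deliberately wide and shallow: each one only confirms that something basic works, and any failure is a signal to stop and fix the build before further testing.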

A smoke test is most effective when a preliminary code review that focuses on code changes has been performed. The review helps assure code quality and guards against coding defects. After the code review, the smoke test checks the code changes, assesses how they affect software functionality, and generally verifies that dependencies are not adversely affected.

Performing sanity testing

Sanity testing, generally performed subsequent to smoke tests, is sometimes called a sanity check. Like a literal sanity check, it is meant to be less than exhaustive. Instead, sanity tests verify that recent upgrades are not causing any major problems. The “sanity” in the name refers to an assurance that the application has been rationally and sanely developed or updated.

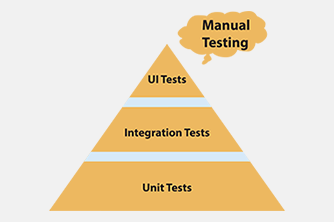

The basics of sanity testing differ from smoke testing, as well as from acceptance testing, of which sanity testing is categorized as a subset. Acceptance testing is a much more thorough testing process. Smoke testing is more generic.

Sanity testing is usually done near the end of a test cycle, to ascertain whether bugs have been fixed and whether minor changes to the code are well tolerated. The test is typically executed after receiving a new build, to determine whether the most recent fixes break any component functionality. Sanity tests are often unscripted and may take a “narrow and deep” approach as opposed to the “wide and shallow” route of smoke testing.

While a smoke test can determine whether an application is constructed well, a sanity test helps determine that an app can fundamentally function well. One example of a sanity test is one used to determine whether a calculator app can give a correct result for 2 + 2. If the component function cannot return a result of 4, the process has failed and there is no point yet in doing further tests on the programmed ability to handle more advanced activities, such as trigonometric functions. Sanity tests can be performed manually, or with the help of automated tools.
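The calculator example can be sketched in a few lines of Python. The `add` and `sine` functions below are hypothetical stand-ins for the calculator's own components, and the trigonometric check is just one example of the more advanced testing that is skipped when the sanity check fails.

```python
import math


def add(a, b):
    """Hypothetical stand-in for the calculator's addition component."""
    return a + b


def sine(x):
    """Hypothetical stand-in for the calculator's trigonometric component."""
    return math.sin(x)


def sanity_check_addition():
    """Narrow check: does basic addition give an obviously correct answer?"""
    return add(2, 2) == 4


def run_calculator_tests():
    """Run the sanity check first and only then the more advanced tests."""
    if not sanity_check_addition():
        # No point testing trigonometry if 2 + 2 is wrong.
        return "sanity check failed: advanced tests skipped"
    assert math.isclose(sine(math.pi / 2), 1.0)
    return "sanity check passed: advanced tests ran"


print(run_calculator_tests())
```

The design choice worth noting is the early return: the sanity check acts as a gate, so a failure in basic arithmetic stops the run before time is spent on deeper tests.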

The sanity test evaluates rational processes within the application. The goal is to ensure that obviously false results are not present in component processes, which makes for a speedier process than granular, in-depth testing. Often run before a more intense set of tests, a sanity test is a concise examination of a program that broadly assures that components produce expected results, without in-depth analysis.

As we can see, there is some overlap between smoke testing and sanity testing, especially when it comes to the fact that neither is really designed to be a thorough process. However, there are also obvious and important differences.

QA teams and developers use smoke tests, and QA teams use sanity tests, to determine in a timely manner whether an application is sound and solid. The best time to perform smoke tests is during a daily build. Testing at the component level, rather than the level of ‘done’, catches deficiencies that could otherwise remain undetected, embedded in the build.

| Smoke Testing | Sanity Testing |
| --- | --- |
| Smoke Testing is performed to ascertain that the critical functionalities of the program are working fine | Sanity Testing is done to check that new functionality / bugs have been fixed |
| The objective of smoke testing is to verify the “stability” of the system in order to proceed with more rigorous testing | The objective of sanity testing is to verify the “rationality” of the system in order to proceed with more rigorous testing |
| Smoke testing is performed by developers as well as testers | Sanity testing is usually performed by testers alone |
| Smoke testing is usually documented or scripted | Sanity testing is usually undocumented and unscripted |
| Smoke testing is a subset of regression testing | Sanity testing is a subset of Acceptance testing |
| Smoke testing exercises the entire system from end to end | Sanity testing exercises a particular component of the entire system |
| Smoke testing is a general health check | Sanity Testing is a specialized health check |

Automated test management can significantly augment both smoke and sanity tests. Automated tests are most often triggered by the build process. Smoke tests come first when testing a software build, followed by sanity testing. The thoroughness of both smoke and sanity tests depends on the accuracy of the coverage provided by the test cases, or test suites, designed for each.
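One way to picture this ordering is a small gating pipeline that a build process might trigger. This is only a sketch under simple assumptions: each check is a function that returns True or False, and the stage names and lambda checks are hypothetical placeholders for real test suites.

```python
def run_pipeline(stages):
    """Run stages in order and stop at the first stage that fails.

    `stages` is a list of (name, checks) pairs, where `checks` is a list
    of zero-argument callables that return True on success.
    Returns a list of (name, passed) pairs for the stages that ran.
    """
    results = []
    for name, checks in stages:
        passed = all(check() for check in checks)
        results.append((name, passed))
        if not passed:
            break  # Later stages are pointless until this one passes.
    return results


# Hypothetical stages: the lambdas stand in for real test suites.
stages = [
    ("smoke", [lambda: True]),
    ("sanity", [lambda: 2 + 2 == 5]),  # Deliberately failing check.
    ("regression", [lambda: True]),
]

print(run_pipeline(stages))  # [('smoke', True), ('sanity', False)]
```

Because the sanity stage fails in this example, the regression stage never runs, which mirrors the idea that each quick check decides whether deeper testing is worth starting.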

Developers and testers rely on smoke and sanity testing to move through application development and deployment with as few delays and technical errors as possible. Smoke testing especially identifies issues of integration. Using smoke tests, fundamental problems are discovered early, enhancing confidence that upgrades to the application have not obstructed essential functions.

Sanity tests provide a summary check of a software product to ensure that the application logically produces the expected results. At the point at which a sanity test is performed, the software product has already passed other fundamental and related tests. With a quick evaluation of the logical quality of software functions, sanity tests help determine whether the software is eligible to move forward.

Overall, we can look at smoke testing and sanity testing as being similar processes at the opposite ends of a test cycle. Smoke testing ensures that the fundamentals of the software are sound so that more in-depth testing can be conducted, while sanity testing looks back to see whether the changes or innovations made after additional development and testing generally broke anything.

Smoke tests, Performance tests, and the Enterprise

Of utmost importance to the enterprise is that software performance meets customer requirements. Both smoke and sanity tests cover the software product in a timely manner to mitigate the risk of poor customer engagement. Test cases can be written that apply to varying real-world business challenges, while automated reporting allows QA teams to quickly assess such attributes as accuracy, capacity, and performance.

By comparing the performance of updated software with the application’s previous performance, both smoke and sanity tests broadly cover the product’s anticipated operations. Coverage must include a surface assessment of how efficiently software products interface with systems, servers, and platforms. Comparisons with the most recent release also allow generalized test coverage to quickly spot discrepancies, especially those that involve the build or the logic that supports software operations. Combined, smoke and sanity tests expedite deployment and mitigate risk to the enterprise, contributing to increased ROI and reduced time to market.