Introduction

All too often, senior management judges Software Testing success through the lens of potential cost savings. Test Automation and outsourcing are seen as simple ways to reduce the cost of Software Testing, but the sad truth is that automating or offshoring for its own sake yields poor results. And if the same simplistic approach is taken when planning the Automation and offshoring, the costs may not even be lower.

Testing is often considered a bottleneck in software delivery. It is abstract in nature, and its outcome is not something that can be easily seen or measured. Rather than looking only at the cost of Software Testing, managers should evaluate how the value of testing relates to its cost. Testing, like any development activity, does have a cost, but that cost is massively outweighed by its value.

Organizations cannot wait for customers to provide feedback to determine whether they are meeting quality goals. It is critically important for management to define “success” for their Software Testing efforts at the outset. While cost is one metric, it is not the sole metric for success. Other factors that should also be taken into account include:

- The breadth of test coverage

- Test reusability

- Value of the data reported by Software Testing

- The quality of the software under test

Using these criteria, management should come up with a clear definition of “success” that is consistent with their testing strategy and testing methodology. Of course, if there is no strategy or methodology, then that must be developed first, but that is a topic for another article.

In this article, we will discuss the significance of non-cost success metrics.

Test Coverage

Test coverage is a primary indicator of quality and effectiveness. It indicates how much of your codebase is exercised by tests before the software is released. More formally, it is a measure of the degree to which the source code of a program is executed when a particular test suite runs [Wikipedia].

Exhaustive testing is impossible for large, complex software applications when working in timeboxed sprints. While it is not possible to test absolutely everything, it is certainly desirable to test as much as possible. A reasonable place to start is to thoroughly test the most critical and most used parts of the software. This is where test coverage is beneficial. Coverage reports enable testers to identify critical misses in testing and provide actionable guidance to identify the pieces of the code that are not covered by tests. Test coverage can help in test case prioritization and minimization.

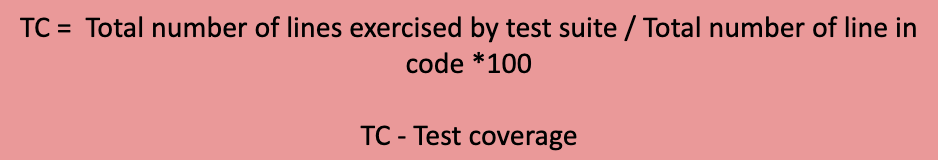

How to Calculate Test Coverage

Below is the formula to calculate test coverage:
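
One common form, assuming coverage is counted in lines of code executed by the test suite (the unit could equally be branches, functions, or requirements), is:

$$
\text{Test coverage} = \frac{\text{lines of code executed by the test suite}}{\text{total lines of code}} \times 100\%
$$

For instance, if a test suite executes 850 of 1,000 lines, test coverage is 85%.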

Code coverage is the most commonly used metric for measuring test coverage in unit testing. The development team should thoughtfully consider the appropriate code coverage target for their products. Making code coverage part of the Continuous Integration (CI) process is becoming a common pattern in DevOps-driven organizations. For example, the CI build can be configured to fail if overall code coverage falls below a certain threshold, say 80%.
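
A coverage gate of this kind can be sketched in a few lines of Python. This is only an illustration: the function names and the line counts are hypothetical, and in a real pipeline the counts would come from your coverage tool's report rather than being passed in by hand.

```python
def coverage_percent(covered_lines: int, total_lines: int) -> float:
    """Return line coverage as a percentage of all measurable lines."""
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    if not 0 <= covered_lines <= total_lines:
        raise ValueError("covered_lines must be between 0 and total_lines")
    return 100.0 * covered_lines / total_lines


def ci_exit_code(covered_lines: int, total_lines: int, threshold: float = 80.0) -> int:
    """Return 0 (build passes) if coverage meets the threshold, otherwise 1 (build fails)."""
    return 0 if coverage_percent(covered_lines, total_lines) >= threshold else 1


# Example with assumed numbers: 850 of 1,000 lines covered passes an 80% gate,
# while 790 of 1,000 does not.
print(ci_exit_code(850, 1000))
print(ci_exit_code(790, 1000))
```

In practice, many coverage tools offer this check out of the box. Coverage.py, for example, provides `coverage report --fail-under=80`, which exits with a non-zero status when total coverage is below the given value.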

There are several open-source and commercial code coverage tools to choose from, depending on your language and tooling. Popular options include Atlassian Clover, Cobertura, JaCoCo, Jtest, Coverage.py, Istanbul, Blanket.js, SimpleCov, and PHPUnit.

Test Automation and/or offshoring may allow a company to test its software much more thoroughly in the same amount of time. In this scenario, testing costs may not actually go down, but fewer defects will make it through to the customer, which yields downstream savings in avoided in-market support and maintenance costs.

Test Reusability

Managers cannot afford to lose speed or compromise on quality in today’s competitive world. Testing teams need to find intelligent techniques to design and execute test cases. Every QA manager would like to spend more time running tests and spend less time creating them. Utilizing modularization and parameterization makes reusable test design possible.

A Software Testing effort in which all tests must be recreated for each revision of the software, or for each release of related software, will be expensive regardless of Automation and offshoring. Any Software Testing effort should consider how much return it is getting on the investment: how many of the tests are reusable assets that help keep ongoing test maintenance costs down? (See: Software Testing: Cost or Product? by Hung Q. Nguyen.)

Whether it’s automated test scripts or test cases that are executed manually, reusability adds tremendous value. As test reusability goes up, test maintenance costs go down. A high degree of test reusability should be an important goal for any Software Testing effort. Reusability increases productivity by avoiding re-work. Not only does it reduce the costs, but it also allows future testing to be performed rapidly. Test reusability codifies organizational knowledge about the software under test.

Here is our advice on how to ensure your tests are reusable:

- Write modular test scripts to avoid redundant tests and maximize test coverage.

- Follow the DRY (Don’t Repeat Yourself) principle. Avoid WET code (Write Everything Twice/Waste Everyone’s Time) when writing automated scripts.

- Ensure that each component of the Automation framework is reusable, and avoid writing similar code across multiple classes, files, or projects.

- Review Automation code with static analysis and code quality tools such as SonarQube to detect duplication.

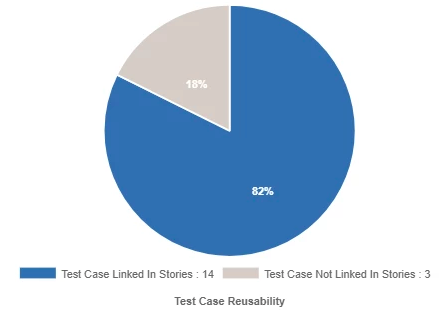

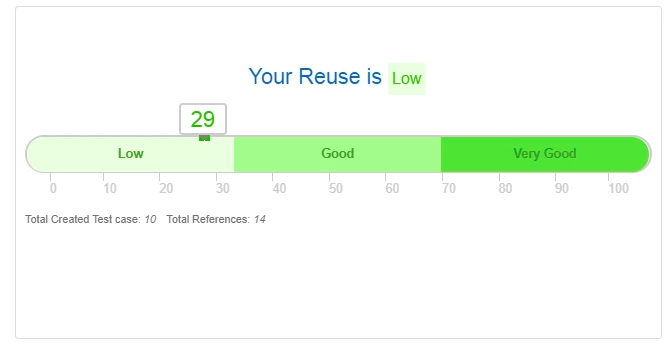

- Modern test case management tools enable users to reuse test cases across multiple projects, test suites, and test runs. They also provide the ability to create new test cases by reusing existing test steps.

Some test case management tools also provide a reusability report, which gives you insight into how often test cases are reused.
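
The modular, parameterized style recommended in this section can be sketched as follows, using Python's standard `unittest` module. The function under test (`apply_discount`) and the test data are hypothetical and exist only to illustrate the idea: the test logic is written once, and new cases are added as rows of data rather than as copied test code.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: price after a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Test data is kept apart from test logic, so adding a case means adding a row.
DISCOUNT_CASES = [
    # (price, percent, expected)
    (100.0, 0, 100.0),
    (100.0, 25, 75.0),
    (80.0, 50, 40.0),
    (19.99, 100, 0.0),
]


class DiscountTests(unittest.TestCase):
    def test_discount_cases(self):
        # One parameterized test replaces four near-identical test methods.
        for price, percent, expected in DISCOUNT_CASES:
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_rejects_invalid_percent(self):
        for bad_percent in (-1, 101):
            with self.subTest(percent=bad_percent):
                with self.assertRaises(ValueError):
                    apply_discount(100.0, bad_percent)


def run_suite() -> unittest.TestResult:
    """Load and run the tests above, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DiscountTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_suite()` executes both tests. The same data table could also feed a manual test case template, which is one way reusability carries over between automated and manual testing.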

Value of Data

Without data, you’re just another person with an opinion.

W. Edwards Deming

A software product’s success is gauged by its quality, reliability, and customer satisfaction. Thus, Software Testing is a critical and integral part of development in the Agile/DevOps era. One of the main objectives of Software Testing is to provide management with the data they need to make critical business decisions.

Software Testing should report clear, concise and valuable metrics that provide actionable insights to the team and the stakeholders. For example, the number of open P0 bugs can be a valuable metric that tells management about the software’s readiness for the market. Such data may help organizations to avoid shipping a product prematurely, which can result in higher downstream support costs.

Metrics such as test cases run, test coverage, open bugs, and bugs fixed give management insight into the quality of the software and its readiness for the market. Only with such data can management make informed ship/no-ship decisions (see: Understanding Metrics in Software Testing). Dashboards in tools such as Jira make it easier to visualize data from various sources and calculate the metrics used for decision making.

Test Automation is a key enabler of agility and is essential for adopting current practices such as DevOps and Continuous Testing. Key Performance Indicators (KPIs) play a crucial role in measuring the effectiveness and accuracy of your team’s Automated Testing efforts. Communicating KPIs also gives your executive team more clarity about what exactly Test Automation can achieve. KPIs such as Percentage of Automatable Test Cases, Automation Progress, Defect Removal Efficiency (DRE), and Test Automation Coverage Levels are useful when they are aligned with your Automation strategy and guide your strategic decision making (see: 5 Incredibly Useful KPIs for Test Automation to learn how to measure these KPIs).
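
As a rough illustration, three of these KPIs can be computed as simple ratios. The formulas below are commonly used definitions and are an assumption here, not taken from the article referenced above; the function names and sample counts are likewise hypothetical.

```python
def percentage(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100.0 * part / whole, 1)


def automatable_percentage(automatable_cases: int, total_cases: int) -> float:
    """Share of all test cases that are candidates for automation (assumed definition)."""
    return percentage(automatable_cases, total_cases)


def automation_progress(automated_cases: int, automatable_cases: int) -> float:
    """Share of automatable test cases that have actually been automated (assumed definition)."""
    return percentage(automated_cases, automatable_cases)


def defect_removal_efficiency(found_before_release: int, found_after_release: int) -> float:
    """Share of all known defects that were caught before release (assumed definition)."""
    return percentage(found_before_release, found_before_release + found_after_release)


# Sample counts, invented for illustration.
print(automatable_percentage(300, 400))   # 75.0
print(automation_progress(150, 300))      # 50.0
print(defect_removal_efficiency(95, 5))   # 95.0
```

Whatever definitions a team settles on, they should be written down and kept stable, so that a change in a KPI reflects a change in the testing effort rather than a change in the arithmetic.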

Software Quality

Good Software Testing will not by itself result in quality software. There are four pillars upon which software quality rests, of which Software Testing is just one.

Assuming that the processes are in place to yield a quality product, Definition, Design, Coding, and Software Testing form the four pillars of quality, all of which play integral, yet separate, parts in overall quality.

How to Measure Software Quality

You need to consider several quality aspects when measuring quality.

1. Reliability – A simple yet effective way to measure the reliability of your product is by counting the number of bugs reported in production.

2. Performance – Application Performance Monitoring (APM) tools enable you to analyze application performance at a glance. You can easily monitor page load times, error rates, slow transactions, and so on.

3. Security – The number of vulnerabilities detected can be a good measure of security. Web vulnerability scanners such as Burp Scanner check your application for common classes of vulnerability, including those listed in the OWASP Top 10.

4. Maintainability – The number of lines of code (LOC) is a simple metric for maintainability. In general, the more lines there are, the higher the complexity and the more prone the code is to bugs. Teams can use static analysis tools to measure LOC, code quality, and complexity.

5. Quality

Quality is the backbone of Customer Experience (CX). One of the major objectives of Software Testing is to ensure end-user satisfaction. Great quality can get people buzzing about your product and spread recommendations through word-of-mouth, which can be the deciding factor for customers when they are considering buying your products or services. A great Customer Experience increases revenue, customer loyalty, and retention; builds brand trust; creates new customers; and reduces support costs. All of these benefits can be missed when the focus is simply on the cost of Software Testing.

Conclusion

Many of these success criteria may not actually reduce the cost of Software Testing, but will instead provide benefits “downstream.” They help an organization avoid future costs and accrue other benefits such as goodwill, customer loyalty, new customers, and increased revenue. Overall, considering these other criteria may help to improve revenue, reduce overall costs, and improve profitability, even if the organization does not save a dime on Software Testing. These are outcomes that would be missed entirely by a company focused solely on decreasing testing costs. Defining success using all of these metrics can yield a far superior Software Testing program. Developing an effective testing strategy is essential but often overlooked. Strategic QA Management Consultation can help organizations define strategies that ensure product quality meets customer expectations.