This survey on modern test team staffing completes our four-part 2017 state of software testing survey series. We’ll have more results and the “CEO Secrets” survey responses to publish in 2018.

This survey is unlike our others. Where the Test Essentials, Continuous Delivery, and Automation surveys concentrate on skills or processes, this one focuses on the “people” side of testing, particularly staffing and the factors that shape distributed test teams. The questions were designed for management and non-management alike, because distributed staffing impacts everyone.

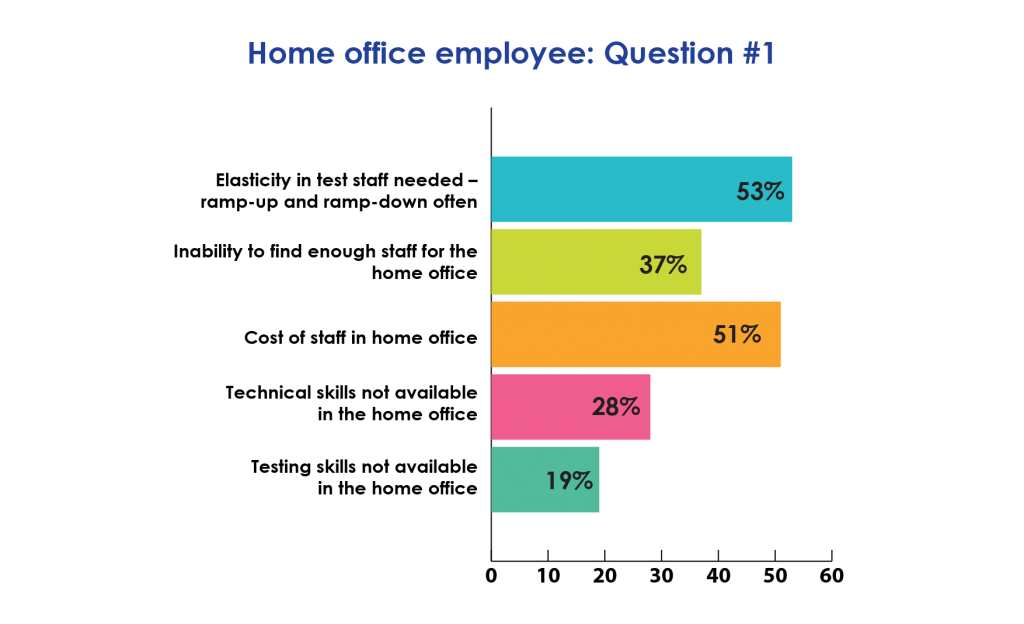

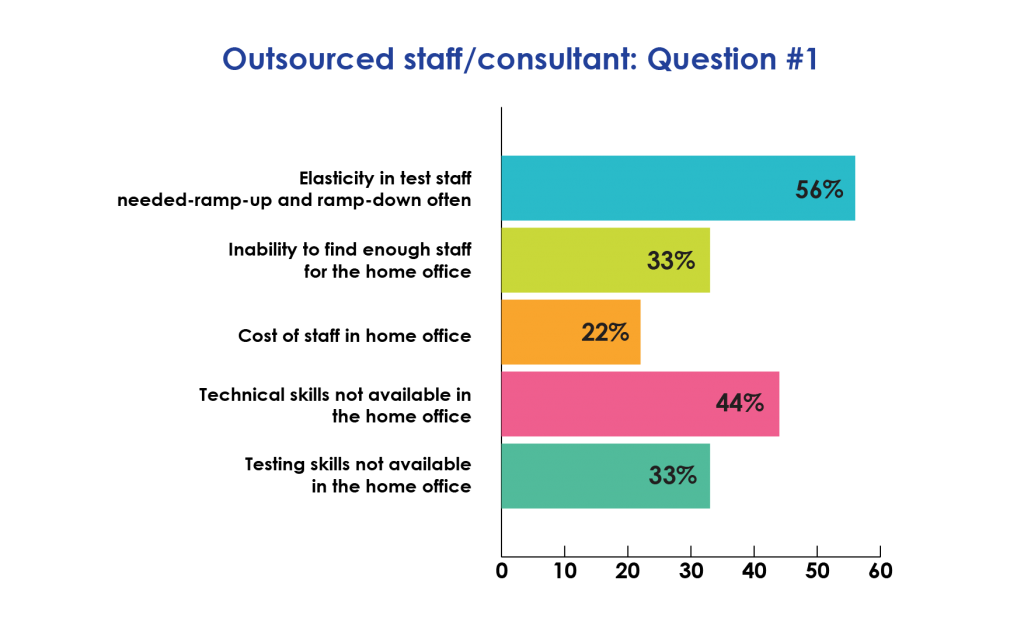

#1 What is the goal of the work distribution? (Multiple answers selected)

Respondents agree that elasticity of staff is the #1 goal for distributing work. For the home office, cost is a secondary goal. This is interesting to me, because what I often hear from managers is that they cannot find staff with certain skills. Perhaps people do not realize how hard it is to find skilled staff these days, or perhaps it is not as problematic as many people express.

From the responses of the offshore staff, #1 is elasticity, and last is cost. This differs from the home office responses: all of the missing-skill answers were higher. It’s great to see that more than half of respondents are at the right level to build and maintain tests. This is an important area of growth for all test engineers.

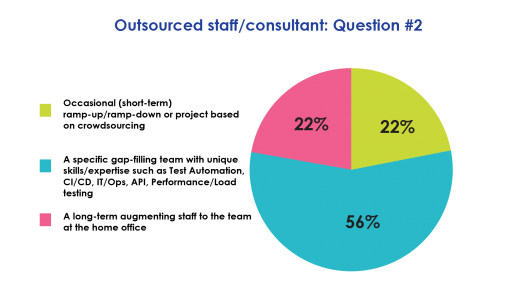

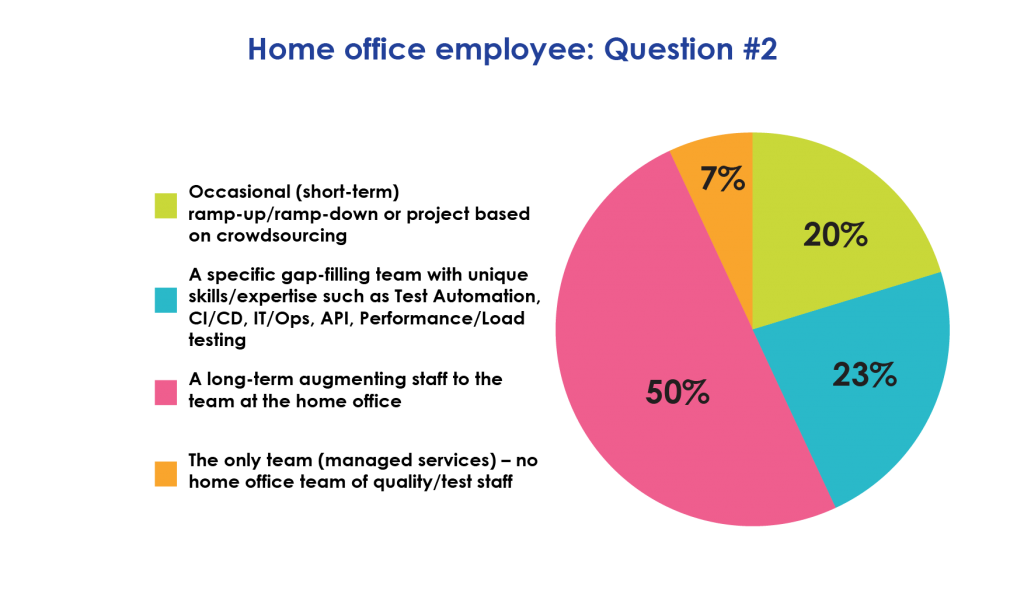

#2 The current distributed team is:

This question gives the answer I expected for #1, which is that more than half of the engagements are long-term projects. This somewhat contradicts the elasticity goal—quick up and down shifting of staff to suit a project. Long-term staffing leans much more toward skill.

Almost 75% of projects are long-term and have specific skill gap models. More than half of the offshore team’s work is to fill a gap in skills.

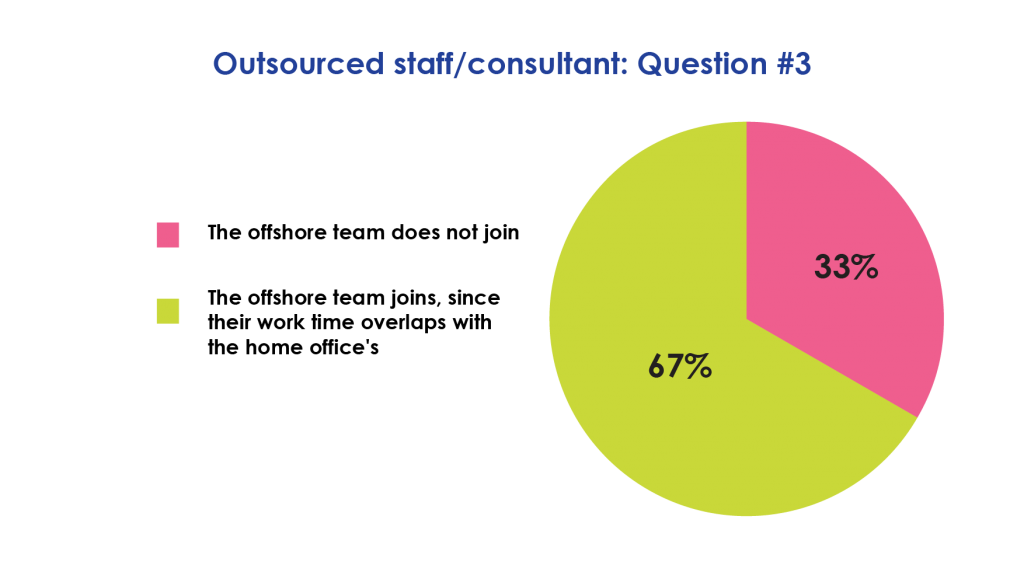

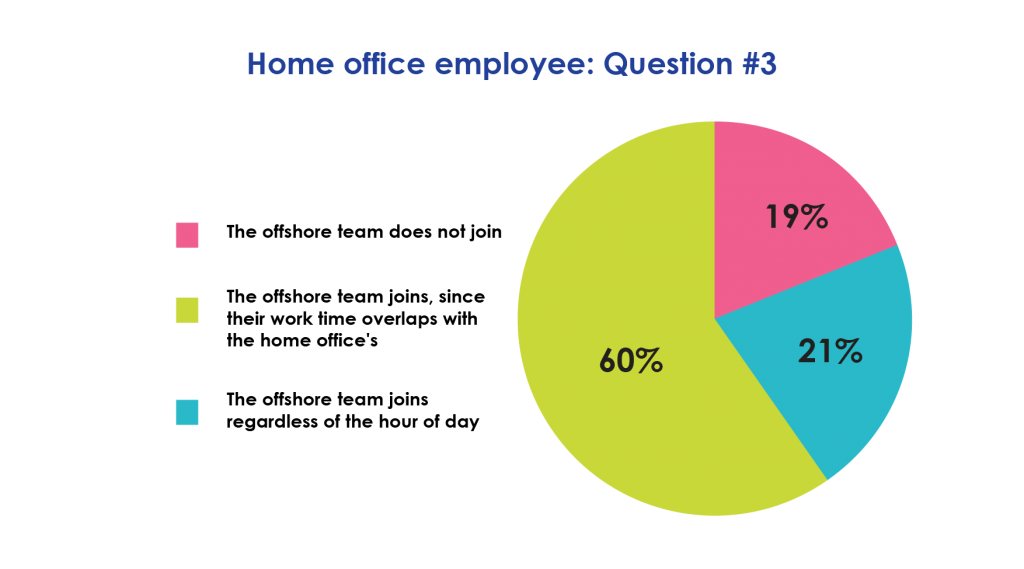

#3 If you use Scrum practices with teams outside the home office, for the daily standup meeting:

The results here are straightforward, but imply that most teams have time overlap. As I stated in the introduction, this has changed with time. Nearshoring and inshoring will have the greatest time overlap. For US-based teams, the approximately 12-hour time difference to India provides no work time overlap.
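
The overlap arithmetic can be checked with a short sketch. It is illustrative only: the 9:00 to 17:00 workday, the function names, and the UTC offsets (US Eastern standard time at UTC-5, US Pacific at UTC-8, India at UTC+5:30) are assumptions, not survey data.

```python
SLOTS_PER_DAY = 48  # half-hour slots, so an offset like UTC+5:30 is representable


def work_slots_utc(utc_offset_hours, start_hour=9, end_hour=17):
    """Return the set of half-hour UTC slots covered by a local workday."""
    offset_slots = int(round(utc_offset_hours * 2))
    local_slots = range(start_hour * 2, end_hour * 2)
    # Shift local time back to UTC and wrap around midnight.
    return {(slot - offset_slots) % SLOTS_PER_DAY for slot in local_slots}


def overlap_hours(offset_a, offset_b, start_hour=9, end_hour=17):
    """Hours per day during which both teams are inside their workday."""
    shared = (work_slots_utc(offset_a, start_hour, end_hour)
              & work_slots_utc(offset_b, start_hour, end_hour))
    return len(shared) / 2


if __name__ == "__main__":
    # Assumed offsets: US Eastern (UTC-5) vs. India (UTC+5:30).
    print(overlap_hours(-5, 5.5))  # 0.0
    # Assumed inshore pairing: US Eastern (UTC-5) vs. US Pacific (UTC-8).
    print(overlap_hours(-5, -8))   # 5.0
```

Under these assumptions, a US East Coast team shares no working hours with an India-based team, while two US offices three time zones apart share five. Shifting either team's workday changes the result, which is how many teams create an overlap for the standup.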

Attendance at daily standup or Scrum meetings is not emphasized enough. It goes beyond having regular communication with all team members. One of the implicit reasons for attending or holding the meeting is that the distributed team should be viewed as equal members of the team. For many distributed teams, feeling the same sense of “belongingness” as the home office is a difficult hurdle to overcome. Many distributed team members feel detached from their teams in the home office.

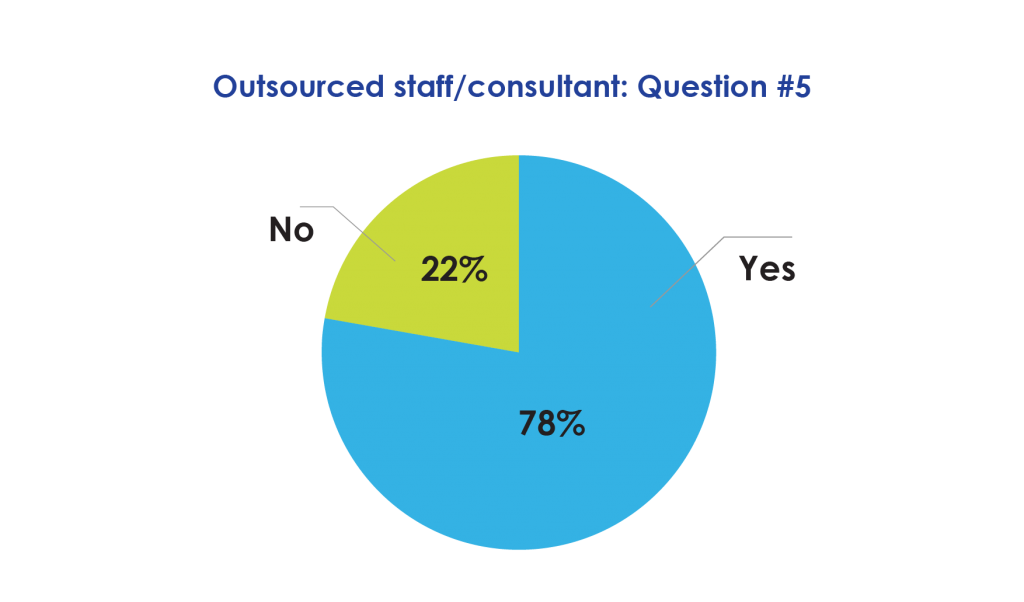

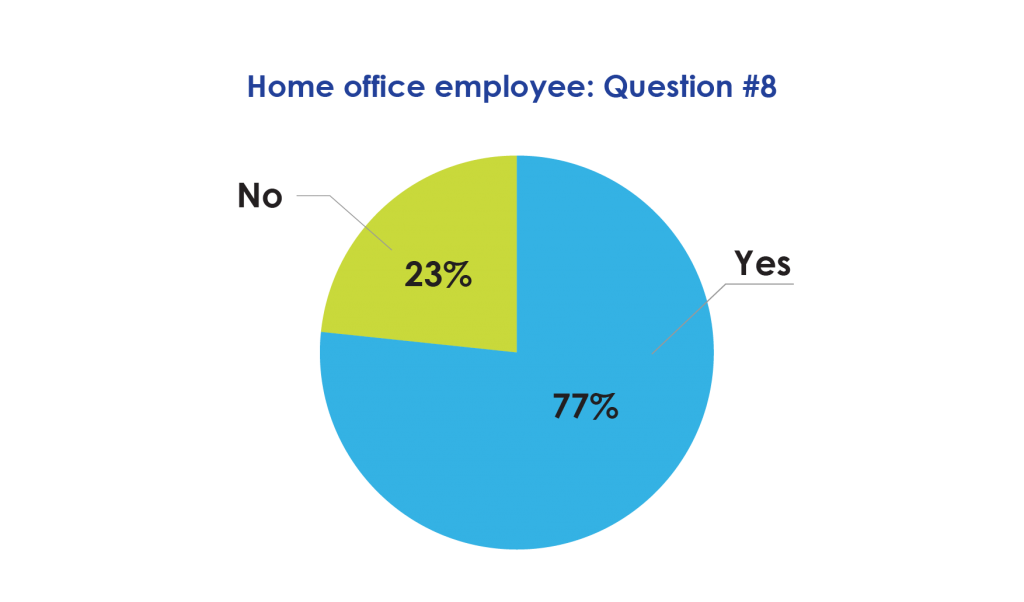

#8 and #5 Is your outsourcing/offshoring (any variety) successful or effective?

It is impressive that about 75% of respondents perceive the distribution of work as successful. A 3:1 ratio seems overwhelming. Of course, I wish the number were higher: the remaining 25% of respondents see the distribution of work as unsuccessful, and that number is too large.

At the same time, software development is hard. Many teams struggle to release high-quality products on time while still getting along and feeling good about their work. As part of my work, I see very successful teams and seriously unsuccessful teams with big people issues. Perhaps 25% of work distribution being unsuccessful is not such a substantial number after all.

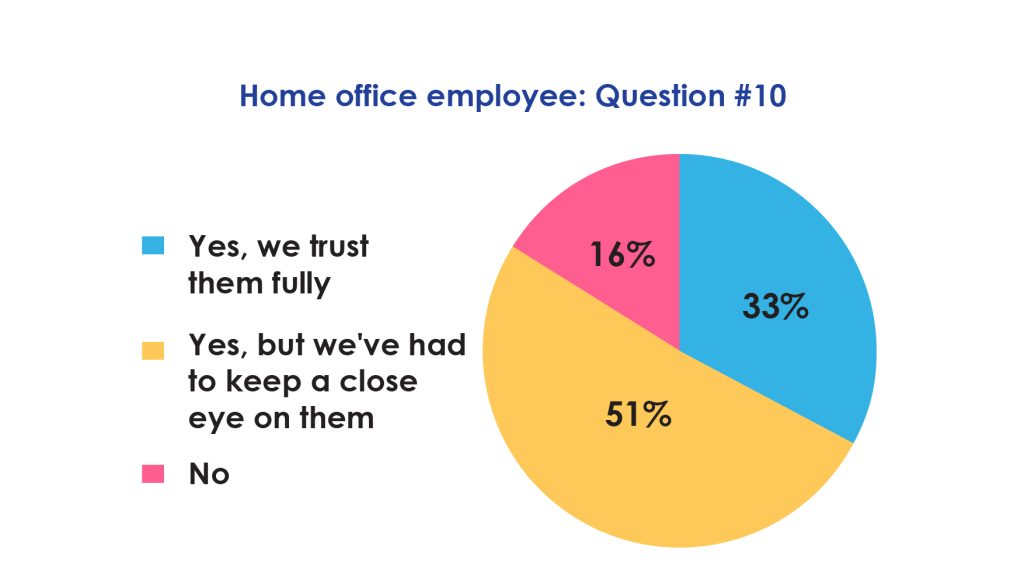

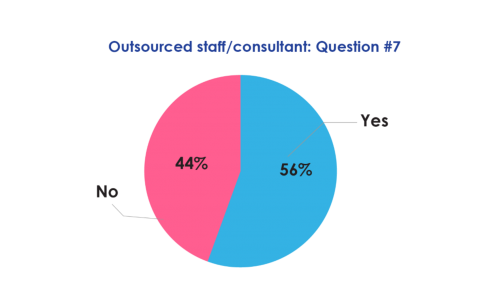

#10 and #7 Is the outsourced/offshore team respected and trusted to the same level as the internal team?

This aspect of the survey covers the difference in attitudes between teams. 84% of the home team thinks the distributed teams are respected. This result is significant.

The majority of distributed staff also say “yes,” but the number is a lot smaller: 54% say they are respected, and 45% say they are not. That second number is problematically high.

The distributed staff contributes so much to product development. If they do not feel respected in their work, the level of their work will not be what the home team needs. Having worked with so many distributed teams, including multiple US office locations, I often see a difference in the way home and offshore offices feel and in the treatment that they receive. Many times the distributed team feels bad about how a project went while the home team feels fine about it. There can be cultural issues that lead to misunderstandings about project issues, and there can be situations where no “thank you” is given to the team. There can also be issues where the home team is not happy.

There are many reasons why people in the distributed office do not feel that they are of equal value. The deadline is met, but the distributed team does not hear a word from the home office. The home teams need to do a better job of expressing their feedback and appreciation to the distributed team, whether that means saying “thank you,” “this was great,” or “this project went badly.” Any type of communication improves the relationship between the two teams.

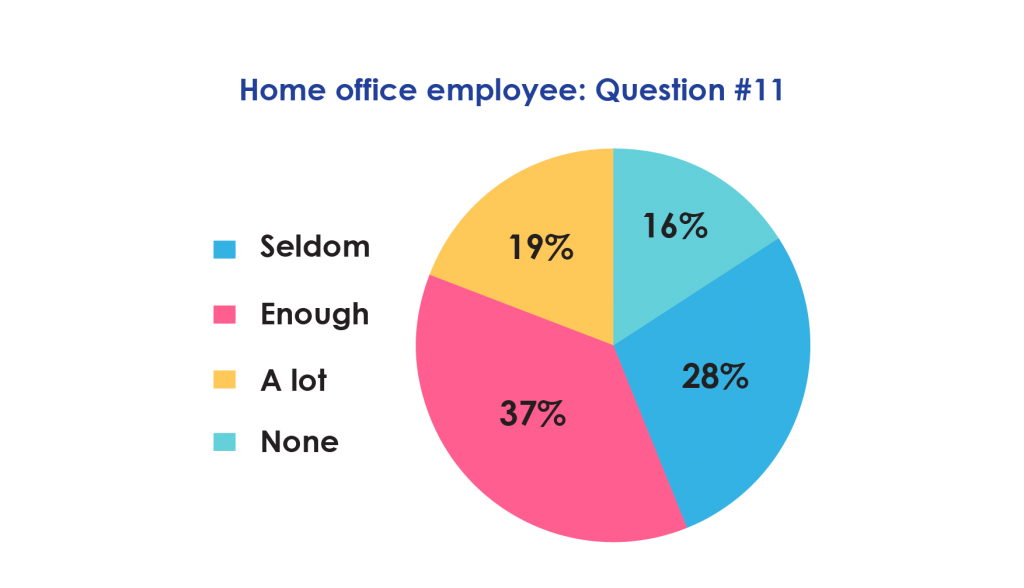

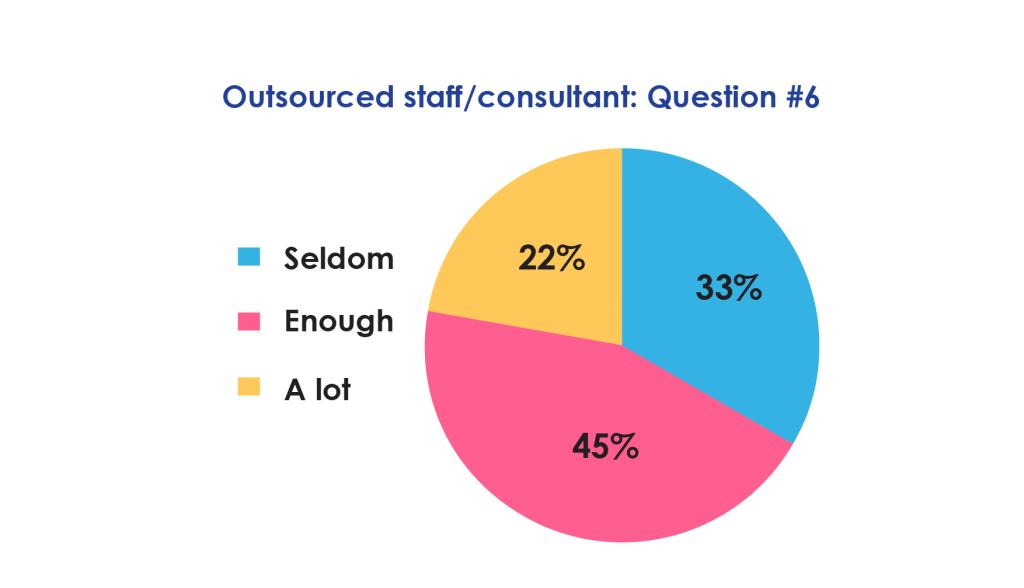

#11 and #6: How much time and effort is spent training your team?

This was an indicator question for me. The amount of training can simply be that—or it can be an indicator of the investment, care, concern, and the value that the home office is contributing to the distributed team.

About two-thirds of both the home and the distributed teams receive a lot of training or enough training. These numbers are good and much higher than in the last survey, where the majority of both the home and the distributed teams felt training was not enough. This surprised me. Perhaps it is particular to the respondents of this survey, many of whom have been distributing work for a relatively short time.

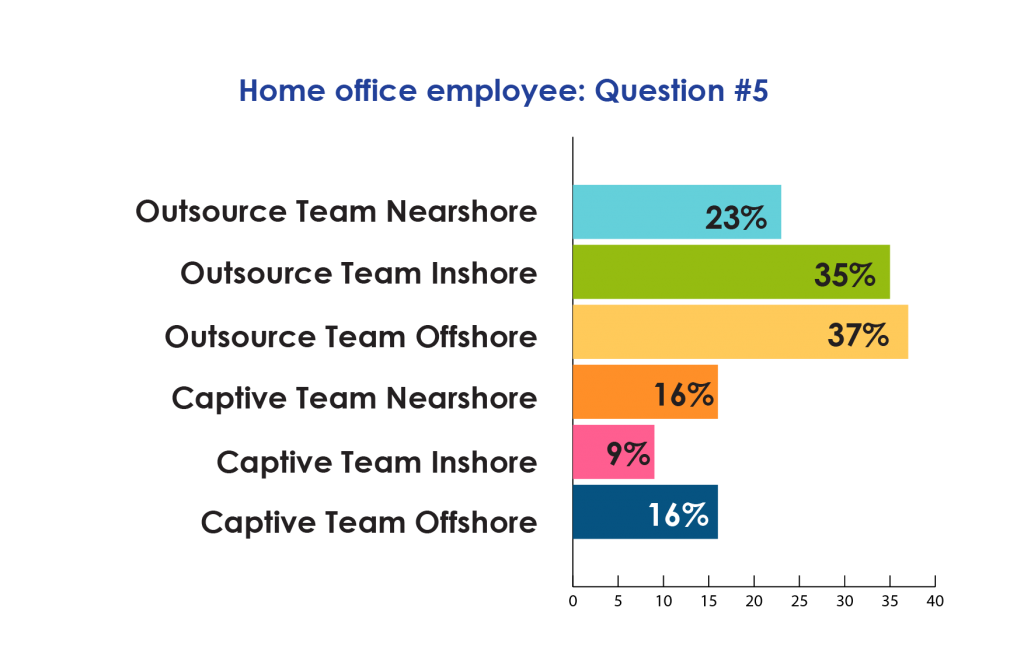

#5 Your staffing strategy includes: (Multiple answers selected)

These results speak for themselves. Outsourced teams, both inshore and offshore, account for most of the responses. It is interesting that so few of the responding companies had captive offices, meaning their own company office offshore for work distribution. Does this validate the idea that outsourced is more efficient than captive?

A captive office is a company office opened in a “low cost” area; it was once considered the optimal solution. Now, the industry trend is outsourcing that work to local management, local culture, and lower cost, with the “remote” office being treated as a separate entity. For more information on the differences between these types of outsourcing, please refer to the glossary.

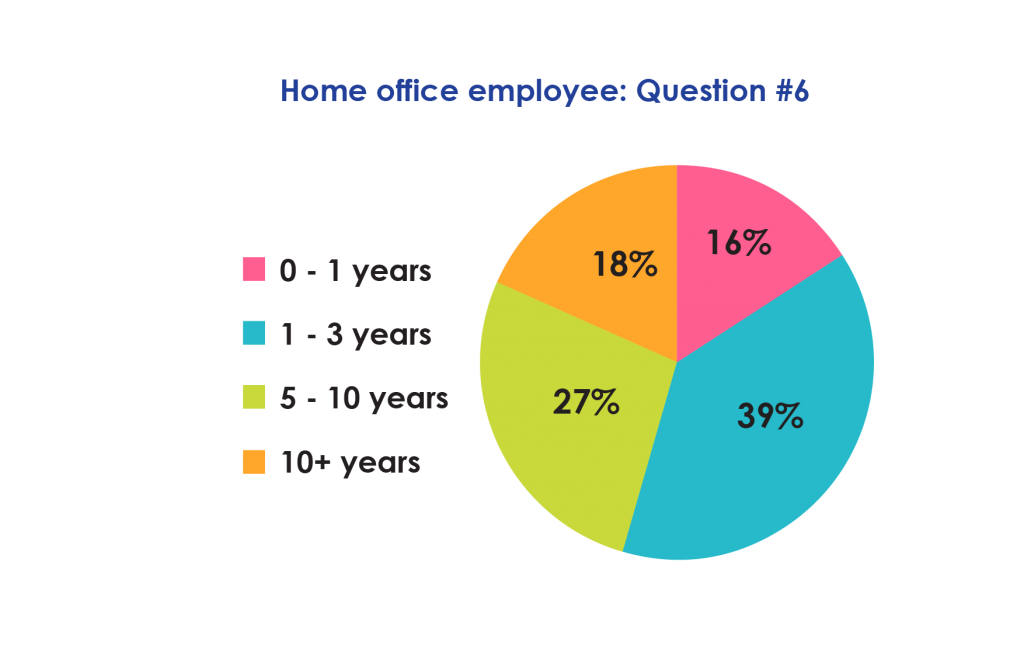

#6 How long has your organization had a distributed model?

This response surprised me. Work distribution has been growing in so many industries since the early 2000s that, for many teams, it seems like a normal part of software development today. Perhaps the respondents to this survey represent a new wave, or a broader mix of organizations: not only large enterprises but also smaller organizations doing more work distribution. There are two other important factors possible here as well.

The “newness” to outsourcing may reflect companies that are recovering from the Great Recession and attempting to outsource again. It could also reflect companies coming out of the latest Tech Boom and trying to outsource for the first time, or the tightening labor market in the US. Also, many test companies reevaluated team size as they became Agile. It could be that, now that they have adjusted to the new development paradigms, they are reevaluating the best uses for outsourcing.

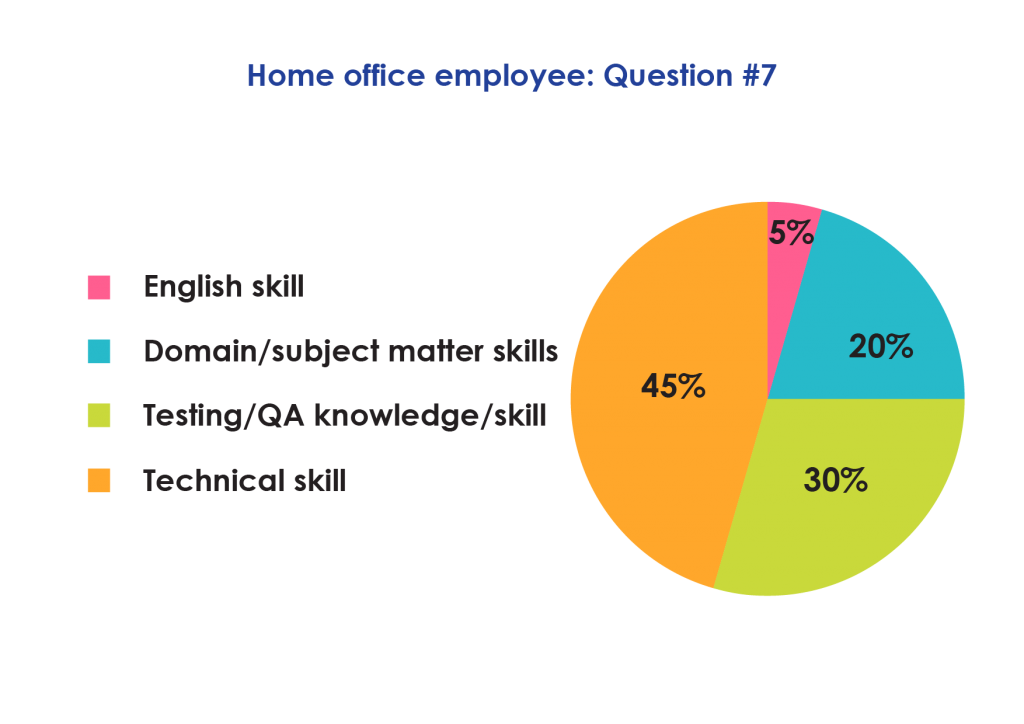

#7 What skills do you want the most when it comes to picking a distributed team?

The biggest need is for technical staff, which is exactly what I expected. US test/quality teams traditionally build more for subject matter expertise, focusing on customer experience and satisfaction, than for technical testers. The modern movement toward technical testers increases the technical work in the requirements and in the daily workload. This shift pertains not only to more technical test automation but also to more technical gray box and API testing.

I am surprised that the domain/subject matter skills are the primary need for only 20% of respondents. There have been many articles written about how more corporations are looking for domain expertise in distributed staff. Take financial services, for example. Having financial services-savvy staff testing financial software is supposed to be in high demand.

Based on these results, this may have been more of a news story than a real requirement, or it may be a need that went unfulfilled because hiring for it is hard. Finding, for example, graphic designers to test a graphics or web design product in “low cost” work distribution locations is never as easy as some might think.

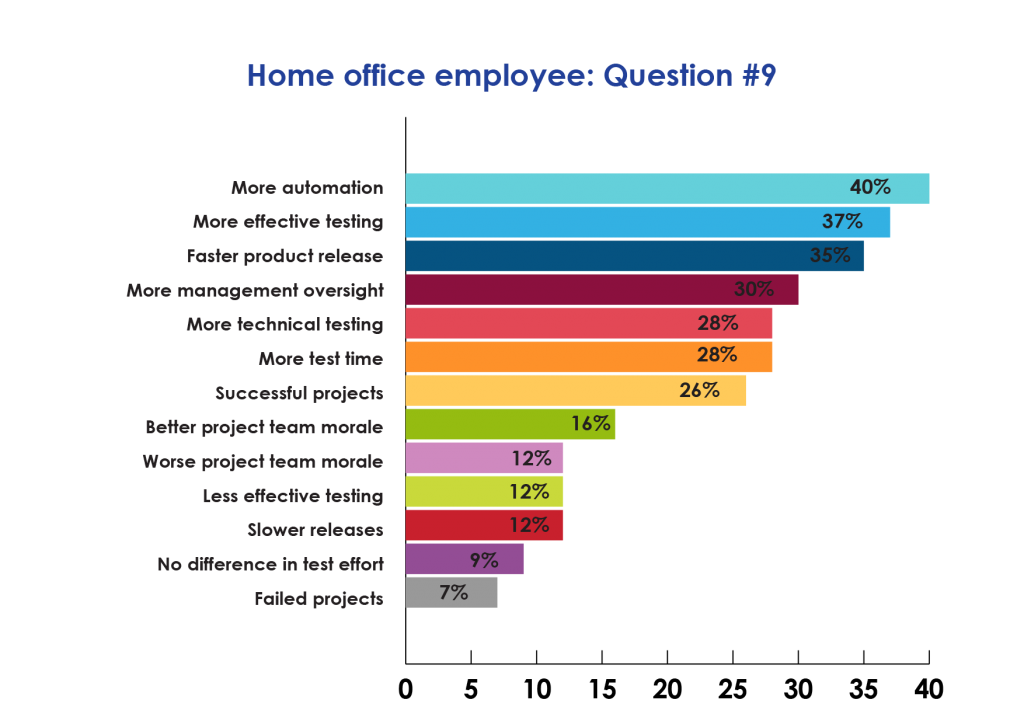

#9 What is the biggest impact of outsourcing/offshoring of testing: (Multiple answers selected)

It’s stellar that the major benefit of distributing work is automation. A large number of software development organizations need more automation. Automation from the distributed team, coupled with a US-based focus on subject matter expertise staff as opposed to tech staff, covers the tech staffing gap for more automation.

Past this first answer, there are clusters of answers:

For about one-third of respondents, distributing work means a combination of faster product release, more test time, more automation, and more technical testing. This is all good and the goals of work distribution are met.

There is also a smaller cluster of responses regarding project problems: worse project team morale, less effective testing, or delayed releases. For about 1 out of every 8 respondents (12%), there are project problems. Clearly, this is not a good thing. I would suggest reevaluating the needs of the work distribution and shaking things up. Even for Continuous Improvement’s sake, with some attention, things always get better.

More management oversight was chosen by approximately one-third of respondents. All leads or managers face this factor with all distributed staffing. There needs to be more management oversight. The goal is to optimize daily and weekly management time through communication infrastructure, broad training, and building trust in all locations.