Find out how you compare to others in our survey results

Test Automation is the topic of the third survey in our State of Software Testing Survey Series. This survey covers skills, tools, benefits, and problem areas of test automation.

We all know that automation is a necessity, but there is such a diversity in the required solutions that it distracts us from improvement across the board. For example, too many people immediately segregate API level automation from mobile automation. Yes, they are different, but they both need investment, design for maintainability, good reporting, integration into the tool chain, skill growth and training, etc. All these factors can be applied across the board to all test automation projects. This survey’s goal is to look at all levels of test team automation to identify issues and to take the pulse of what’s happening in the software development world today.

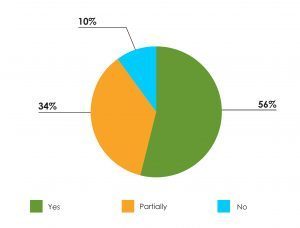

#1 Do you, or your current team, possess enough technical skills to build and maintain test automation?

Luckily, the majority answered yes, but barely more than half say they have the skills.

There are levels of skills needed here, as there are in any form of software development. They are defined as:

- Organizing and creating the tests

- Running and maintaining test suites

- Automating the tests

Just as software development has architects, developers, designers, etc., Test Automation has its own levels. The first level is organizing and creating good, meaningful, and effective tests. This is based on testing/QA skills. The next level is running and maintaining the created test suites. This is based on knowledge of the automation tool. The level after that is automating the tests in the automation tool. This is based on knowledge of the tool and the language used to author tests in that tool, as well as an understanding of the system under test.

It’s great to see that more than half of respondents are at the right level to build and maintain tests. This is an important area of growth for all test engineers.

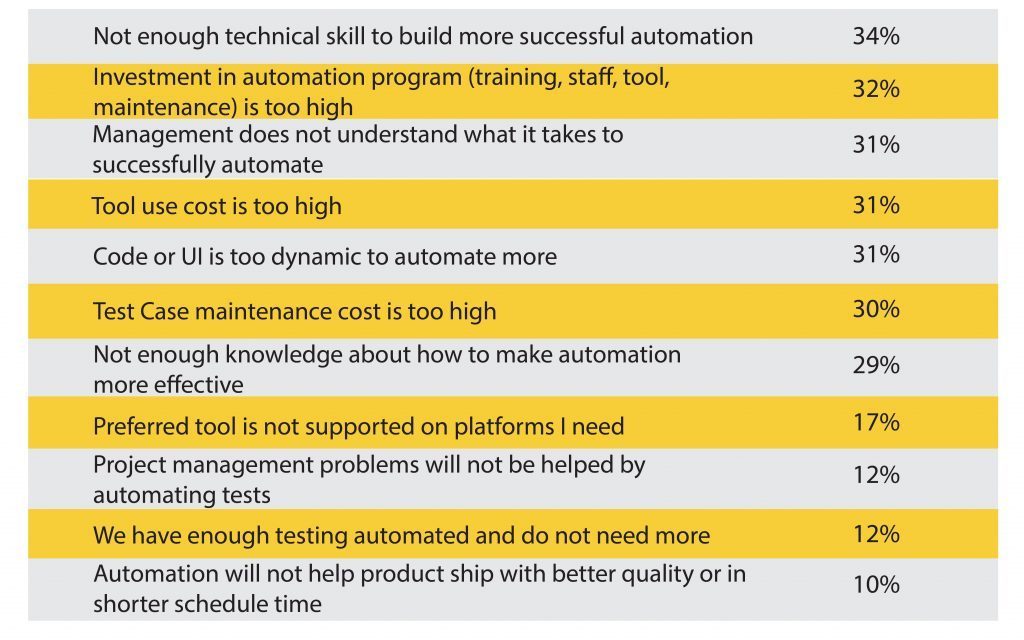

#2 What would prevent you from automating more tests?

Multiple answers selected

The range of answers, and how prevalent they are, surprised me here.

In our survey from a few years ago, the #1 answer, by far, was that management does not understand what it takes to make a successful automation program. That percentage has gone down. However, almost one-third of the respondents suffer from so many classic problems, and that is disappointing.

If we look at a few answers together (tool cost, maintenance cost, and management not understanding what it takes), they are all factors that can make or break automation. Automation is a part of software development, but it gets much less attention. While it’s true that automation is not at the same level as production code, it still needs architecture, design, and skill. Most importantly, it needs serious investment and management!

Test Automation also needs serious time and investment. Even as tools get better and better, a bad automation architecture will incur large maintenance costs. The management and investment need to be in place from the start.

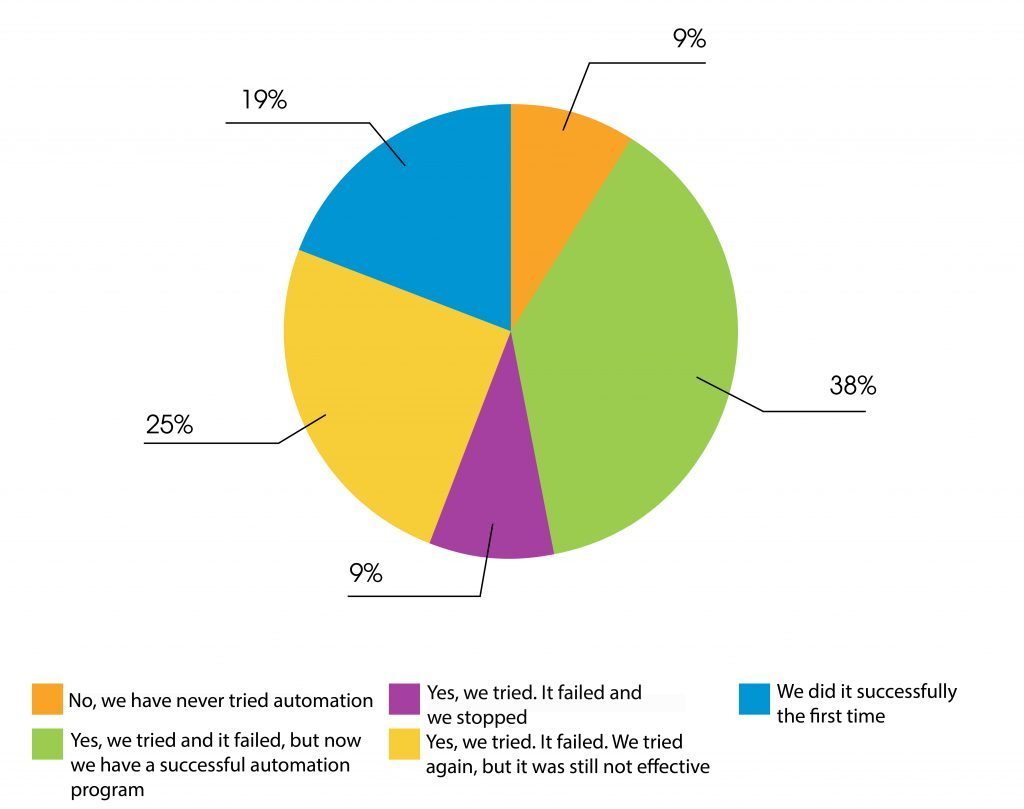

#3 Have you tried automation programs in the past and failed?

There is a lot to glean from these results. It is great that more than a third of respondents (38%) at least tried automation. The program might have failed the first time, but they persevered, worked through problems, and now have a successful program! For the almost one-fifth who were successful the first time: congratulations! It should be noted that 43% of respondents still do not have a successful program, for a variety of reasons. That is significant. The most notable reasons were given in #2, namely the management and investment needed to treat test automation like software development. I encourage you to try, or try again, and work to get automation treated as a significant project.

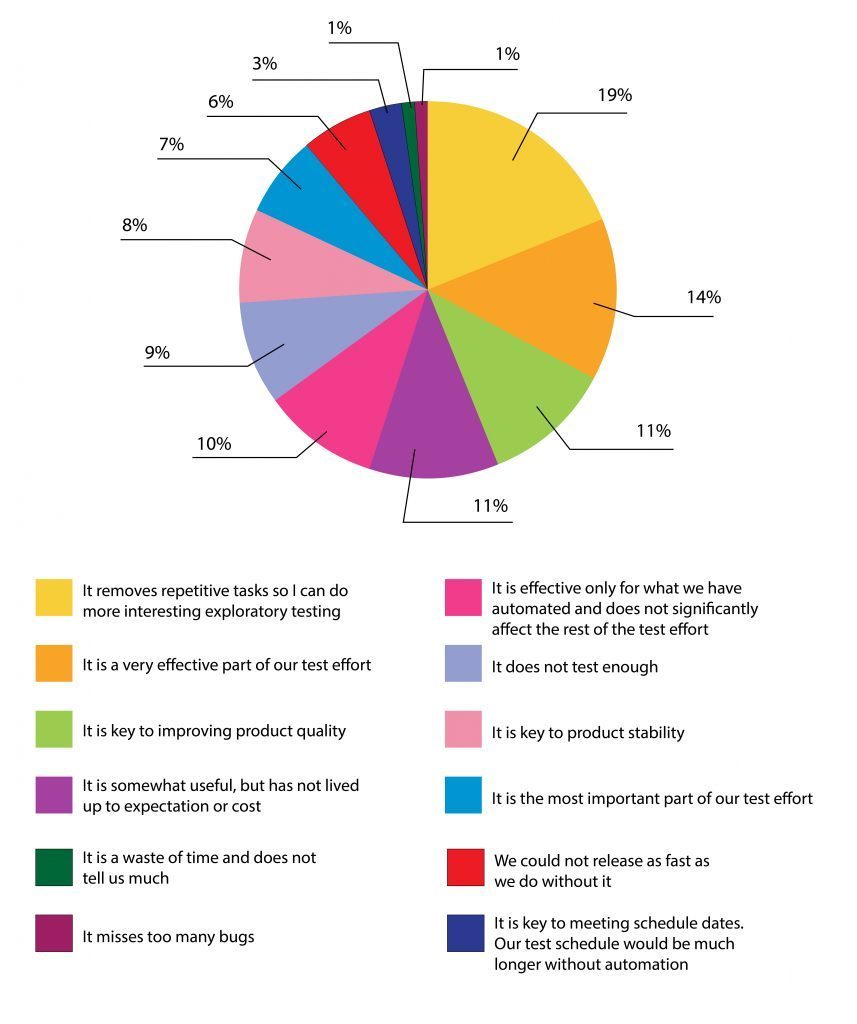

#4 How would you best describe your automated test effort?

The distribution of answers here shows the variety in the usefulness of test automation.

But the most popular answer is also the best: test automation frees up more time to do exploratory, bug-finding tests.

In contrast, the answer that the automation program has not lived up to expectations and does not test enough is problematic. Test teams need to work hard to not let this happen. If test automation gets a bad rap internally, it can often have a long-term negative impact on its overall reputation. This will affect future investment, maintenance, and training by the organization.

Most importantly, the least-selected choices are the most serious: that it is a waste of time, and that it misses bugs. I am glad these choices are in the minority when considering the volume of responses.

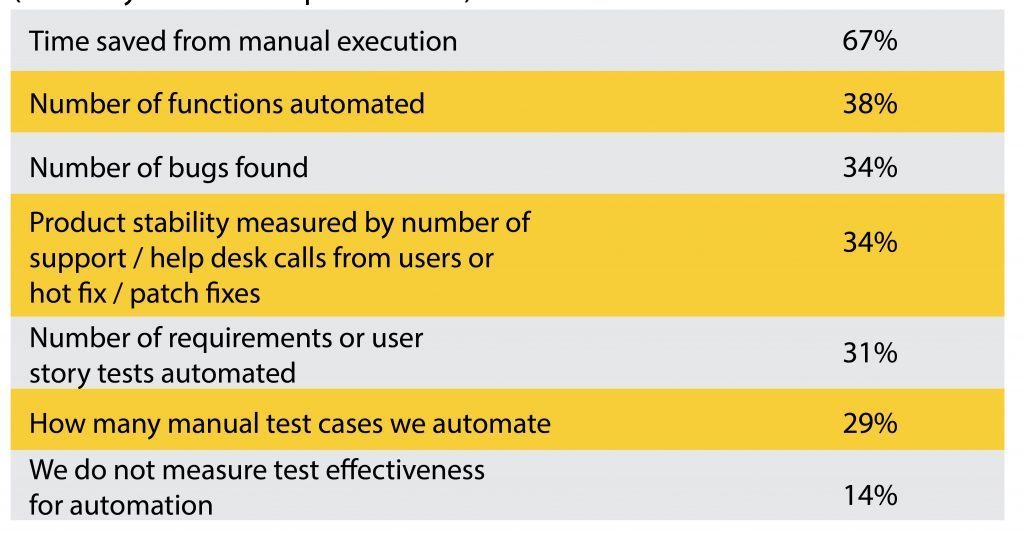

#5 How do you measure test automation effectiveness?

Multiple answers selected

Measuring the benefit of test automation can be complicated. Time saved is the most obvious measure, and one most development teams appreciate and rely on. Number of bugs found is interesting. If these bugs would not be found by manual testing and the automation keeps customers from encountering them, this is a very important metric. Remember, test automation is great for finding breaks in existing functionality, but it will not usually find bugs in new functionality, since there is often relatively little automation around new functions.

Measuring support calls is a great and direct view into customer satisfaction and quality. However you measure the success of your automation program, it is important to show the whole development team and management the value of test automation to ensure future investment, maintenance, and growth.

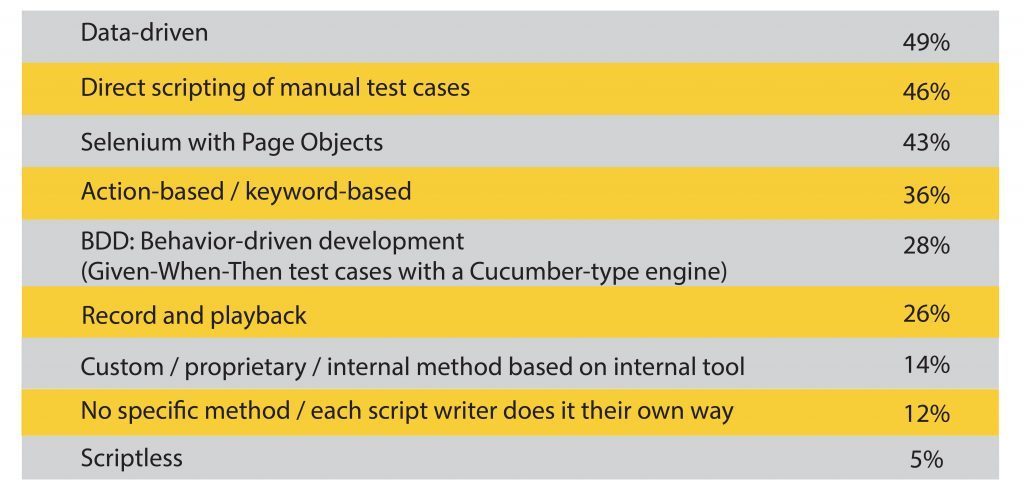

#6 What strategy or framework do you use to improve the productivity of designing and implementing your automated tests?

Multiple answers selected

Having a method for your automation is most important. Most tools these days use some sort of framework to greatly facilitate automation and reduce maintenance. The fact that data-driven is the #1 answer is expected; it is the easiest to implement and maintain. Its use in scenarios and more complicated tests is limited and difficult to implement, but it is a great starting place.
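As a rough illustration of why data-driven tests are easy to maintain, here is a minimal Python sketch. The function under test (`apply_discount`), the data rows, and the runner name are all hypothetical and not taken from the survey or from any particular tool; the point is only that the test logic is written once and new cases are added as rows of data.

```python
# Hypothetical function under test; it stands in for a call into a real system.
def apply_discount(price: float, percent: float) -> float:
    return round(price * (1 - percent / 100), 2)


# Test data lives apart from the test logic: adding a case means adding a row.
CASES = [
    # (price, percent, expected)
    (100.00, 0, 100.00),
    (100.00, 25, 75.00),
    (80.00, 50, 40.00),
]


def run_data_driven(cases):
    """Run the same check against every data row and return the failing rows."""
    failures = []
    for price, percent, expected in cases:
        actual = apply_discount(price, percent)
        if actual != expected:
            failures.append((price, percent, expected, actual))
    return failures


if __name__ == "__main__":
    # An empty list means every row passed.
    print(run_data_driven(CASES))
```

The same shape also hints at the limitation mentioned above: a single row of inputs and one expected value fits simple checks well, but multi-step scenarios do not reduce to a flat table so easily.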

Direct scripting and record & playback are often more troublesome. It’s worth bringing attention to the fact that almost half (46%) of respondents use direct scripting and 26% use record and playback. Combined, this accounts for almost a quarter of the answers, and what’s troublesome is that these are traditionally the tests with the highest maintenance cost!

The maintenance cost of direct-scripted and record-and-playback tests can ruin an automation program and bury the team in maintenance, unless you have stored function libraries built with an ABT-type organization or a similar solution.

I suggest that you do your research before you start your implementation. Look into a few test automation methods, choose one that is suitable, and then begin implementing more structured and reusable tests in order to reduce maintenance costs.

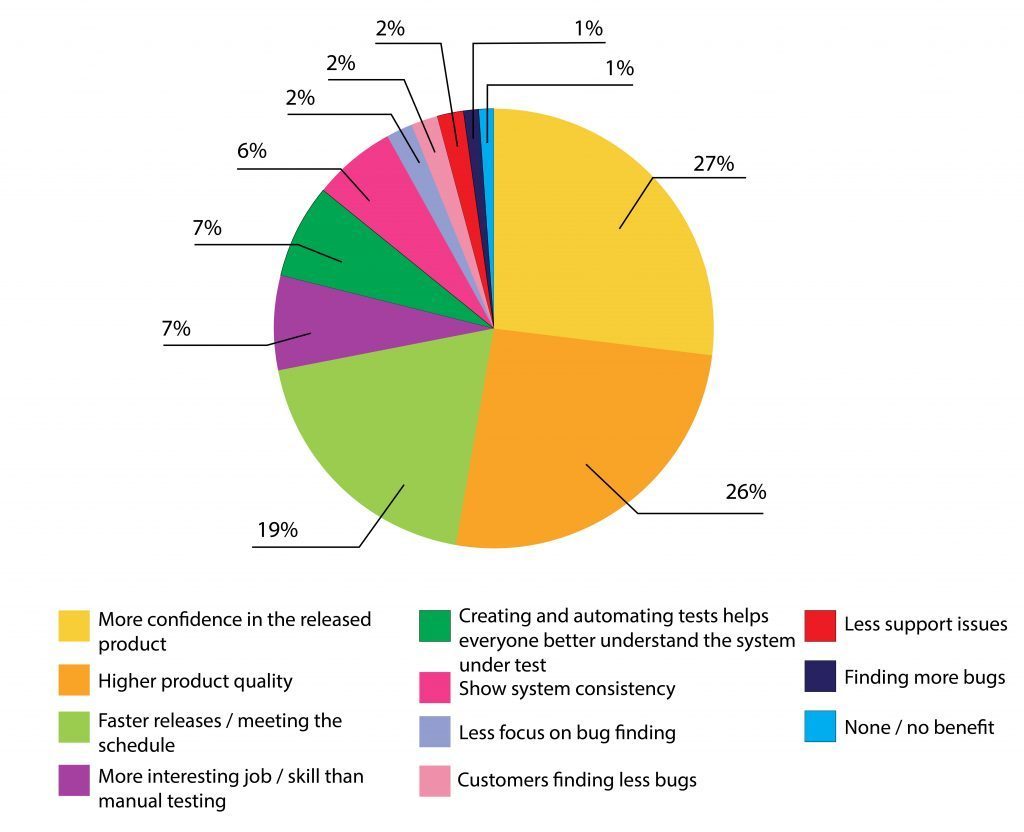

#7 What is the one main benefit of test automation you expect to gain?

This survey question does not have as much of a response spread as the others.

The main benefits of automation listed by our survey takers are higher confidence, increased quality, and faster releases.

It is disappointing to see that reduced support calls is not ranked higher; hopefully it is an important secondary benefit. It’s also good to see that the primary benefit is not finding bugs, since most teams use methods other than automated regression testing for bug finding.

I’m especially glad to see that "no benefit" was recorded in only one response. To me that means that this survey taker is both unlucky and the outlier, and that the majority of our respondents have gained some benefit out of automation.

"Shows system consistency" is an answer you should keep an eye on. A few years ago, this may not have received any selections. I bet in the next few years this choice will grow in importance as teams adopt DevOps. DevOps/Continuous Delivery is focused on small releases with production line delivery. A stated goal of test automation is to assess the system consistency from release to release. Running full regression suites often takes too long to be effective as immediate feedback, which is crucial in DevOps. So it’s usually the case that a smaller suite of tests, and not a full automation suite, is selected for the purpose of showing system consistency.
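One way a team might carve a small consistency suite out of a larger one is sketched below in Python. The tagging scheme, the recorded durations, the time budget, and all names are assumptions made for illustration; the survey does not describe how respondents select these tests.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AutomatedTest:
    name: str
    tags: frozenset
    seconds: float  # typical run time, assumed to be known from earlier runs


def select_consistency_suite(tests, tag="smoke", budget_seconds=60.0):
    """Pick tests carrying `tag`, shortest first, until the time budget is used up."""
    chosen, used = [], 0.0
    tagged = sorted((t for t in tests if tag in t.tags), key=lambda t: t.seconds)
    for t in tagged:
        if used + t.seconds > budget_seconds:
            break
        chosen.append(t.name)
        used += t.seconds
    return chosen


# Hypothetical suite: three quick smoke tests and one long regression test.
SUITE = [
    AutomatedTest("login", frozenset({"smoke"}), 20),
    AutomatedTest("checkout", frozenset({"smoke"}), 30),
    AutomatedTest("full_report", frozenset({"regression"}), 400),
    AutomatedTest("search", frozenset({"smoke"}), 25),
]

if __name__ == "__main__":
    print(select_consistency_suite(SUITE))
```

Shortest-first is only one possible policy. A team could just as reasonably rank by business risk or by recent failure history and keep the full regression suite for a slower, scheduled run.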

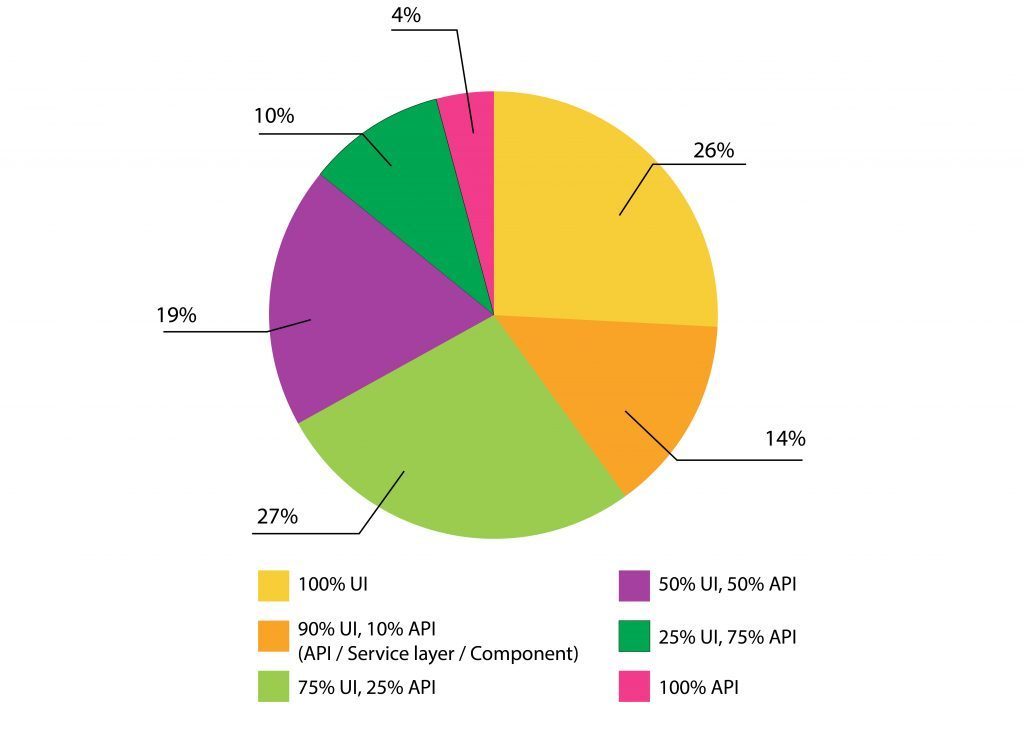

#8 At what level do you automate UI or API?

This spread of answers is a great sign that lower level test automation is happening more often.

Just over one-quarter of the respondents say that they automate only at the UI level, while 74% have some automation at the API layer. This number has increased since our last survey. Service-oriented architecture (SOA) is moving full steam ahead and will only continue to grow as the use of container tools for development grows. The use of containers necessitates automated tests at the API/service layer.

One thing worth noting is that this growth will have a long-term impact on the tools and skills needed to automate tests.

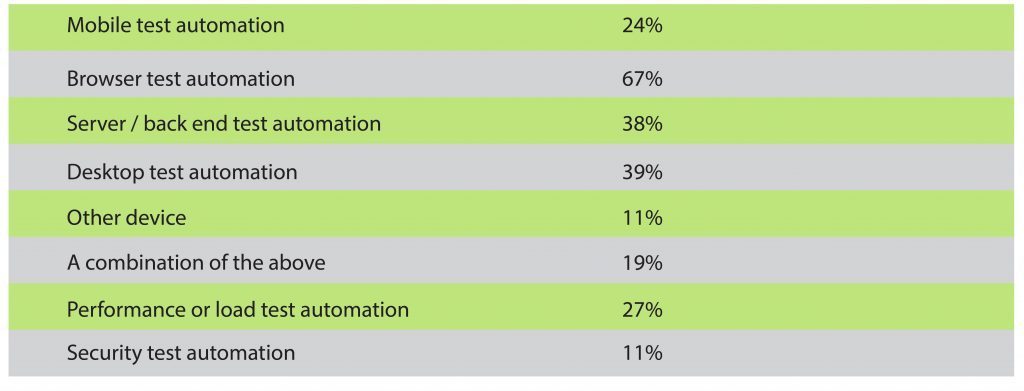

#9 What areas of test automation are you responsible for?

Multiple answers selected

The results of this question give us a snapshot of the type of automation happening today. About one in five respondents must run automated tests across mobile, browser, desktop, and server software. It’s no surprise so many people do automated browser testing, but I am surprised only about one quarter (24%) do mobile testing. With so much focus on mobile growth, SMAC platforms, and mobile migration of enterprise apps, I would have thought that this answer would be more popular. Perhaps it is still new to enough teams that testing is not yet automated for them, or the tools to test it still need to mature. It could also be the case that there aren’t enough skills on the respondents’ teams to satisfy this need.

It is important to recognize that almost 40% (38% and 39%, respectively) automate server and desktop testing. These are decidedly less glamorous test automation areas today, but are clearly still in high demand.

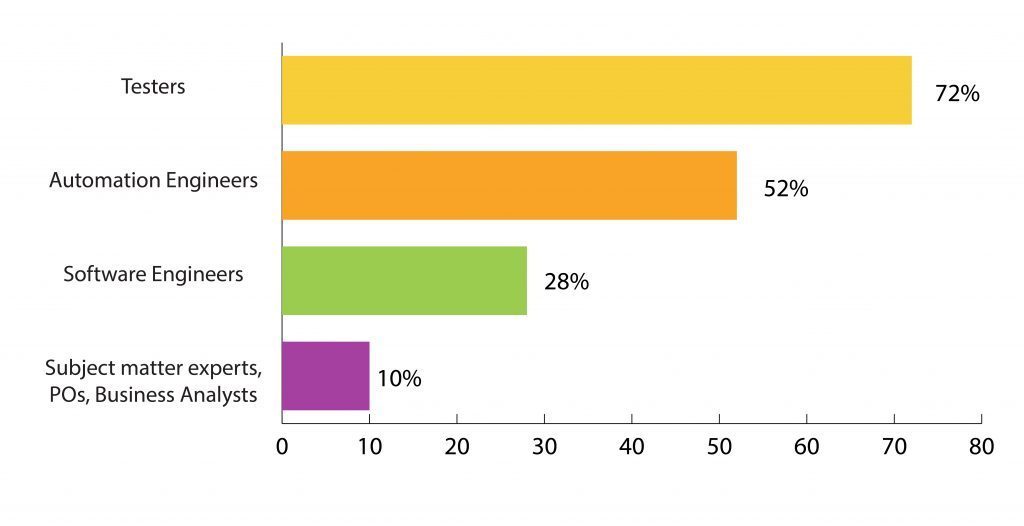

#10 Who designs and writes tests for automation?

Multiple answers selected

The fact that Testers and Automation Engineers are the primary test automators is expected. What is surprising to me is that the percentage of Subject Matter Experts/BAs/POs who automate remains quite low. There has been a lot of growth in tools, and quite a lot has been written about business-readable languages as well as domain-specific languages (DSLs). Therefore, I thought this answer would have been higher.

28% of respondents say Software Engineers also automate tests. This is a newer development, and mostly due to Scrum and modern development requiring cross-functional teams, with hybrid engineers tasked with more testing.

This question also shows the progression of testing from being primarily the responsibility of staff with domain/subject matter skills to mostly technically skilled staff, with 72% of Testers automating tests. This is a move in the right direction.

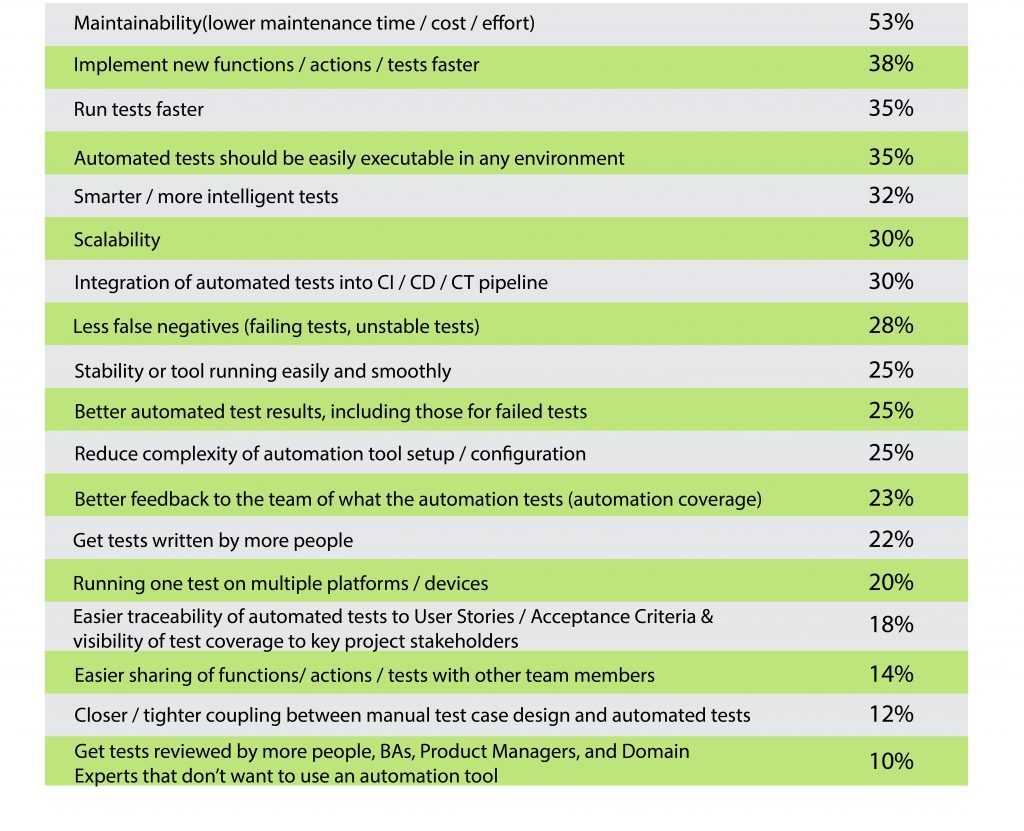

#11 What areas of your test automation do you wish to improve?

Multiple answers selected

It is no surprise that lower maintenance costs are the #1 area to improve upon. In my work, I have largely been focusing on teams beginning automation. I haven’t been focusing on building automated tests quickly or in large numbers. Instead, I have been concentrating solely on design for low maintenance and reusability with a robust framework. At first, everyone focuses on tool cost when it comes to automation, and then it’s time to ramp up. Teams need to reconsider this way of thinking, because focusing only on tool cost can incur high maintenance costs and can kill an automation program.

To prevent this, focus on lower cost maintenance from the start! It will pay off tremendously. An area for tool improvement that everyone agrees on is supporting more environments, platforms, and devices.

One more notable result is that 30% of respondents say integrating with the pipeline/tool set needs to be improved in automation tools. This demand will only grow as CI and CD processes grow in usage.