I’ve been teaching a lot lately: I spent a week in India, and I’m off to Seattle in two weeks to teach on performance topics. I thoroughly enjoy teaching. It keeps me sharp on current trends and provides a nice break from the “implementation focus” I generally have day to day.

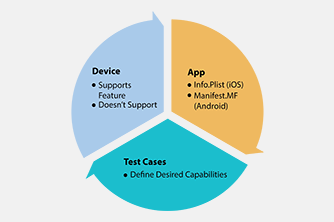

One topic, however, that has been gnawing at me lately is the notion of “continuous iteration” in automation. With virtualization, my feeling is that you should be running the automation you create all the time. I’ve coined a term for this: “Test Everything all the Time”.

I find that many automation teams shelve their automation until a run is needed, and this is becoming a real bone of contention when it comes to automation credibility. The automation should always be in a state of readiness, so that I can run all my tests all the time if I want to. We used to be plagued by machine constraints and “unstable” platforms, but I’ve found these issues are becoming less and less common, yet we still seem to be holding on to old automation ideas.

I’ve set up our labs here with machines that are constantly running our clients’ automation, while the testers keep adding more tests to that bank. Automation should free us up to do this, but I still see too many people babysitting automated test runs, which to me is like watching paint dry.

We have a “readiness” dashboard covering all of our tests all the time, so if I choose to run a set of tests immediately, we can dispatch those tests to a bank of machines and run them. It makes my job as a quality director easy: I never, ever have to ask whether the automation is “ready”, because I have that information at my fingertips for all types of tests. I’ve attached a simple dashboard report that I’ve used since I started working on automation projects in the early ’90s.
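Dispatching a chosen set of tests to a bank of machines is essentially a scheduling problem. The sketch below is a minimal Python illustration, assuming each test has a known run time in seconds. The `AutomatedTest`, `dispatch`, and `makespan` names are hypothetical, and the greedy longest-first rule is a common heuristic swapped in for illustration, not necessarily how the dispatch described here actually works.

```python
import heapq
from dataclasses import dataclass


@dataclass
class AutomatedTest:
    name: str
    seconds: int  # assumed: a known, measured run time for this test


def dispatch(tests: list[AutomatedTest], machine_count: int) -> list[list[AutomatedTest]]:
    """Assign tests to machines with a greedy longest-processing-time rule.

    Tests are sorted longest first, and each one is queued on the machine
    that currently has the least work assigned to it.
    """
    if machine_count < 1:
        raise ValueError("need at least one machine")
    # Min-heap of (queued_seconds, machine_index): the least-loaded machine pops first.
    heap = [(0, i) for i in range(machine_count)]
    queues: list[list[AutomatedTest]] = [[] for _ in range(machine_count)]
    for test in sorted(tests, key=lambda t: t.seconds, reverse=True):
        load, idx = heapq.heappop(heap)
        queues[idx].append(test)
        heapq.heappush(heap, (load + test.seconds, idx))
    return queues


def makespan(queues: list[list[AutomatedTest]]) -> int:
    """Wall-clock seconds until the busiest machine finishes its queue."""
    return max((sum(t.seconds for t in q) for q in queues), default=0)
```

For example, six tests of 300, 200, 200, 100, 100, and 100 seconds dispatched to two machines end up with 500 seconds of work on each machine, so the run finishes in 500 seconds instead of the 1,000 seconds a single machine would need.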

Remember, your automation needs to be credible and usable, not one or the other.

| Readiness dashboard (7-Sep-09) | Value |
|---|---:|
| Test Cases Created and in Development | 25 |
| Test Case Create Goal for Week | 40 |
| Goal Delta | -15 |
| Test Cases to be Certified | 150 |
| Test Cases Modified* (timing only) | 5 |
| TOTAL TEST CASES IN PRODUCTION | 1253 |
| Passive (Functional) Test Cases | 631 |
| Negative Test Cases | 320 |
| Boundary Test Cases | 40 |
| Work Flow Test Cases | 78 |
| User Story Based Test Cases | 24 |
| Miscellaneous Test Cases | 160 |
| Test Cases Ready for Automation | 1065 |
| Test Cases Ready for Automation % | 85% |
| Test Cases NOT Ready for Automation % | 15% |
| Test Cases NOT Ready for Automation | 188 |
| Test Case Error/Wrong | 5 |
| Application Error | 23 |
| Missing Application Functionality | 59 |
| Interface Changes (GUI) | 2 |
| Timing Problems | 70 |
| Technical Limitations | 6 |
| Requests to Change Test (change control) | 23 |
| Sum Check | 188 |
| TOTAL MACHINE TIME REQUIRED TO RUN (h:mm:ss) | 23:45:00 |
| TOTAL MACHINES AVAILABLE TO RUN TESTS | 7 |
| TOTAL SINGLE MACHINE RUN TIME (h:mm:ss) | 3:23:34 |
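The derived rows in this report are simple arithmetic on the raw counts. Here is a minimal Python sketch that recomputes them from the values above, assuming the six test-type rows add up to the production total (they do: 1253) and that the single-machine run time is the total machine time split evenly across the seven machines. The variable and function names are hypothetical; this is not the tool that produced the original report.

```python
from datetime import timedelta

# Raw inputs transcribed from the 7-Sep-09 dashboard.
created_this_week = 25
weekly_goal = 40
by_type = {
    "Passive (Functional)": 631,
    "Negative": 320,
    "Boundary": 40,
    "Work Flow": 78,
    "User Story Based": 24,
    "Miscellaneous": 160,
}
ready = 1065
not_ready_reasons = {
    "Test Case Error/Wrong": 5,
    "Application Error": 23,
    "Missing Application Functionality": 59,
    "Interface Changes (GUI)": 2,
    "Timing Problems": 70,
    "Technical Limitations": 6,
    "Requests to Change Test (change control)": 23,
}
total_machine_time = timedelta(hours=23, minutes=45)
machines = 7


def derived_rows() -> dict:
    """Recompute the dashboard's calculated rows from the raw inputs."""
    total = sum(by_type.values())  # assumed: type breakdown covers every test
    not_ready = total - ready
    # Even split across machines; truncate to whole seconds for display.
    per_machine = total_machine_time / machines
    return {
        "goal_delta": created_this_week - weekly_goal,
        "total_in_production": total,
        "not_ready": not_ready,
        "ready_pct": round(100 * ready / total),
        "not_ready_pct": round(100 * not_ready / total),
        # The reasons should account for every not-ready test.
        "sum_check": sum(not_ready_reasons.values()),
        "single_machine_run_time": str(timedelta(seconds=int(per_machine.total_seconds()))),
    }
```

Because the per-machine figure assumes a perfectly even split, it is a best case: when test durations differ, the busiest machine will usually finish somewhat later.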