I recently came back from the Software Testing & Evaluation Summit in Washington, DC, hosted by the National Defense Industrial Association. The objective of the workshop is to recommend policy and guidance changes to the Defense enterprise that improve the practice and productivity of software testing and evaluation (T&E) in Defense acquisition.

So, what do I have to do with the Defense enterprise? One feature of the workshop is that it invites software testing experts from both commercial industry and academia to share state-of-the-practice strategies, processes, and testing methods. I was honored when the Director of Defense Research and Engineering asked me to deliver a keynote as an industry-recognized subject matter expert on software testing, test automation, and evaluation from the commercial sector.

What I am about to share, however, is not what I said at the conference; it is my takeaways from the summit. Although I was not surprised, I was intrigued that, while we have made progress, much work remains to overcome the four software testing challenges we have been fighting for the last three decades:

- The software testing profession is a choice, not a chance.

- Testing is not free! Proper testing requires proper financing.

- Test automation is not about the technology or tool; it is about the methodology or the effective application of technology.

- Testing transparency is essential to educate and get executive support, hence funding.

As basic as these challenges are, overcoming them requires sizable effort beyond what software testing folks like ourselves have been doing and advocating. It will take understanding and support from the business side, particularly from executives with funding and budgeting authority. Let me address each of these challenges in turn.

1. The software testing profession is a choice, not a chance

We have made a lot of progress over the years in bringing the importance of software testing to the forefront. I hope it is common knowledge today that a good test engineer must possess three distinct types of expertise: software testing and automation, IT (including programming), and domain expertise in the application under test (AUT). Along with that expertise, strong communication, critical thinking, creativity, and leadership skills are equally important. So choosing software testing as a career should be a conscious decision, not something taken up by chance. I am seeing this pattern of deliberate choice emerge in the Defense industry. I have also experienced it in Asia: since opening our testing center in Vietnam five years ago, I've had the opportunity to reapply some of the lessons learned in the States. Here are some of the things we do in Vietnam to encourage software testing as a career choice.

- Comparable pay scale: We pay our test engineers competitively with our software engineers. In some cases, we also aggressively reward good communication and leadership skills in our test engineers.

- Higher job requirements: Our criteria for entering the software testing profession are high and are based on the expertise and skills a good test engineer needs. We will not hire people who really want to be programmers and see software testing as a transitional job.

- Higher demand for test efficiency: We pay close attention to people who think not only about good testing but also about getting better testing results, both qualitatively and quantitatively.

- Better funding for, incentives for, and higher expectations of academia to produce quality software testing programs for undergraduates and graduates: We work closely with universities to help them deliver high-standard software testing curricula and teaching materials, and we provide funding and scholarship programs that encourage them to produce better-prepared undergraduates and graduates for the software testing industry.

2. Testing is not free! Proper testing requires proper financing

Currently in the Defense industry, software testing is not a “called-out” requirement in the RFP. The assumption is that testing will cost about 1-2% of development, which makes it essentially free. Of course, the industry is trying to change all that. Although the advancement of software testing in the Defense industry lags behind the commercial industry, I applaud them for recognizing the importance of software testing and evaluation and for creating a forum that brings business and technical folks from both sides of the aisle (the buyers, meaning the government, and the sellers, meaning industry and service providers) together for productive dialogue about change. I have not seen those of us in the commercial sector (myself included) organize any forum to engage business executives in that kind of dialogue. We should learn from the Defense industry.

Furthermore, I have done some research on this topic (and published the results in my book, Global Software Test Automation, HappyAbout, 2006), and the estimated range of expenditures for software testing is 20-40% of the product development budget! Therefore, software testing needs proper financing as a separate line item from development. There are several reasons for this:

- Software testing provides different services than development does.

- As indicated above, it requires a relatively large share of financial resources.

- The separation ensures a balance of power, enabling the testing team to effectively interface with the programming team as equals.

- It prevents testing from being shortchanged when the product development effort goes over budget or falls behind schedule. When testing is shortchanged, product quality is shortchanged. The cycle tends to repeat and worsen, forcing the release of low-to-marginal-quality products that ultimately hurt sales and reputation.

- Releasing a software product with bugs because of inadequate testing funding has hidden costs. Rushing to fix bugs after the product is in customers' hands means resources go to keeping customers happy instead of developing new features for the next release.

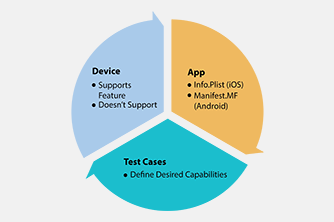

3. Test automation is not about the technology or tool; it is about the methodology or the effective application of technology

More often than not, when there is a test automation discussion, the first question being asked is “Which tool do you use?” I humbly submit that the tool is not the first thing that you should worry about.

I strongly believe that to be effective and rewarding, your automation must be high volume. In fact, I believe that if your automation coverage is less than 50% of the test cases that should be automated, you should not do it. (I will give a keynote on this subject at QA Test ’09 in Spain in October and will discuss it further upon my return.) High-volume automation is method-dependent (of course, you still need technology to support the methodology, but technology is not the first consideration), so the methodology you choose should focus on optimizing the following four drivers:

- Maintainability: handling a high rate of change

- Reusability: the ability to reuse common test components without having to program

- Scalability: the ability to automate a high volume of tests through reusability (and team-based cost efficiency)

- Visibility or transparency (see #4 for more on transparency): tests are auditable by management and non-programming staff, and productivity is structurally measurable

All of these drivers lead to cost efficiency, productivity, and manageability (I’ve discussed this in my post “Four Drivers for Delivering Software Test Automation Success”).
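The 50% rule of thumb above can be expressed as a simple check. This is a minimal Python sketch, assuming you can count how many test cases are designated "should be automated" and how many of those actually are; the function names and the choice to treat exactly 50% as passing are my own illustrative assumptions, not a standard metric.

```python
def automation_coverage(automated: int, should_be_automated: int) -> float:
    """Fraction of should-be-automated test cases that are actually automated."""
    if should_be_automated <= 0:
        raise ValueError("should_be_automated must be positive")
    if not 0 <= automated <= should_be_automated:
        raise ValueError("automated must be between 0 and should_be_automated")
    return automated / should_be_automated


def meets_volume_threshold(automated: int, should_be_automated: int,
                           threshold: float = 0.5) -> bool:
    """True if coverage reaches the high-volume threshold (50% by default)."""
    return automation_coverage(automated, should_be_automated) >= threshold


if __name__ == "__main__":
    # Hypothetical suite: 1,200 test cases flagged as candidates for automation.
    for automated in (300, 600, 900):
        cov = automation_coverage(automated, 1200)
        print(f"{automated}/1200 automated -> {cov:.0%} coverage, "
              f"meets 50% threshold: {meets_volume_threshold(automated, 1200)}")
```

The hard part in practice is not the arithmetic but agreeing on which test cases "should be automated" in the first place, which ties back to the terminology problem discussed under #4.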

4. Testing transparency is essential to educate and get executive support, hence funding

Why do we need better transparency into quality and testing effectiveness? Because once proper funding is allocated, business people need to see ROI (Return on Investment) or ROE (Return on Expense), and technical managers want to see results and efficiency improvements, which is what we testing people want in the first place.
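To make the ROI argument concrete, here is a minimal Python sketch using the standard formula ROI = (benefit - cost) / cost. The class name `QAInvestment` and the cost and benefit figures are hypothetical placeholders, not data from the summit or from my research; in practice, "benefit" might be estimated from avoided post-release fix costs and reclaimed engineering time.

```python
from dataclasses import dataclass


@dataclass
class QAInvestment:
    """Hypothetical testing figures for one release cycle, in one currency unit."""
    cost: float     # what was spent on testing (people, tools, environments)
    benefit: float  # estimated value returned, e.g. avoided post-release fix costs

    def roi(self) -> float:
        """Classic ROI: net gain divided by cost."""
        if self.cost <= 0:
            raise ValueError("cost must be positive")
        return (self.benefit - self.cost) / self.cost


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    investment = QAInvestment(cost=200_000, benefit=350_000)
    print(f"ROI: {investment.roi():.0%}")
```

The formula itself is trivial; what executives need from testing transparency is credible numbers to feed into it.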

I believe that testing performs several functions: for the company or stakeholders, for the product development effort, and for executives. Testing is a service to the company, helping it produce and release higher-quality software. Testing is also a service to the development team, helping it produce higher-quality code. And for the management team, testing should be an information service that provides transparency into software product quality to enable more effective management. Transparency also lets us assess some of the key internal values achieved through effective testing, which include:

- Confidence in consistency and dependability

- Ability to spend more time on new development, less time on maintenance

- Effective utilization of resources and budget

- Time and money left over that can either flow to the bottom line or be used to increase productivity

- Elimination of surprises

Last but not least, to improve transparency, we should also pay attention to the consistency of commonly used terminology. In today's state of the practice, we still have many definitions for the same term and, to make matters worse, the definitions are not quantifiable. For example, what is a test case? How do you size it?

In summary, what I learned from this summit is that we need to keep sharpening our testing expertise and skills and keep challenging ourselves, not only to become better test engineers but also to contribute to the advancement of software testing as a whole. Software testing is best advanced by educating, advocating, and evangelizing to business executives and policy makers, work that falls to software testing folks in industry and academia alike. It will get better!
