How lagging automotive design principles adversely affect final products.

Cars integrate more software with every model year. The enormous touch screen Tesla introduced in its flagship Model S a few years ago seemed unrivaled at the time. Today, screens of this size are not only commonplace in vehicles such as the Toyota Prius and Volvo XC40, they're almost expected.

Part of the reason is that cars are becoming a second home for U.S. consumers. CNBC reports that the average U.S. commuter spends over 200 hours a year commuting, the equivalent of about 8.33 days. As people spend more time in their vehicles, the list of conveniences and features they want grows. For instance, the backup camera, once a luxury installed only on the highest-end cars, has been required on new vehicles since May 2018. Other conveniences such as navigation, and safety features such as lane keep assist, are installed to aid the driver, but some of these aids are now becoming hindrances.

In this article, "UI testing" refers to testing the functionality of a system, and "usability testing" refers to evaluating how easy the system is to use.

The Study: Increased Distraction

A study by the University of Utah in partnership with AAA found that the complexity of in-vehicle touch screen interfaces significantly increased driver distraction: across the infotainment systems of the 30 vehicles tested, users needed more than 40 seconds to program the navigation system. More importantly, these systems required drivers to take their attention off the road. With 9 Americans dying every day in crashes caused by distracted driving, this raises the question: what kind of usability testing are automakers conducting on their vehicles' software before it goes to market?

The aforementioned study analyzed 257 drivers between the ages of 21 and 70 across nine infotainment systems. Connected to eye-tracking cameras and other sensors, subjects were given six tasks to perform with the infotainment system while driving. The results were not promising. Even with the least distracting systems tested, drivers remained distracted for more than 15 seconds after completing a task. Distraction was rated high even when drivers used their phone's voice assistant (Apple's Siri or Microsoft's Cortana). A driver's hands may stay on the wheel, but their mind is not on the road. Voice command systems vary widely from manufacturer to manufacturer, yet across the board they fall short of the speed and accuracy of a human listener. In fact, the highest distraction levels reported in the study came from hands-free systems.

The Problem: UI/UX Lags Behind

Why do these systems perform so poorly? The problem appears to lie in the whole process behind UI/UX design in the automotive industry. One anonymous Reddit user who worked in automotive UI/UX design claimed they designed for vehicles three years out, meaning that in 2015 they were designing for 2018 model-year vehicles. These designers were also not allowed to ride in, or even see, the vehicles before designing their systems, for fear of "stealing ideas." Automotive UI/UX designers are essentially designing blind and hoping for the best! The automotive production process is also long and unwieldy: model refreshes take around 3 years, and full redesigns or new models take 5 to 7 years. As a result, these software systems are tested primarily on simulators rather than in the actual vehicle.

Simulators have their benefits, but they do not let testers see how the system under test (here, the infotainment system) interacts with the rest of the actual car. The software may work perfectly, with no bugs or errors, but how well does it function when the driver cannot give it full attention? The main question is: how usable is this system in a real-world setting?

The Impact: Reevaluate Test Strategy

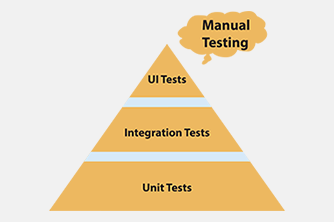

Some of the worst-ranked infotainment systems were in cars such as the Tesla Model S and the Audi Q7, both of which start at over $53,000. Consumers are paying higher prices for less intuitive systems that can increase their risk of a distracted driving accident. Again, we're talking about usability and ease of use here: what good is an 11-inch screen with every bell and whistle if it leads to an accident? This calls for automakers to reevaluate their test strategy and overall test design for the user interface. UI testing matters because it evaluates an application from the user's point of view; the catch is that it happens late in the testing life cycle. Keep that fact in mind, because it will come up again.

Our anonymous automotive UI/UX designer also noted that if a software deadline could not be met for the 2017 model year, the feature would simply be pushed to the 2018 model year. The 2016 Honda Civic illustrates the pattern: Honda removed the volume knob from the infotainment system and replaced it with a less intuitive slider, and only after consumer outrage did the knob return in later model years. Now, back to UI testing happening late in the game: if UI testing uncovers an issue, shouldn't it be addressed then, rather than pushed off for an entire year?

These delays affect quality. In software development, quality means delivering an application with the functionality and ease of use to meet a customer's needs. Shift-left testing, a newer approach, tries to build quality into the product earlier, saving time and cost by finding defects sooner. It can also seem to imply that UI testing is unimportant… but it is important! Unit and interface tests can only verify the code that exists. They cannot detect missing functionality or evaluate ease of use. That matters because the user interface is how the user interacts with the system.

The Solution: Universality

Automakers are now beginning to integrate universal software solutions into their own systems, namely Apple CarPlay and Android Auto. These programs bring the driver's mobile device into the infotainment system using displays the user already recognizes: the apps look much as they do on the driver's phone. The term for this?

Universality. No matter which vehicle a driver gets into, Apple CarPlay or Android Auto looks the same. This streamlines phone integration, music, and navigation. Rather than forcing users to learn an entirely new system, it lets them draw on what they already know. An informed driver is a less distracted driver, and a less distracted driver is a safer driver. Rather than trying to differentiate themselves in every possible way, perhaps automakers should work together to make an easier and safer product.

The Future?

We covered a few things in this article, from deadly user interfaces to the slow, problematic production process for cars. While we can't expect drastic changes in the industry right away, Moore's Law (the observation that the number of transistors on a chip doubles roughly every two years) suggests the future may look different. As computers and software grow more capable, developers will need to pay closer attention to the design of their systems and how humans interact with them. More importantly, testers will need to focus not only on functionality but also on distraction, safety, decision speed, and haptics and multiple input methods.
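As a rough illustration of what an automated distraction check might look like, here is a minimal Python sketch. It assumes eye-tracking data from a test drive has already been reduced to a list of off-road glance durations per task. The `TaskTrial` class, the `evaluate` function, and both thresholds (2.0 s per glance, 12.0 s total per task) are hypothetical placeholders chosen for this example, not values from the study discussed above.

```python
from dataclasses import dataclass, field

# Hypothetical pass/fail thresholds (assumptions for this sketch, not from the study).
MAX_SINGLE_GLANCE_S = 2.0         # longest acceptable single off-road glance, seconds
MAX_TOTAL_EYES_OFF_ROAD_S = 12.0  # longest acceptable total off-road time per task, seconds


@dataclass
class TaskTrial:
    """One driver performing one infotainment task (hypothetical structure)."""
    task: str
    glances_s: list[float] = field(default_factory=list)  # off-road glance durations, seconds

    @property
    def total_eyes_off_road_s(self) -> float:
        # Total time the driver's eyes were off the road during the task.
        return sum(self.glances_s)


def evaluate(trial: TaskTrial) -> list[str]:
    """Return a list of failure reasons; an empty list means the trial passed."""
    failures: list[str] = []
    # Flag any individual glance that exceeds the single-glance limit.
    long_glances = [g for g in trial.glances_s if g > MAX_SINGLE_GLANCE_S]
    if long_glances:
        failures.append(
            f"{len(long_glances)} glance(s) longer than {MAX_SINGLE_GLANCE_S} s"
        )
    # Flag the task if cumulative eyes-off-road time exceeds the total limit.
    if trial.total_eyes_off_road_s > MAX_TOTAL_EYES_OFF_ROAD_S:
        failures.append(
            f"total eyes-off-road time {trial.total_eyes_off_road_s:.1f} s "
            f"exceeds {MAX_TOTAL_EYES_OFF_ROAD_S} s"
        )
    return failures


if __name__ == "__main__":
    trials = [
        TaskTrial("adjust volume", [0.8, 1.1]),
        TaskTrial("enter destination", [1.5, 1.8, 2.4, 1.9, 2.2, 1.7, 1.6]),
    ]
    for t in trials:
        problems = evaluate(t)
        status = "PASS" if not problems else "FAIL: " + "; ".join(problems)
        print(f"{t.task}: {status}")
```

A check like this only scores data that has already been collected; gathering representative glance data from real drivers in real vehicles is the harder part, and no amount of code replaces it.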