What you need to know in order to have effective and reliable Test Automation for your mobile apps

I realized that Test Automation interfaces are pivotal to effective and efficient Test Automation, yet very few people trying to test their mobile apps seemed to know how their automated tests connected with the apps they wanted to test. Even if you use commercial products that claim to remove the need to write Test Automation code you may find the information enlightening, at least to understand the mechanics and some of the limitations of how these products ‘work’.

This article provides an overview of the topic of Test Automation interfaces, starting from stuff we need to know, then things we need to learn & understand, then the concluding section covers factors worth considering in order to have effective and reliable Test Automation for your mobile apps. I have included various additional topics that don’t fit into the know/understand/consider sections.

Like much of my material this is a work-in-progress. Comments, suggestions and feedback are all welcome to help me improve the utility and accuracy of this material.

The Many Stages of Test Automation

There are at least 3 key stages of Test Automation: Discovery, Design, and Execution. Each is important albeit execution may be the only one that many people outside testing recognize.

Discovery Stage

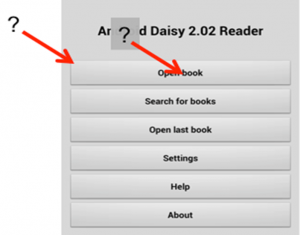

We need to discover ways our automated tests can interface with the target app.

If we can read and understand the source code perhaps there’s little to discover about the app. Conversely, if we’re less technically minded or when the source code isn’t available, discovery can be more challenging and time-consuming. Furthermore, we need to identify trustworthy identifiers that will remain useable for the Test Automation to survive new releases of the app. Dynamically assigned IDs that vary from release to release, sometimes unpredictably, can be the start of nightmares where the Test Automation needs patching and repairing virtually constantly.

Some Test Automation software tries to automatically detect these interfaces; for example, WebDriver has a ‘PageFactory’ http://code.google.com/p/selenium/wiki/PageFactory which matches a parameter with the name or ID of a web element. There’s a good introduction to how GorillaLogic’s MonkeyTalk identifies elements in the August 2012 issue of Test Magazine.

Alternatively we can use various tools to help discover how to interface with elements in the mobile app. Tools include FireBug for web apps, XCode’s ‘Instruments’ for iOS, Calabash’s Interactive Ruby Shell for iOS, etc.

Some Test Automation tools include a feature known as ‘record & playback.’ The recorder needs to capture a de-facto Test Automation interface and record the elements the user interacts with. They typically also record the values entered by the user into a single test script which can then be replayed many times to repeat the actions of the user – the ‘tester’. For the purposes of this article I’ll leave aside the discussion of the validity of ‘record & playback’ Test Automation as a Test Automation strategy; however, if we can read and understand the recorded script we can use it to help identify and discover ways to interface with the app we want to test.

Design and Execution Stages

During the design stage, we create the tests and write the Test Automation (interface) code, which is a precursor to running, or executing, the tests. Design includes picking the most robust and appropriate Test Automation interface e.g. whether to use coordinates, ordinals, or labels to identify specific elements; and whether to add descriptive identifiers to elements that don’t have anything suitable; etc.

Design also includes deciding what information we want to capture when the tests are executed (run), and making sure that information will be captured so it can be analysed later. Sometimes we may want to analyse the data much later on e.g. many months or even several years later, so good archiving techniques may also form part of the grand plan.

Finally, we need to execute, or run, the automated tests. This will involve some preparation for instance to configure the test environment, to synchronize the clocks across machines, etc. Remember to have more than enough storage for the logs and other outputs from the testing to be recorded safely.

Know the Interfaces Used by a Mobile App

A mobile phone may include lots of different interfaces, which users might choose to use as part of using a mobile app. They can be grouped into two broad groups: HCI and Sensor interfaces depending on whether humans interact with the interface, or not. HCI includes:

- Touch: where some devices support multi-touch, pressure, contact areas, and edge detection e.g. is the person also touching the edge of the screen? For multi-touch, some phones detect more simultaneous touches than others e.g. some may detect 5 and others 10 concurrent contacts. Imagine a piano app on a tablet device – would the app receive inputs for all 10 fingers and thumbs, or only one hand’s worth?

- Proximity: proximity can be used to change the sensitivity of the screen, for instance a phone app may disable the on-screen keypad when the phone is close to a person’s cheek.

- Movement: especially when playing games, motion can even be the primary interface.

- Sound: including voice input e.g. for Voice Search; or apps can ‘listen’ to music to name the artist and the track, etc.

- Light: for instance for face detection to unlock the phone, or to help a visually impaired user to find the exit in a dark room into a lighted room.

- Controls: these include D-pads, buttons, etc.

Sensor Interfaces include: accelerometers, magnetism, GPS, Orientation, Camera, NFC, etc.

You may have already noticed some overlap between these sensors. That’s OK; the goal is to identify the range of sensors and what they’re being used for in terms of the app you want to test with automated tests.

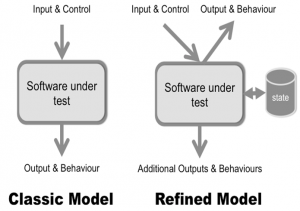

Block Diagrams

A classic model of a system under test shows inputs and control which are processed by the software under test, which then provides output(s) and may exhibit additional ‘behaviours.’ In practice, the software is likely to include additional outputs and behaviours beyond those we initially realise.

Some of these additional outputs and behaviours may be useful from a Test Automation perspective. For instance, one Test Automation team wrote code to process and parse the Android system log in their automated tests. They used the Robotium API method waitForLogMessage(String logMessage). The logs include a record of each network request and response for debugging; these records are written by the application code when it is run in a particular configuration. The log messages are a proxy for actually capturing the network requests, which can also be done programmatically.
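To make the idea of waiting for a log message concrete, here is a minimal Python sketch. It is not Robotium's implementation; the function name `wait_for_log_message` and the `read_lines` callable are hypothetical, and I assume the test can obtain the log lines captured so far in some way.

```python
import time
from typing import Callable, Iterable


def wait_for_log_message(
    read_lines: Callable[[], Iterable[str]],
    message: str,
    timeout: float = 5.0,
    poll_interval: float = 0.1,
) -> bool:
    """Poll a log source until a line contains `message` or the timeout expires.

    `read_lines` is a hypothetical callable returning the log lines captured
    so far, for example by reading a file or a dump of the device log.
    """
    deadline = time.monotonic() + timeout
    while True:
        if any(message in line for line in read_lines()):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval)
```

A test could then assert that a particular request was logged before it continues, rather than sleeping for a fixed period.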

Know Your Test Automation Interface

What information does the Test Automation interface provide? E.g. can it return the types of information we’re interested in? Can it return compound information? What about error reporting, and error recovery?

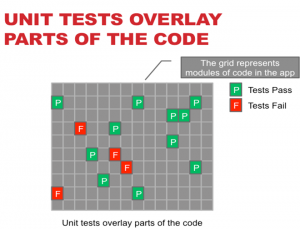

Unit tests

We can classify our Test Automation in various ways. Unit tests live alongside the code they test. They effectively overlay the code they will test, and they are compiled together with the code into a module that is then processed by the unit-testing framework. They do not test the application.

APIs

The app may include APIs. These APIs may be an intrinsic part of the application, for instance to enable other apps to interact with it. Or the API may be added explicitly to facilitate Test Automation. Sometimes the API may be presented as an embedded web server. When APIs are used as a Test Automation interface, they should be easy to write automated tests for as they’re inherently intended to be called by other software.

Structural

Structural interfaces represent and reflect structures in the software being tested, for instance the DOM of HTML5 apps. They support filtering and selection based on the structure or hierarchy of the presentation of the app.

Tellurium has an interesting object location algorithm called Santa and separates the object mapping from the test scripts.

GUI

GUI test interfaces match images, possibly within a larger image, against the visual representation of the app. GUI Test Automation is excellent for pixel-perfect matching; however, there are many legitimate reasons why images may differ during tests, for instance if they include the system clock on screen. GUI test interfaces are sometimes the Test Automation interface of last resort, when no other mechanism is viable.

There are various techniques we can use in order to cope with mismatches in the images we consider irrelevant. These include searching for sub-images within a larger image, using fuzzy matching (e.g. where the test accepts 2% difference in the pixels), and ignoring some sections of the screenshot.
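Two of these techniques, searching for a sub-image and ignoring sections of a screenshot, can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: images are plain lists of rows of integers, the sub-image search is exact rather than fuzzy, and the names `find_subimage` and `matches_ignoring` are hypothetical.

```python
from typing import List, Optional, Tuple

Image = List[List[int]]  # rows of greyscale pixel values, for simplicity
Region = Tuple[int, int, int, int]  # left, top, right, bottom (right/bottom exclusive)


def find_subimage(haystack: Image, needle: Image) -> Optional[Tuple[int, int]]:
    """Return (x, y) of the first exact occurrence of needle in haystack, else None."""
    needle_h, needle_w = len(needle), len(needle[0])
    for y in range(len(haystack) - needle_h + 1):
        for x in range(len(haystack[0]) - needle_w + 1):
            if all(
                haystack[y + dy][x:x + needle_w] == needle[dy]
                for dy in range(needle_h)
            ):
                return (x, y)
    return None


def matches_ignoring(actual: Image, expected: Image, ignored: List[Region]) -> bool:
    """Compare two same-sized images, skipping pixels inside any ignored region."""

    def is_ignored(x: int, y: int) -> bool:
        return any(
            left <= x < right and top <= y < bottom
            for (left, top, right, bottom) in ignored
        )

    return all(
        actual[y][x] == expected[y][x]
        for y in range(len(expected))
        for x in range(len(expected[0]))
        if not is_ignored(x, y)
    )
```

An ignored region is a natural fit for something like the system clock, where we know in advance that the pixels will differ from run to run.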

Characteristics of Test Automation Tools

One of the key questions to understand is how will your chosen Test Automation interface detect various outputs and behaviors.

The internal mechanics of the Test Automation code may also be relevant. For instance WebDriver would need to send lots of individual requests to collect all the information on an element, rather than asking for the set of attributes in a single API call. Because the state of the element can change, even between the various requests, the final results may be inconsistent or inaccurate in some circumstances.

Know Your App

Ultimately, your app is particular to you and it’s worth knowing your app in some depth if you want to create effective Test Automation.

- Decide what aspects you want to focus on testing, checking etc.

- Realise many other facets of the app’s appearance and behaviour may be ignored by your automated tests.

Beware of ‘Wooden Testing’: testing that is limited to 1-dimensional testing of the basic functionality to show ‘something’ works, and that disregards the realistic aspects of the software’s behaviour, errors, timing, events, etc. At best, your automated tests will be incomplete, leaving much of the app’s behaviour and qualities untested. Worse, the team may be lulled into a false sense of security, like the French were when they’d constructed the Maginot Line before World War II, thinking your automated tests will protect the project while they’re largely irrelevant and outflanked by real-world events that expose gaping problems in your app.

So, let’s think about which aspects we want to focus on testing or checking, for instance – is the performance of UI responses important? If so, will your tests be able to measure the performance sufficiently accurately? In addition, how will you implement the measurements and check they are accurate?

Try to consciously identify elements your tests will not be checking, so you’re able to recognize the types of bugs your Test Automation will not capture (because the tests are ignoring those facets of the app). If we want to test these facets, we can include some other forms of testing explicitly for these aspects e.g. interactive testing, usability testing, etc. I’ve yet to meet a team, project, or company who use 100% automated testing for their mobile apps, so don’t be embarrassed by having some interactive testing (otherwise known as manual testing); simply learn to do it well.

What parts of the app change?

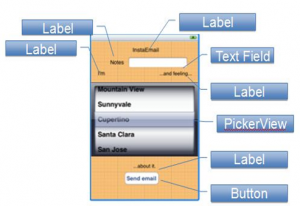

- Identify the elements on each screen in the UI

- Identify the transitions from one screen to another

- Understand what things or events cause changes in the app’s behaviour or appearance

Consider ways to:

- Launch screens independently.

- Use mock objects, test servers, etc. to reduce the effects of ‘other’ code on the software you want to test. Lighter-weight (fly/welter weight?) Test Automation.

Related topics: outside the scope of this material.

- Test below (or without) the GUI. While this is a separate topic, sometimes you’ll get excellent results with such testing. It can augment GUI Test Automation. Effort needed to maintain your tests.

- Ways to prepare the environment and to launch the tests.

- Ways to structure your tests to reduce maintenance effort. Note: it’s worth covering aspects of how a Test Automation interface might be structured to reduce the maintenance effort. E.g., Calabash currently has differences between the APIs (and the step definitions) for Android and iOS.

Know Your Test Data

The test data can often affect what’s shown in the UI and how the app behaves. Some examples include:

- The account used by the tests to log in

- Images e.g. returned in search results, picture galleries, etc.

- Ads, particularly those that target aspects of the user’s location, behavior, etc.

Unless we ‘know’ or can predict exactly what data to expect, our tests may be adversely affected. We can choose from several options e.g. to ignore the contents of various fields and areas of the UI, to inject known test data, to use heuristics to decide whether the results are acceptable, etc.
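As a small illustration of the first option, ignoring the contents of some fields, here is a hedged Python sketch. It assumes the test can read the screen as a dictionary of field names and values; the function `differences` and the field names in the usage note are hypothetical.

```python
from typing import Any, Dict, Iterable, Tuple


def differences(
    actual: Dict[str, Any],
    expected: Dict[str, Any],
    ignore: Iterable[str] = (),
) -> Dict[str, Tuple[Any, Any]]:
    """Return {field: (expected, actual)} for fields that differ, skipping ignored ones."""
    skipped = set(ignore)
    fields = (set(actual) | set(expected)) - skipped
    return {
        name: (expected.get(name), actual.get(name))
        for name in sorted(fields)
        if expected.get(name) != actual.get(name)
    }
```

For example, `differences(actual, expected, ignore=["ad_banner"])` would report a changed title but stay silent about whichever advert happened to be served.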

Tests that depend on production data are particularly prone to challenge the behaviour of our tests. I’ve encountered image-based Test Automation that’s had to ignore virtually all aspects of the returned results because the tests couldn’t realistically cope with the variety of the content returned by the production servers.

Know Your Platform & Know Your Development Tools

Each mobile platform is different and offers distinct ways to interface with an application from a Test Automation perspective. Some incorporate ‘Accessibility’ capabilities, which are sufficiently potent to form the primary interface for Test Automation; iOS is the most potent in this area. Others rely on having access to the source code for an app, e.g. Android.

Discover and learn which interfaces are already available for the platform you want to write automated tests for.

For Android these include:

- Android Instrumentation (primarily uses component type or resource ID)

- Android MonkeyRunner

- Image based matching

- Firing events, actions, intents, etc.

Not used:

- Accessibility features

For iOS these include:

- The Accessibility Labels

- UIScript & UIAutomation

- The interactive recorder, which is part of the Instruments developer tool provided with XCode.

Know the Devices

If you run the same tests on the same device with the same date and the same software installed you might expect the screenshots of each screen would have consistent colors, text, etc. However, we discovered a number of anomalies for some Android devices. Sometimes the size of the text for a Dialog’s title element was larger in one test run and smaller in another. Also, there were numerous differences in the colors of background pixels on the screen. There were no known reasons for the changes. However, the changes had the effect of causing screenshot comparisons to fail. This client wanted the UI to be pixel perfect, so the differences in the screenshots were relevant and meant the team had to spend significant time reviewing the differences to decide if they were material and enough to reject a build of the app.

We’re still researching whether there are patterns that trigger or cause the differences. In the meantime, the matching process includes 2 tolerances for differences:

- Per pixel tolerance of the color values

- Per image tolerance of the percentage of pixels that don’t match.
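The two tolerances can be combined as in the following Python sketch. It is an illustration rather than the matching code used on that project: images are assumed to be flat lists of (red, green, blue) tuples, and the default values of 8 per channel and 2% per image are placeholders I picked for the example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def pixels_match(a: Pixel, b: Pixel, channel_tolerance: int) -> bool:
    """True when every colour channel differs by no more than the tolerance."""
    return all(abs(x - y) <= channel_tolerance for x, y in zip(a, b))


def images_match(
    actual: List[Pixel],
    expected: List[Pixel],
    channel_tolerance: int = 8,          # assumed value, tune per project
    max_mismatch_percent: float = 2.0,   # assumed value, tune per project
) -> bool:
    """Apply a per-pixel colour tolerance, then a per-image mismatch budget."""
    if len(actual) != len(expected):
        return False  # different sizes are treated as a mismatch
    if not expected:
        return True
    mismatches = sum(
        not pixels_match(a, e, channel_tolerance)
        for a, e in zip(actual, expected)
    )
    return 100.0 * mismatches / len(expected) <= max_mismatch_percent
```

Keeping the two tolerances as separate parameters makes it easier to see which one a failing comparison actually exceeded.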

Additional Approaches to Test Automation

Teams can also write specific APIs and interfaces, e.g. to allow remote control of their app. These APIs and interfaces may be shipped as part of the app, disabled, or removed entirely from the apps we intend to ship (the release versions). There are various ways of designing the APIs / interfaces; one of the most popular ways is to embed an HTTP server, effectively a mini web server, as an intrinsic part of the app.
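To show the shape of the embedded HTTP server approach, here is a minimal sketch using Python's standard library. A real mobile app would embed a server written for its own platform; the `/state` path, the `APP_STATE` dictionary and the helper names are all hypothetical and exist only for this illustration.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical application state that the tests want to inspect.
APP_STATE = {"screen": "login", "logged_in": False}


class TestInterfaceHandler(BaseHTTPRequestHandler):
    """Serves a read-only view of the app state at the hypothetical path /state."""

    def do_GET(self):
        if self.path == "/state":
            body = json.dumps(APP_STATE).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def start_test_server() -> HTTPServer:
    """Start the server on a free local port in a background thread."""
    server = HTTPServer(("127.0.0.1", 0), TestInterfaceHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch_state(server: HTTPServer) -> dict:
    """What a test would do: ask the embedded server for the current state."""
    host, port = server.server_address[:2]
    connection = http.client.HTTPConnection(host, port, timeout=5)
    try:
        connection.request("GET", "/state")
        response = connection.getresponse()
        return json.loads(response.read().decode("utf-8"))
    finally:
        connection.close()
```

The same pattern extends to endpoints that trigger actions, although anything that changes state deserves extra care if there is any chance of the interface being left in a release build.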

Some Test Automation frameworks rely on a compiled-in interface, which the development team incorporates into the testable version of their app. These include commercial offerings from Soasta and opensource offerings including Calabash-ios.

Considerations

How many ways?

See how many ways you can find to interact with your app.

Sometimes the challenge is to find a single way to interface your Test Automation code with your app. However, once you’ve found at least one way to connect, it’s worth seeking more ways to interface the Test Automation with the app. You may well discover more reliable and elegant ways to connect them together.

In the domain of Test Automation for web apps, WebDriver is the foremost open source Test Automation tool. It provides the ‘By’ clause, which includes a multitude of ways to interface the tests with the web site or web app. Some choices tend to be more reliable and resilient, e.g. when elements have a unique identifier.

Here’s the current set of ‘by’ options for WebDriver to inspire you:

    class By(object):
        ID = "id"
        XPATH = "xpath"
        LINK_TEXT = "link text"
        PARTIAL_LINK_TEXT = "partial link text"
        NAME = "name"
        TAG_NAME = "tag name"
        CLASS_NAME = "class name"
        CSS_SELECTOR = "css selector"

(found in: py/selenium/webdriver/common/by.py)

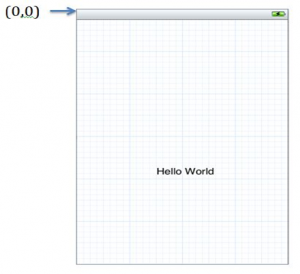

Other options include the location of elements on the screen. The location is generally calculated using a pair of coordinates, the x and y values based on a 2-dimensional grid. The origin (where x is 0 and y is 0) tends to be the top left corner or the bottom left corner, depending on the platform.
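If your tests record coordinates on one platform and replay them on another, the difference in origin matters. The sketch below converts a pixel row between the two conventions; it assumes rows are numbered from 0 to the screen height minus 1, and `flip_y` is a name I made up for the example.

```python
def flip_y(y: int, screen_height: int) -> int:
    """Convert a pixel row between top-left-origin and bottom-left-origin grids.

    Assumes rows are numbered 0..screen_height-1. The same function converts
    in either direction, and the x value is unaffected.
    """
    if not 0 <= y < screen_height:
        raise ValueError("y is outside the screen")
    return screen_height - 1 - y
```

On a screen 480 pixels high, the top row in one convention (0) becomes row 479 in the other, and converting twice returns the original value.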

Maintainability vs. Fragility of Interfaces and the Test Code

Interfaces may be fragile, for instance where tests break when the UI is changed, even marginally.

Efficient Test Automation is more likely when the developers collaborate and facilitate the Test Automation interfaces. They’re more likely to create maintainable test interfaces than anyone else. Ideally the business and project team will recognise the importance of efficient and effective Test Automation. Adding good interfaces will take some time initially; the return on investment comes when subsequent releases need to be tested, provided the tests don’t need lots of maintenance.

Each interface has limitations, beyond which it becomes unreliable or simply broken. For instance, one that’s based on the order of similar elements, e.g. the second EditText field is subject to the order of EditText fields used to create the current UI. If the designers or developers decide to change the order or add additional EditText fields before the one we intended our test would interact with, then our tests may behave in unexpected ways.
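The EditText example can be reproduced with a toy model of the UI. In this Python sketch the UI is just a list of dictionaries in display order; the field ids and the helper names `nth_of_type` and `by_id` are hypothetical.

```python
# Each field has a hypothetical "id" and "type"; the list order is the order
# in which the fields appear in the UI.
old_ui = [
    {"id": "username", "type": "EditText"},
    {"id": "password", "type": "EditText"},
]
new_ui = [
    {"id": "username", "type": "EditText"},
    {"id": "email", "type": "EditText"},  # newly inserted field
    {"id": "password", "type": "EditText"},
]


def nth_of_type(ui, element_type, ordinal):
    """Select by order: the ordinal-th element (1-based) of the given type."""
    matches = [element for element in ui if element["type"] == element_type]
    return matches[ordinal - 1]


def by_id(ui, element_id):
    """Select by identifier, independent of position."""
    return next(element for element in ui if element["id"] == element_id)
```

With the old UI, "the second EditText" is the password field; with the new UI the same selection silently returns the email field, while `by_id(new_ui, "password")` still finds the element the test intended.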

Using positioning and touch areas

Most elements can be described by a set of points, the most common set being two pairs of coordinates describing the top-left and bottom-right corners of a rectangle. When someone touches the screen of their phone anywhere within the rectangle, the UI sends the touch event to that element.

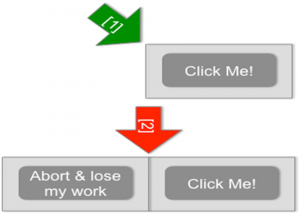

Sometimes the touch area is actually larger than the dimensions of the element. This is intended to make the user interface easier to interact with. In the figure, the arrow labelled [1] shows how the touch area is increased for the ‘Click Me!’ button. In the second example in the figure, the touch areas abut each other, as indicated by the arrow labelled [2], so a user might inadvertently select the ‘Abort & lose my work’ button when they intended to press ‘Click Me!’.

Developers may be able to explicitly specify the size of the touch area, or the platform’s user-interface may even do so automatically. So, we may want to test the touch area as part of our automated tests.
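One way an automated test might check touch areas is to model them as rectangles and look for overlaps. The following Python sketch assumes the test can obtain each element's visible bounds and the margin by which the touch area is enlarged; the `Rect` class and the numbers in the usage note are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    left: int
    top: int
    right: int   # exclusive
    bottom: int  # exclusive

    def expanded(self, margin: int) -> "Rect":
        """Return the touch area: the visible bounds grown by margin on every side."""
        return Rect(
            self.left - margin,
            self.top - margin,
            self.right + margin,
            self.bottom + margin,
        )

    def overlaps(self, other: "Rect") -> bool:
        """True when the two rectangles share at least one pixel."""
        return (
            self.left < other.right
            and other.left < self.right
            and self.top < other.bottom
            and other.top < self.bottom
        )
```

For instance, two buttons at `Rect(10, 10, 110, 50)` and `Rect(10, 60, 110, 100)` do not overlap visually, but their touch areas do once each is expanded by 8 pixels.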

Also, if elements move slightly in the UI e.g. because the designer wants them moving slightly to the right, it’s worth checking whether the current coordinates are still suitable. If we’d used the top-left corner as our touch coordinate then our tests may start failing mysteriously. Whereas if we’d used the center of the rectangle, they’d still work, even though they’re no longer in the absolute center of the new location of the element. The following image indicates two choices of where to locate touch events: near the top-left of the button, or near the center.
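The difference between the two choices of touch coordinate is easy to demonstrate. This Python sketch uses plain tuples of (left, top, right, bottom) for the element bounds; the helper names and the 5-pixel shift in the usage note are assumptions made for the example.

```python
def contains(rect, point):
    """True when point (x, y) lies inside rect (left, top, right, bottom)."""
    left, top, right, bottom = rect
    x, y = point
    return left <= x < right and top <= y < bottom


def center(rect):
    """Return the centre point of rect, rounded down to whole pixels."""
    left, top, right, bottom = rect
    return ((left + right) // 2, (top + bottom) // 2)


def top_left(rect):
    """Return the top-left corner of rect."""
    return (rect[0], rect[1])
```

If a button at `(20, 40, 120, 80)` moves 5 pixels to the right, a touch recorded at its old top-left corner now misses the button, whereas a touch recorded at its old centre still lands inside it.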

Designing Test Automation Interfaces

Some of us will be fortunate and involved in the design of Test Automation interfaces. Here are some suggestions for what to consider.

Choosing good abstractions

A Test Automation interface needs to communicate with people as well as with computers. The people include the programmers, testers, and others on the team. A good abstraction can make the interface easier to comprehend and use, for instance by naming methods and parameters so they reflect the domain objects.
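As a rough sketch of what naming things after the domain might look like, consider wrapping the low-level calls for a login screen. Everything here is hypothetical: `RecordingDriver` is a stand-in that only records calls, and the element ids are invented for the example.

```python
class RecordingDriver:
    """Hypothetical stand-in for a real automation driver; it only records calls."""

    def __init__(self):
        self.actions = []

    def type_text(self, element_id, text):
        self.actions.append(("type", element_id, text))

    def tap(self, element_id):
        self.actions.append(("tap", element_id))


class LoginScreen:
    """Domain-level wrapper: tests say what they mean, not which widgets to poke."""

    def __init__(self, driver):
        self._driver = driver

    def log_in(self, username, password):
        self._driver.type_text("username_field", username)
        self._driver.type_text("password_field", password)
        self._driver.tap("login_button")
```

A test that calls `LoginScreen(driver).log_in("alice", "secret")` reads in the language of the app, and if the element ids change only the wrapper needs updating.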

Higher-level interfaces, for instance Cucumber, are intended to be easy for everyone to read what the tests do; yet they can be simple to implement and even easy to use.

Preserving Fidelity

Preserving the fidelity of the app’s appearance and behavior is also important, so the tests can detect changes in the app’s output or behavior. As an example there was a bug reported on Selenium, #2536 where the screenshot does not match the actual viewport for Google’s Chrome web browser, but does for Firefox. The Chrome Driver is reducing the fidelity of the output, somehow, and therefore reduces our confidence in using it when we want to process or use the screenshot.

There may be a trade-off between choosing good abstractions, using higher-level test interfaces, and preserving the fidelity. Rather than me telling you what your trade-off should be, I suggest you discuss the options with the rest of the people involved in the project.

A related area is to provide sufficient capabilities to interact adequately with the app. One of the problems faced by cross platform tools, and tools that provide multiple programmatic interfaces e.g. implementations in several programming languages, is to provide the same level of capabilities for every platform and programming language.

Sometimes there may be technical reasons why some capabilities cannot be provided. If so, document these so the test automator has advance notice rather than assuming all will be well.

Ways to increase flexibility of a Test Automation interface

Interfaces can be designed to allow greater flexibility and resilience to make the interface less brittle. Selections that use names rather than positions can cope with changes in the order of elements. Some selections are intended to be unique, for instance when IDs are used to select web elements. Others filter or match one or more elements: CSS selectors are a good example. XPath selectors can reflect the hierarchy of elements and select some elements within a DOM structure. Named parameters in an API allow the order of elements to vary between the caller and the implementation, and default values for new elements provide some backwards compatibility for older Test Automation code.

Here are examples of each approach:

- IDs e.g. <element id="id">

- CSS e.g. a[href="#"] which matches <a href="#">click me</a>

- XPath e.g. /bookstore/book[1]

- Named parameters e.g. def info(object, spacing=10, collapse=1):
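The backwards compatibility that named parameters and default values provide can be shown with a tiny Python sketch. The `tap` function and its parameters are hypothetical; imagine that `hold_ms` and `retries` were added to the interface after the first tests had been written.

```python
def tap(element_id, *, hold_ms=0, retries=1):
    """Hypothetical interface call.

    hold_ms and retries are keyword-only and have defaults, so tests written
    before they existed keep working unchanged.
    """
    return {"element_id": element_id, "hold_ms": hold_ms, "retries": retries}
```

Older test code can still call `tap("ok")`, while newer code can pass `retries=3, hold_ms=500` in whichever order reads best.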

Designers of Test Automation interfaces need to consider how much flexibility is appropriate.

Decide What’s Worth ‘Testing’

Are the colors in the UI worth testing? How about the exact location and order of elements on the screen? And what about the individual images displayed in your picture gallery? You may decide some of these are relevant to your automated testing. If so, we need to find ways to:

- Obtain the information from the app, in the Test Automation code

- Verify the information

For attributes such as colour, obtaining the information and comparing it to the expected value may be straightforward.

Images can be much harder to obtain or compare, and there may be other technical reasons why the information may be hard to match exactly. This is particularly so when using images captured using a camera e.g. from commercial products such as Perfecto Mobile’s

Recognize the no-man’s land and the no-go areas

Some aspects may be impractical to test with the current tools e.g. rapid screen updates may be too fast for some Test Automation tools to detect (reliably). The most obvious examples include video content; also, transitions may be very hard to test robustly or reliably.

Similarly, when the test environment uses production data, the results may be hard to predict sufficiently accurately to determine if things like the search results are ‘correct’.

Sometimes the most practical approach is to err on the side of reliability where the tests wait until the GUI has stabilized e.g. when the web view has finished rendering all the content before trying to interpret the contents of the GUI.
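Waiting for the GUI to stabilize can be approximated by polling until several consecutive snapshots are identical. The Python sketch below assumes the test has some `snapshot` callable (a screenshot hash, a dump of the view hierarchy, and so on); the function name and the default values are my own choices for the example.

```python
import time


def wait_until_stable(snapshot, settle_checks=3, poll_interval=0.1, timeout=10.0):
    """Poll snapshot() until it returns the same value settle_checks times in a row.

    Returns the stable value, or raises TimeoutError if the deadline passes
    first. `snapshot` is a hypothetical callable supplied by the test.
    """
    deadline = time.monotonic() + timeout
    previous = snapshot()
    unchanged = 1
    while unchanged < settle_checks:
        if time.monotonic() >= deadline:
            raise TimeoutError("GUI did not stabilise before the timeout")
        time.sleep(poll_interval)
        current = snapshot()
        unchanged = unchanged + 1 if current == previous else 1
        previous = current
    return previous
```

The trade-off is speed: every test pays at least a couple of polling intervals, so the number of checks and the interval are worth tuning for your app.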

Limitations of the chosen Test Automation interfaces

The dynamic nature of apps, latency of the Test Automation, data volumes, etc. can all adversely affect the validity, reliability and costs of the tests. These factors are all worth considering when we design and implement our automated tests, otherwise we risk the tests generating incorrect results or being undependable as the conditions vary from test run to test run.

Another limitation, particularly when testing mobile apps on actual devices, is dealing with the security defenses provided by the platform.

For iOS, there are no public ways to interact with the preinstalled apps on a device. The only viable alternative is to run tests against the Simulator, using GUI Test Automation tools such as Sikuli.

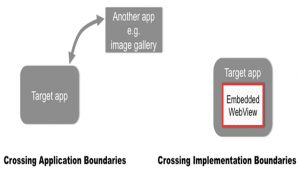

For Android, the primary Test Automation approach uses something called the Instrumentation Test Runner. This relies on the tests being written alongside the code we want to test, a white-box Test Automation technique where identifiers for UI resources may be shared between the app and the Test Automation code. Instrumentation is limited, by design, to interacting with the app under test. However, some apps interact with email clients, the photo gallery, etc., for instance to allow a user to upload a new picture of themselves online. Android Instrumentation is not permitted to interact with the gallery or with the email clients. So the test automator needs to find an alternative Test Automation tool to interact with these apps they didn’t write. For Android they may choose to use MonkeyRunner, which is a GUI Test Automation tool, to interact with these separate apps. Or they can choose to write custom apps to replace the general-purpose email, photo picker, and other apps. The custom apps then provide a way to interact with them programmatically, from the Test Automation code.

The figure above illustrates inter-app and intra-app limitations.

Some apps embed a web browser. Few Test Automation tools can cope with interacting with the embedded web browser, which can cause significant challenges if the tests need to use it e.g. to login to the app.

Remember – the Human to Computer Interface

Ultimately, we want our apps to be useable and enjoyable for our users. Therefore we need to test on-behalf-of people we think may use the app. Remember to consider users who may be less gifted than the gifted programmers and designers; users who may be less adept at using mobile devices. Also consider users who benefit from software they can adapt to suit their needs e.g. who may use a screen reader such as VoiceOver on an iPhone, or TalkBack on Android.

Test Automation can help detect some of the problems, for instance to determine the size and proximity of touch areas that are too small or too close together. Conversely Test Automation may ‘work’ but ignore problems that make an app unusable for humans, for instance where white text is displayed on a white background.

Good luck with your Test Automation. Let me know about your experiences.

Glossary:

Term – Description.

App – Abbreviation for ‘Application’.

Application – Installable software intended to be visible and used directly by users. Compare with a Service or other background software, which don’t have a visible user interface.

Platform – The operating system of a particular mobile device. Common platforms include: iOS for the iPhone and iPad; Android for Android phones; and BlackBerry OS for BlackBerry phones.

Special Bonus Topic

Things Which can Affect Captured Images

Colour Palettes

Although a device may be able to display 24-bit colours (theoretically allowing roughly 16.7 million distinct colours to be displayed), manufacturers may transform the specified colour slightly to reduce the number of distinct, unique colours that will actually be displayed on the screen. By reducing the number of colours they can increase the performance of rendering the graphics on the display. The trade-off of tweaking the colours was often masked by limitations in the human eye’s ability to detect the changes on the device’s screen.
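To see how such a transformation can shift a colour, here is a sketch that reduces a 24-bit colour to 16 bits and expands it again. I am assuming the common 5-6-5 bit layout purely for illustration; the devices discussed here may have used different conversions.

```python
def to_rgb565(r, g, b):
    """Pack an 8-bit-per-channel colour into 16 bits (5 red, 6 green, 5 blue)."""
    return ((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3)


def from_rgb565(value):
    """Expand a 16-bit colour back to 8 bits per channel by replicating the high bits."""
    r5 = (value >> 11) & 0x1F
    g6 = (value >> 5) & 0x3F
    b5 = value & 0x1F
    return (
        (r5 << 3) | (r5 >> 2),
        (g6 << 2) | (g6 >> 4),
        (b5 << 3) | (b5 >> 2),
    )
```

With this particular conversion the colour (200, 100, 50) comes back as (206, 101, 49): close enough that a person is unlikely to notice, but enough to fail an exact pixel comparison.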

Some mobile devices actually had several conversion processes e.g. one in software and another in hardware. The multiple conversions made it hard for the programmer or designer of an app to know the actual colour that a user would see, unless they tested their app on each combination of device model and platform revision.

Web-safe colours

Historically, desktop displays could be limited to as few as 256 colours. The industry devised a set of 216 colours which were expected to be sufficiently distinct yet able to be rendered by all desktop and laptop computers.
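The web-safe palette uses six evenly spaced levels for each of the red, green and blue channels, which gives 6 × 6 × 6 = 216 colours. A short Python sketch for snapping an arbitrary colour to its nearest web-safe neighbour follows; the function name `nearest_web_safe` is my own.

```python
WEB_SAFE_LEVELS = (0, 51, 102, 153, 204, 255)  # 0x00, 0x33, 0x66, 0x99, 0xCC, 0xFF


def nearest_web_safe(r, g, b):
    """Snap each channel of an RGB colour to the nearest web-safe level."""

    def snap(channel):
        return min(WEB_SAFE_LEVELS, key=lambda level: abs(level - channel))

    return (snap(r), snap(g), snap(b))
```

Picking test colours from this set is one way to make expected values easier to predict when you control the input data.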

Dithering

Dithering is a process that ‘decides’ what value to specify e.g. where the current value isn’t viable. It can apply to choice of colour (as mentioned in the section on colour palettes), location e.g. of an element on screen, etc. We have discovered that some smartphone models vary the location of text slightly (by a pixel or so) between one run of an application and another run. This means the screenshots can vary even when the platform and the app remain constant!

Fonts

The choices of font and appearance of text (e.g. font size, italic, bold) may have a significant effect on how the text is rendered on screen and on how much of the text is visible. If the tests match the images pixel-by-pixel then virtually any change of font or appearance may cause the image matching, and therefore the test, to fail.

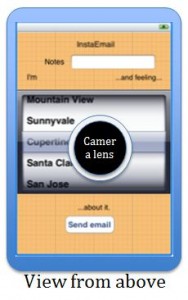

Parallax

Parallax in terms of Test Automation is the effect of positioning a camera above the screen of the device. The centre of the screen is closer, and takes more space, than the extremes of the screen. This distorts the recorded images. The following diagram shows a simplified version of why parallax occurs.

Lighting and reflection

Lighting and reflection can also adversely affect Test Automation that relies on an external camera to capture screenshots.

Compensating for the various differences

- Tolerances, e.g. for colours, for things like the percentage of differences to allow. Fuzzy matching e.g. to find a small image within an area of the screenshot.

- Blanking out or ignoring parts of the image.

- Auto-generating the images we want to match against (this could be an entire section, I expect).

- Controlling the input data, picking distinct colours, fonts, etc. that are easier to match programmatically (in the Test Automation code).

- Controlling the lighting, picking the choice of camera carefully, etc.

- Deciding which format to capture and store images in.