Elfriede Dustin, of Innovative Defense Technology (IDT), is the author of several books, including Automated Software Testing, Quality Web Systems, and her latest, Effective Software Testing. In this interview, Dustin discusses her views on test design, scaling automation, and the current state of test automation tools.

LogiGear: Test design is an important ingredient in successful test automation, and we at LogiGear Magazine are curious how you approach the problem. What methods do you use to ensure that your teams develop tests that are ready for, or supported by, automation?

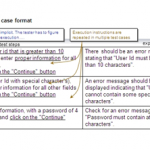

Dustin: How we approach test design depends entirely on the testing problem we are solving. For example, we support the testing of mission-critical systems using IDT’s Automated Test and Re-Test (ATRT) solution. For some of these systems, automated testing of the graphical user interface (GUI) is important because the system under test (SUT) has operator-intensive GUI inputs that absolutely need to work. For those systems, we base the test design on the GUI requirements, GUI scenarios, expected behavior, and scenario-based expected results.

Other mission-critical systems we test need interface testing, in which hundreds or thousands of messages pass back and forth between interfaces and the message data has to be simulated (here we have to test load, endurance, and functionality). We might have to study the detailed software requirements for message values, data inputs, and expected results, and/or examine the SUT’s Software Design Documents (SDDs) for such data, so that we can ensure the tests we are designing, or tests that already exist, are sufficient to validate those messages against the requirements. Finally, there is little use in automating the testing of thousands of messages if doing so only adds to the analysis workload. Therefore, the analysis of the message data needs to be automated as well, and that again requires a different test design. Many more test design approaches exist, and they need to be applied according to the testing problem we are trying to solve, such as testing web services, databases, and others.
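The interview does not describe how ATRT performs this message analysis. As a rough illustration only, the Python sketch below automates the kind of check described above: each captured message is compared against value ranges taken from the requirements, and the results are summarized so that nobody has to review thousands of messages by hand. The field names, the ranges, and the `FieldRequirement`, `validate_message`, and `analyze_messages` names are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class FieldRequirement:
    """Hypothetical requirement: a message field must lie within [low, high]."""
    field: str
    low: float
    high: float


def validate_message(msg: dict, reqs: list[FieldRequirement]) -> list[str]:
    """Return human-readable violations for one message (empty list = pass)."""
    violations = []
    for req in reqs:
        if req.field not in msg:
            violations.append(f"{req.field}: missing")
        elif not (req.low <= msg[req.field] <= req.high):
            violations.append(
                f"{req.field}={msg[req.field]} outside [{req.low}, {req.high}]"
            )
    return violations


def analyze_messages(messages: list[dict], reqs: list[FieldRequirement]) -> dict:
    """Validate every captured message and return a summary report."""
    failures = {}
    for index, msg in enumerate(messages):
        problems = validate_message(msg, reqs)
        if problems:
            failures[index] = problems
    return {"total": len(messages), "failed": len(failures), "failures": failures}


# Usage with made-up requirements and three captured messages.
reqs = [
    FieldRequirement("altitude_ft", 0, 60000),
    FieldRequirement("heading_deg", 0, 359),
]
messages = [
    {"altitude_ft": 12000, "heading_deg": 90},  # within range
    {"altitude_ft": -5, "heading_deg": 90},     # altitude out of range
    {"heading_deg": 400},                       # altitude missing, heading out of range
]
report = analyze_messages(messages, reqs)
print(report["total"], report["failed"])
```

In a real test program the requirement table would be derived from the requirements or SDDs mentioned above rather than typed in by hand; the point of the sketch is only that the pass/fail analysis, not just the message generation, runs without human review.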

ATRT provides a modeling component for any type of test: GUI, message-based, a combination of the two, and more. This modeling component provides a more intuitive view of the test flow than the plain text of a test plan or test procedure document. The model view lends itself to producing designs that are more likely to ensure the automated test meets the requirements the testers set out to validate, and it reduces rework or redesign once the test is automated.

LogiGear: What are your feelings on the different methods of test design, from keyword test design and behavior-driven development (e.g., the Cucumber tool) to scripted test automation of manual test case narratives?

Dustin: We view keyword-driven or behavior-driven tests as automated testing approaches, not necessarily as test design approaches. We generally try to keep the terminology of test design approaches separate from that of automated testing approaches. Our ATRT solution provides a keyword-driven approach: the tester (automator) selects the required keyword action via an ATRT-provided icon, and the automation code is generated behind the scenes. We find the keyword/action-driven approach to be the most effective in helping test automators, and in our case ATRT users, build effective tests quickly and easily.
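To make the keyword-driven idea concrete, here is a minimal Python sketch that uses a hypothetical in-memory `FakeScreen` in place of a real GUI. It is not how ATRT works internally (ATRT generates the automation code from the icons the tester selects); it only illustrates the general pattern, in which a test is a table of keyword rows and an interpreter dispatches each keyword to a registered action function. The keyword names `EnterText`, `Click`, and `VerifyText` are illustrative assumptions.

```python
from __future__ import annotations

from typing import Callable


class FakeScreen:
    """Hypothetical stand-in for a GUI under test: named fields holding text."""

    def __init__(self) -> None:
        self.fields: dict[str, str] = {}
        self.clicked: list[str] = []


# Registry mapping keyword names to the action functions that implement them.
KEYWORDS: dict[str, Callable] = {}


def keyword(name: str):
    """Decorator that registers an action function under a keyword name."""
    def register(fn: Callable) -> Callable:
        KEYWORDS[name] = fn
        return fn
    return register


@keyword("EnterText")
def enter_text(screen: FakeScreen, field: str, text: str) -> None:
    screen.fields[field] = text


@keyword("Click")
def click(screen: FakeScreen, control: str) -> None:
    screen.clicked.append(control)


@keyword("VerifyText")
def verify_text(screen: FakeScreen, field: str, expected: str) -> None:
    actual = screen.fields.get(field)
    if actual != expected:
        raise AssertionError(f"{field}: expected {expected!r}, got {actual!r}")


def run_test(steps: list[tuple], screen: FakeScreen) -> None:
    """Execute keyword rows in order; the tester writes rows, not code."""
    for number, (name, *args) in enumerate(steps, start=1):
        if name not in KEYWORDS:
            raise KeyError(f"step {number}: unknown keyword {name!r}")
        KEYWORDS[name](screen, *args)


# A test expressed purely as keyword rows.
login_test = [
    ("EnterText", "username", "operator1"),
    ("Click", "LoginButton"),
    ("VerifyText", "username", "operator1"),
]
screen = FakeScreen()
run_test(login_test, screen)
```

The design choice this illustrates is the separation Dustin describes: the person writing `login_test` only chooses actions and data, while the code behind each keyword is written (or, in ATRT's case, generated) once and reused.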

LogiGear: How have you dealt with the problems of scaling automation? Do you strive for a certain percentage of all tests to be automated? If so, what is that percentage and how often have you attained it?

Dustin: Again, the answer depends: are we talking about scaling automation for message-based/background testing, or for GUI-based testing? Scaling message-based/background automated testing seems to be an easier problem to solve than scaling GUI-based automated testing.

We don’t necessarily strive for a certain percentage of tests to be automated. Instead, we use a matrix of criteria to choose the tests that lend themselves to automation and to set aside those that don’t. We also build an automated test strategy before we propose automating any tests. The strategy begins with understanding the test regimen and test objectives, and then prioritizes what to automate based on the likely return on investment. Although everything a human tester does can, with enough time and resources, be automated, not all tests should be automated. Automation has to make sense: there has to be some measurable return on the investment before we recommend it. “Will automating this increase the probability of finding defects?” is a question that’s rarely asked when it comes to automated testing. In our experience, there are ample tests that are ripe for significant returns through automation, so we are hardly hurting our business opportunities when we tell a customer that a certain test is not a good candidate for automation.

Conversely, when people who have tried automation in their test programs claim that their tests are 100% automated, my first question is: “What code coverage does your 100% test automation achieve?” One hundred percent test automation does not mean 100% code coverage or 100% functional coverage. One of the most immediate returns from automation is the expansion of test coverage without increasing test time (and often while actually reducing it), especially for automated backend/message testing.
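Dustin does not publish the contents of her selection matrix. The sketch below is one hypothetical way to combine gating criteria with a simple return-on-investment estimate; the criteria, the hour figures, the one-year horizon, the ROI formula, and the 0.5 threshold are all assumptions for illustration, not IDT's actual values.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """A test being considered for automation (all fields are hypothetical)."""
    name: str
    runs_per_year: int     # how often the test is executed, e.g., per regression cycle
    manual_hours: float    # effort for one manual execution
    build_hours: float     # one-time effort to automate the test
    upkeep_hours: float    # maintenance effort per automated run
    stable: bool           # is the feature under test stable enough to automate?
    deterministic: bool    # does the test have a machine-checkable expected result?


def roi(c: Candidate) -> float:
    """One-year ROI: (hours saved - hours invested) / hours invested."""
    saved = c.manual_hours * c.runs_per_year
    invested = c.build_hours + c.upkeep_hours * c.runs_per_year
    return (saved - invested) / invested


def should_automate(c: Candidate, min_roi: float = 0.5) -> bool:
    """Apply the gating criteria first, then require a sufficient return."""
    return c.stable and c.deterministic and roi(c) >= min_roi


candidates = [
    Candidate("message regression", 50, 8.0, 40.0, 0.5, True, True),
    Candidate("one-off usability review", 1, 6.0, 30.0, 0.0, True, False),
    Candidate("GUI screen still in redesign", 20, 2.0, 24.0, 1.0, False, True),
]
selected = [c.name for c in candidates if should_automate(c)]
print(selected)
```

Keeping the gating criteria separate from the ROI estimate means a test that does not lend itself to automation is rejected even if its estimated return happens to look attractive, which mirrors the point that not every automatable test should be automated.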

LogiGear: What is your feeling on the current state of test automation tools? Do you feel that they are adequately addressing the needs of testers? Both those who can script and those who cannot?

Dustin: Most of the current crop of test automation tools focus on Windows or web development. Most existing tools must be installed on the SUT, thus modifying the SUT configuration, and most are dependent on a particular GUI technology. In our test environments we have to work with various operating systems in various states of development, using various programming languages and GUI technologies. Since none of the tools on the market met our extensive criteria for an automated testing tool, we at IDT developed our own solution, the ATRT tool mentioned earlier. ATRT is GUI-technology neutral, doesn’t need to be installed on the SUT, is OS independent, and uses keyword actions. No software development experience is required. ATRT provides the tester with every GUI action a mouse provides, in addition to various other keywords. Our experience with customers using ATRT for the first time is that it provides a very intuitive user interface and is easy to use. Interestingly, we also find that some of the best test automators with ATRT are younger testers who grew up playing video games and, in the process, developed hand-eye coordination. Some testers even find working with ATRT fun, because it takes them away from the monotony of manually testing the same features over and over again with each new release.

With ATRT, we wanted to provide a solution in which testers could model a test before the SUT becomes available and/or see a visual representation of their tests. ATRT provides a test automation modeling component.

We needed a solution that could run various tests concurrently (whether GUI tests or message-based/backend/interface tests) on multiple systems. ATRT has those features.

A tester should be able to focus on test design and new features, not spend time crafting elaborate automated testing scripts. Asking testers to do such work risks distracting them from what they are trying to accomplish: verifying that the SUT behaves as expected.

LogiGear: In your career, what has been the single greatest challenge to getting test automation implemented effectively?

Dustin: Test automation can’t be treated as a side activity or a nice-to-have, as in “whenever we have some time, let’s automate a test.” Automated testing needs to be an integral, strategically planned part of the software development lifecycle (and is a mini-lifecycle in itself); it cannot be an afterthought. See our books “Automated Software Testing” and “Implementing Automated Software Testing” for more on the automated testing lifecycle.

LogiGear: Finally, what is the greatest benefit that test automation provides to a software development team and/or the test team?

Dustin: Systems have become increasingly complex, and we can’t test them the same way we did 20 years ago. Automated software testing, applied with a return-on-investment strategy built collaboratively with subject matter and testing experts, provides speed, agility, and efficiency to the software development team. When automation is done right, no software or testing team working without it can test anywhere near as much, as quickly, as consistently, and as repeatedly.